This blog discusses the AWS services and tools that help improve model performance and outcomes, with a focus on what is relevant to the AWS Certified Machine Learning Engineer-Associate (MLA-C01) certification. You can use these tools to automate performance tracking, anomaly detection, and real-time debugging. The goal is a model that stays accurate, reliable, and aligned with business goals, even in dynamic environments.

AWS Certified Machine Learning Engineer-Associate (MLA-C01) overview

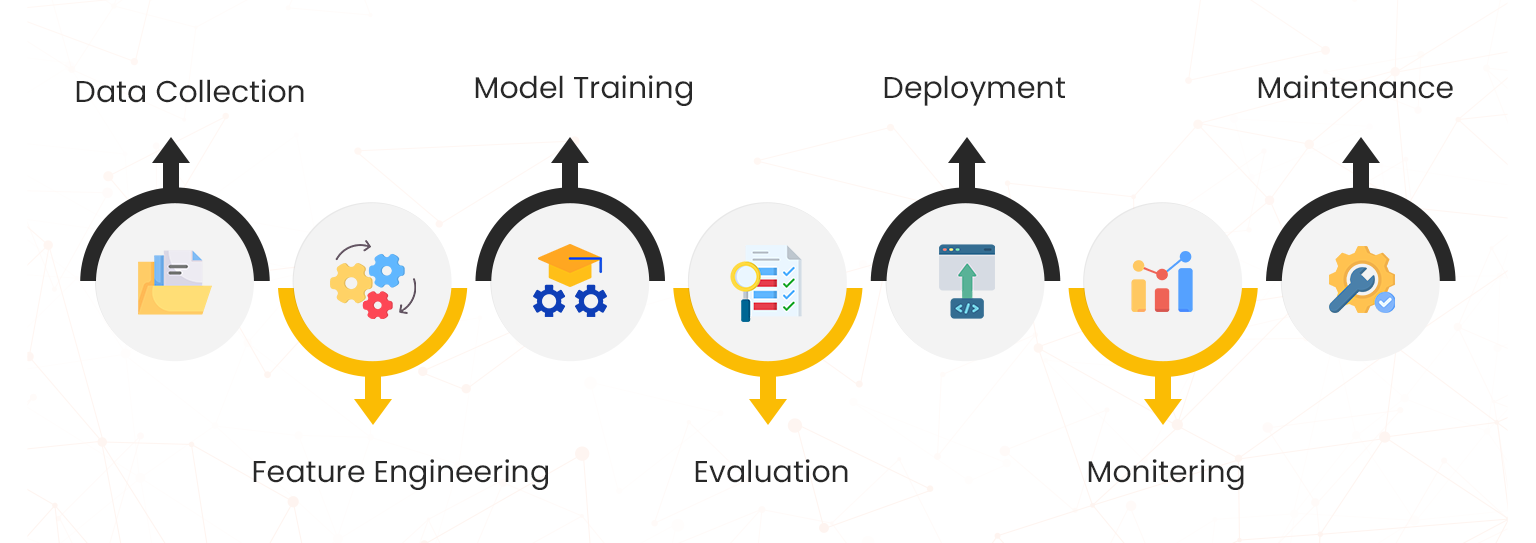

Launched in June 2024, the AWS Certified Machine Learning Engineer-Associate (MLA-C01) exam is one of the newest AWS certifications. It aligns with the evolving needs of the industry and of ML engineers. The MLA-C01 exam validates your ability to build, deploy, and manage machine learning solutions and workflows on AWS. AWS recommends at least one year of experience in ML or a related field before you take the exam. A thorough knowledge of all stages of the ML lifecycle will help you pass the exam:

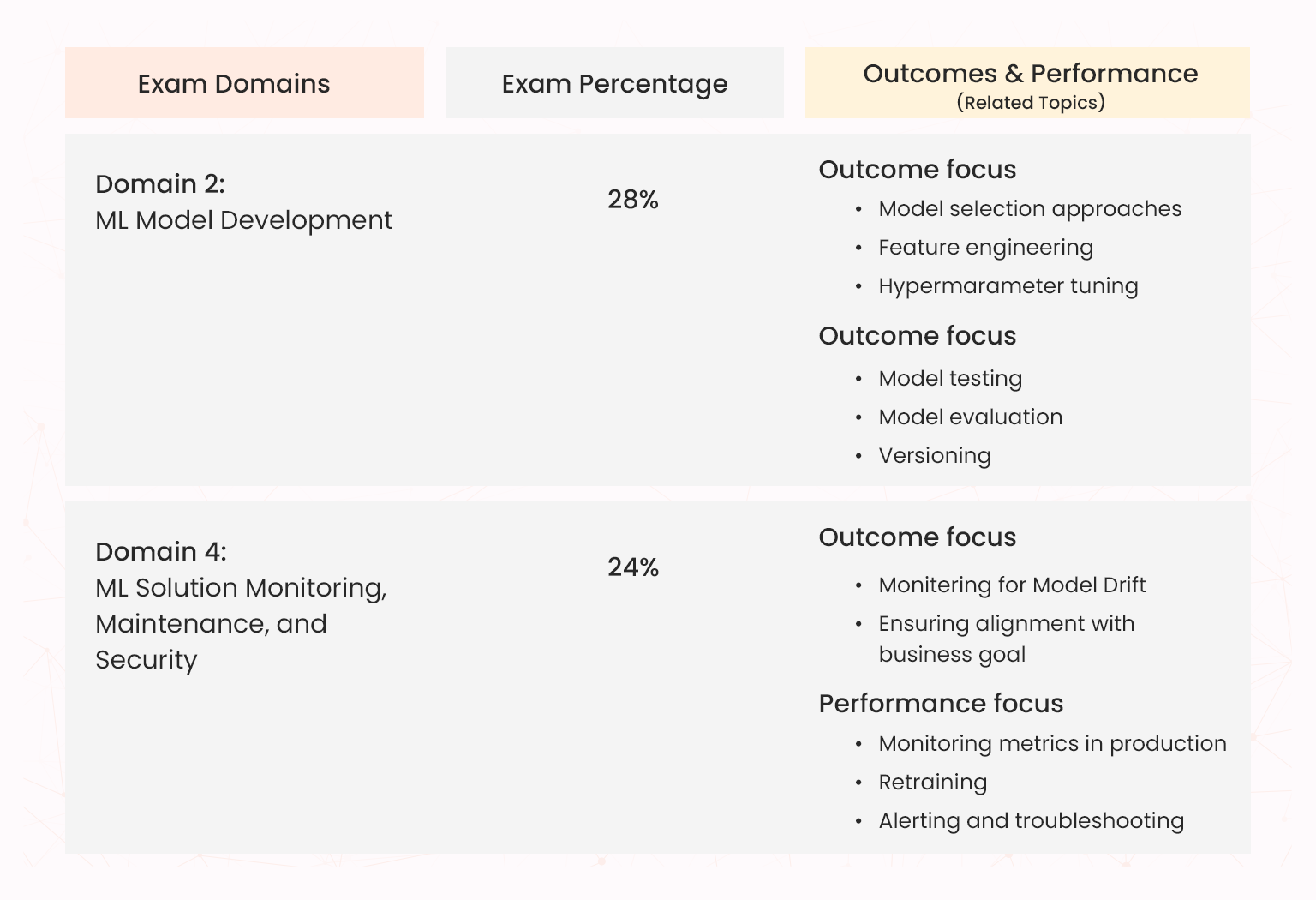

The primary focus of this exam is Amazon SageMaker (now renamed Amazon SageMaker AI) and its features and tools for MLOps. The exam expects practical experience and a deep understanding of the settings and configurations of AWS ML services and tools. In this blog, we focus on the best practices for evaluating and improving the performance of ML models using appropriate metrics. These best practices are covered predominantly in domains 2 and 4 of the exam guide and are based on the AWS Well-Architected Machine Learning Lens.

The following table shows how evaluation and monitoring topics are covered in domains 2 and 4 of the AWS Certified Machine Learning Engineer-Associate exam.

The exam questions are scenario-based, requiring you to think about which evaluation and monitoring metrics to use to measure the performance of a certain type of model.

Best Practices for Improving Model Outcomes with AWS

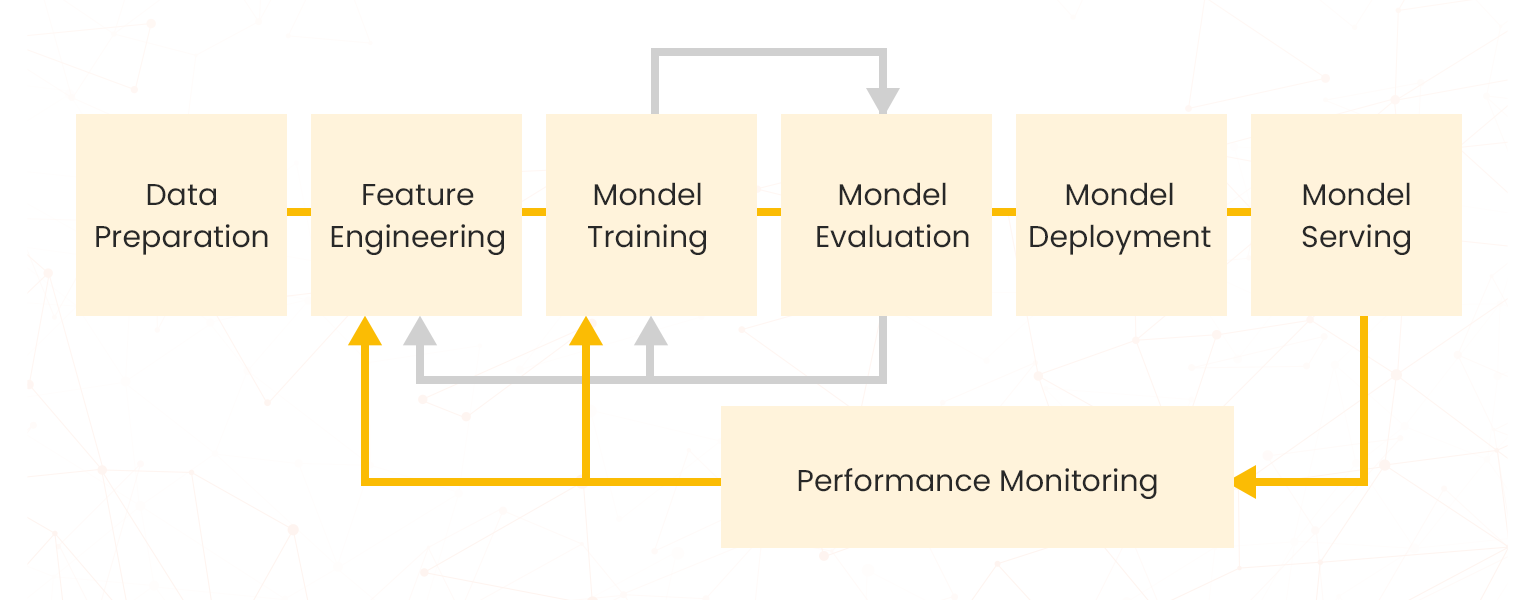

The ML lifecycle is the end-to-end process of developing, deploying, and maintaining ML models. The process is iterative, and each stage directly affects the model's outcomes. Before you develop the model, plan the improvement drivers you will use to optimize its performance. Examples include collecting more data, cross-validation, feature engineering, hyperparameter tuning, and ensemble methods.
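Cross-validation is one of the improvement drivers named above. It estimates how well a model generalizes by rotating which slice of the data is held out for scoring. The following is a minimal plain-Python sketch of k-fold cross-validation, not an AWS API. `fit` and `score` are hypothetical callables you supply. In practice, you would more likely use a library such as scikit-learn.

```python
import random
from statistics import mean


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle range(n_samples) and split it into k folds whose sizes differ by at most one."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds, start = [], 0
    for i in range(k):
        # The first (n_samples % k) folds receive one extra sample.
        size = n_samples // k + (1 if i < n_samples % k else 0)
        folds.append(indices[start:start + size])
        start += size
    return folds


def cross_validate(xs, ys, fit, score, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold, and return the mean score across folds."""
    scores = []
    for held_out in k_fold_indices(len(xs), k, seed):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(score(model, [xs[i] for i in held_out], [ys[i] for i in held_out]))
    return mean(scores)


if __name__ == "__main__":
    # Hypothetical baseline "model": always predict the mean training label.
    def fit_mean(xs, ys):
        return mean(ys)

    # Negative mean absolute error, so that a higher score is better.
    def neg_mae(model, xs, ys):
        return -mean(abs(y - model) for y in ys)

    xs = list(range(20))
    ys = [2 * x for x in xs]
    print(cross_validate(xs, ys, fit_mean, neg_mae, k=5))
```

Averaging over folds gives a less noisy estimate than a single train/validation split. That matters when you compare candidate improvements against each other.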

Here are some best practices to optimize the entire lifecycle to improve model outcomes:

- Gather high-quality data

- Automate ML for consistency

- Define relevant evaluation metrics

- Implement model monitoring

Gather high-quality data

ML models are only as good as the data that is used to train them. Ensure clean, well-labeled, and diverse data is used for training.

The following AWS services help you create high-quality data:

- SageMaker Data Wrangler: explore and import data from a variety of popular sources and transform it into a structured format within a single pipeline. More than 300 built-in data transformations let you normalize, transform, and combine features without writing any code.

- SageMaker Ground Truth: combine manual and automated data labeling to produce accurate, high-quality training datasets.
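You can express "clean, well-labeled, and diverse" data as a few concrete checks. The following minimal plain-Python sketch is not a Data Wrangler or Ground Truth API. It reports missing-value rates, exact duplicate rows, and label counts for records stored as dictionaries. The column names in the usage example are hypothetical.

```python
from collections import Counter


def data_quality_report(rows, feature_columns, label_column):
    """Report missing-value rates, exact duplicate rows, and label counts for a list of dict records."""
    total = len(rows)
    columns = list(feature_columns) + [label_column]
    # Treat None and empty strings as missing values.
    missing_rate = {
        col: (sum(1 for r in rows if r.get(col) in (None, "")) / total if total else 0.0)
        for col in columns
    }
    # Rows with identical values in every checked column count as duplicates.
    row_counts = Counter(tuple(r.get(col) for col in columns) for r in rows)
    duplicate_rows = sum(n - 1 for n in row_counts.values())
    # A heavily skewed label distribution suggests collecting or labeling more data.
    label_counts = dict(Counter(r.get(label_column) for r in rows))
    return {
        "rows": total,
        "missing_rate": missing_rate,
        "duplicate_rows": duplicate_rows,
        "label_counts": label_counts,
    }


if __name__ == "__main__":
    # Hypothetical customer-churn records.
    records = [
        {"age": 34, "income": 52000, "churned": 0},
        {"age": None, "income": 61000, "churned": 1},
        {"age": 34, "income": 52000, "churned": 0},
    ]
    print(data_quality_report(records, ["age", "income"], "churned"))
```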

Detect, analyze, and alert

Monitor training progress and detect anomalies while training or retraining. AWS provides the following two tools:

- SageMaker Debugger: debug training jobs and resolve issues that hurt your model's performance. Debugger can send alerts when it detects training anomalies and take actions against them, such as stopping the training job. Its visualizations of the collected metrics help you identify the root cause.

- Amazon SageMaker with TensorBoard: use the visualization tools of TensorBoard in SageMaker to analyze the overall training progress and trends.
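SageMaker Debugger includes built-in rules, such as a loss-not-decreasing rule, that watch training metrics and raise an alert when a condition holds. The plain-Python function below sketches that idea only and is not Debugger's implementation. It compares the mean loss of consecutive windows of steps and flags the first window whose relative improvement falls below a threshold. The `window` and `min_improvement_pct` defaults are arbitrary assumptions.

```python
def loss_not_decreasing(losses, window=10, min_improvement_pct=0.1):
    """Return the step index where the loss stopped improving, or None if it kept improving.

    Each window's mean loss is compared with the previous window's mean loss;
    an improvement below min_improvement_pct percent is treated as a stall.
    """
    for start in range(window, len(losses) - window + 1, window):
        prev = sum(losses[start - window:start]) / window
        curr = sum(losses[start:start + window]) / window
        improvement_pct = (prev - curr) / abs(prev) * 100 if prev else 0.0
        if improvement_pct < min_improvement_pct:
            return start
    return None


if __name__ == "__main__":
    # Hypothetical loss curve that plateaus after 20 steps.
    losses = [1.0] * 10 + [0.5] * 20
    stalled_at = loss_not_decreasing(losses)
    if stalled_at is not None:
        print(f"Alert: loss stopped decreasing at step {stalled_at}")
```

In a managed setup, you would attach Debugger's own rule to the training job instead and route its findings to an alert. The sketch only shows the kind of condition such a rule evaluates.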

Automate ML for consistency

Manual ML is prone to error, inconsistency, and oversight, which can negatively impact model outcomes. SageMaker automation features improve consistency and model quality, which leads to more accurate outcomes.

- SageMaker Canvas: build models through a no-code, UI-based AutoML experience.

- SageMaker Autopilot: automate the end-to-end process of building, training, tuning, and deploying machine learning models.

- SageMaker JumpStart: use pretrained, open-source models for various problem types to help you get started with ML.

- MLflow with SageMaker: create, manage, analyze, and compare your machine learning experiments to gain insights and deploy your best-performing models.

- SageMaker built-in algorithms, pretrained models, and prebuilt solution templates: train and deploy machine learning models quickly.

Define relevant evaluation metrics

To evaluate how well a model performs on a given task, establish metrics that relate directly to the KPIs defined in the business goal identification phase. Then evaluate those metrics against real-world use cases to maximize business value. Selecting the right performance metric is crucial, because different metrics highlight different aspects of model performance and different machine learning tasks use different metrics. Several SageMaker tools provide built-in and customizable metrics for both supervised and unsupervised learning tasks. These metrics make it easier to compare different ML models, tasks, or iterations.

- SageMaker Clarify: evaluate ML models, detect bias, and explain model predictions. Clarify can detect bias in the training data before training, in the trained model after training, and in a model running in production.

- SageMaker Canvas: provides overview and scoring information for the different types of models. The Canvas leaderboard lets you compare key performance metrics (for example, accuracy, precision, recall, and F1 score) to identify the best model for your data.

- SageMaker Autopilot: produces metrics that measure the predictive quality of machine learning model candidates. Autopilot model quality reports provide visibility into your model's performance for regression and classification problems.

- Amazon Bedrock: evaluate the performance and effectiveness of Amazon Bedrock models and knowledge bases. Bedrock offers three evaluation methods: LLM-as-a-judge, programmatic, and human evaluation.
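Classification metrics such as the accuracy, precision, recall, and F1 score shown in the Canvas leaderboard are derived from confusion-matrix counts. The plain-Python sketch below shows that derivation. The usage example uses hypothetical data to show why metric choice matters. On an imbalanced dataset, a model that never predicts the positive class still reaches high accuracy, even though its recall is zero.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary classifier from label lists."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn
    # Define precision, recall, and F1 as 0.0 when their denominators are zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    y_true = [0] * 95 + [1] * 5   # hypothetical data: 5% positive class, for example fraud
    y_pred = [0] * 100            # a model that always predicts "not fraud"
    print(classification_metrics(y_true, y_pred))
    # {'accuracy': 0.95, 'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```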

Implement model monitoring

After you deploy the model, you must continuously monitor its performance and success in production. The model monitoring system must capture data, compare that data to the training set, define rules to detect issues, and send alerts. The issues detected in the monitoring phase include:

- Data quality

- Model quality

- Bias drift

- Feature attribution drift
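You can sketch the monitoring loop described above (capture data, compare it with the training set, apply a rule, alert) in plain Python. SageMaker Model Monitor implements this loop with baseline statistics and constraints computed from your training data. The code below is not Model Monitor's implementation. It uses a simplified rule chosen for illustration: flag any numeric feature whose live mean moves more than a chosen number of baseline standard deviations from the training mean. Feature names and the default threshold are hypothetical.

```python
from statistics import mean, pstdev


def build_baseline(training_rows, features):
    """Record the mean and population standard deviation of each numeric feature in the training set."""
    return {
        f: {"mean": mean(r[f] for r in training_rows), "std": pstdev([r[f] for r in training_rows])}
        for f in features
    }


def detect_drift(baseline, live_rows, max_shift_in_std=2.0):
    """Return {feature: shift} for features whose live mean moved more than max_shift_in_std baseline stds."""
    violations = {}
    for f, stats in baseline.items():
        live_mean = mean(r[f] for r in live_rows)
        # A constant training feature has std 0; any change in its value is then flagged.
        std = stats["std"] or 1e-12
        shift = abs(live_mean - stats["mean"]) / std
        if shift > max_shift_in_std:
            violations[f] = round(shift, 2)
    return violations


if __name__ == "__main__":
    # Hypothetical training data and a batch of captured production requests.
    training = [{"age": a, "income": 40000 + 1000 * a} for a in range(20, 60)]
    baseline = build_baseline(training, ["age", "income"])
    live = [{"age": a, "income": 40000 + 1000 * a} for a in range(55, 75)]
    print(detect_drift(baseline, live))
```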

Left uncorrected, data quality problems, model quality degradation, bias drift, and feature attribution drift can lead to performance degradation or serious compliance issues. Here are some examples of AWS services and tools for model monitoring:

- SageMaker Debugger: get insights during training to identify and resolve issues.

- SageMaker Model Monitor: check monitoring reports on deployed models for data drift and performance degradation, and use its alerts to trigger retraining when necessary. Model Monitor is integrated with Amazon SageMaker Clarify to help you identify potential bias drift

- Amazon CloudWatch: get metrics and alarms to monitor the performance of your ML models

- CloudWatch Logs: monitor logs generated by SageMaker endpoints, training jobs, and other infrastructure

- Amazon EventBridge: automate responses to events generated by your ML workflows

- AWS CloudTrail: track API calls and user activities across AWS ML workflows
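SageMaker endpoints publish invocation metrics to Amazon CloudWatch. These include `ModelLatency` in the `AWS/SageMaker` namespace, reported in microseconds, so an alarm on that metric is a common first monitoring step. The sketch below only builds the keyword arguments for the boto3 CloudWatch `put_metric_alarm` call, so it runs without AWS credentials. The endpoint name, variant name, threshold, evaluation settings, and SNS topic ARN are placeholder assumptions.

```python
def latency_alarm_request(endpoint_name, variant_name, threshold_ms, sns_topic_arn):
    """Build kwargs for CloudWatch PutMetricAlarm on a SageMaker endpoint's ModelLatency metric."""
    return {
        "AlarmName": f"{endpoint_name}-{variant_name}-high-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 300,                     # seconds aggregated into each datapoint
        "EvaluationPeriods": 3,            # require 3 consecutive breaching periods
        "Threshold": threshold_ms * 1000,  # ModelLatency is reported in microseconds
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],   # notify an SNS topic when the alarm fires
    }


# Usage (requires boto3 and AWS credentials; all names are placeholders):
# import boto3
# boto3.client("cloudwatch").put_metric_alarm(
#     **latency_alarm_request("my-endpoint", "AllTraffic", 500, "<your-sns-topic-arn>")
# )
```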

AWS ML optimization tools and strategies

Model optimization refines a model to enhance its accuracy, efficiency, and ability to generalize to new data. Pre-deployment evaluation and post-deployment monitoring are the key strategies that drive it. After calculating the evaluation metrics, you optimize the model by iterating on the earlier steps of the ML lifecycle. AWS provides several tools and features for this iterative optimization process.

Optimization best practices

Here are some common optimization best practices to improve model outcomes.

- Retrain model

- Create tracking and version control mechanisms

- Tune hyperparameters

Retrain model

As machines' operating modes and health change over time, the incoming data drifts away from the training data. Models must then be retrained on more recent information, such as new data and labels. Use the following AWS tools:

- SageMaker AI Pipelines: create a retraining pipeline and automate its execution.

- AWS Step Functions: define all the steps in the retraining workflow and set up alerts to automate retraining. Step Functions integrates with EventBridge, so an EventBridge rule can start a Step Functions workflow that initiates the retraining tasks in the training pipeline.
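The EventBridge and Step Functions integration can be wired as follows. An EventBridge rule matches the event that CloudWatch emits when an alarm (for example, one that tracks a model quality metric) enters the `ALARM` state. The rule then targets a Step Functions state machine that runs the retraining steps. The sketch builds arguments for the boto3 EventBridge `put_rule` and `put_targets` calls without calling AWS. The alarm name, state machine ARN, and IAM role ARN are hypothetical.

```python
import json


def retraining_rule(alarm_name, state_machine_arn, role_arn):
    """Build PutRule and PutTargets kwargs that start a retraining state machine when an alarm fires.

    Assumes alarm_name uses only characters that are also valid in EventBridge rule names.
    """
    # Match CloudWatch's alarm state change event for this alarm entering ALARM.
    pattern = {
        "source": ["aws.cloudwatch"],
        "detail-type": ["CloudWatch Alarm State Change"],
        "detail": {"alarmName": [alarm_name], "state": {"value": ["ALARM"]}},
    }
    rule_name = f"{alarm_name}-start-retraining"
    put_rule = {"Name": rule_name, "EventPattern": json.dumps(pattern), "State": "ENABLED"}
    put_targets = {
        "Rule": rule_name,
        # The role must allow EventBridge to call states:StartExecution on the state machine.
        "Targets": [{"Id": "retraining-state-machine", "Arn": state_machine_arn, "RoleArn": role_arn}],
    }
    return put_rule, put_targets


# Usage (requires boto3 and AWS credentials; ARNs are placeholders):
# import boto3
# rule, targets = retraining_rule("model-quality-drop", "<state-machine-arn>", "<events-role-arn>")
# events = boto3.client("events")
# events.put_rule(**rule)
# events.put_targets(**targets)
```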

Create tracking and version control mechanisms

Log and track your model versions so that, if something goes wrong with a newly deployed version, you can roll back to the last known good version. Use the following AWS tools:

- Amazon SageMaker AI Experiments: automatically tracks the inputs, parameters, configurations, and results of your iterations as runs

- SageMaker AI Model Registry: stores, manages, and tracks machine learning models
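In Model Registry, each registered model version carries an approval status (`Approved`, `Rejected`, or `PendingManualApproval`). Rollback therefore comes down to finding the newest approved version that is older than the one that misbehaves. The sketch below applies that selection to records shaped like the summaries returned by the SageMaker `ListModelPackages` API. The sample data and the group name in the comment are hypothetical, and redeploying the chosen version is left out.

```python
def rollback_target(summaries, failing_version):
    """Return the newest Approved model package older than failing_version, or None.

    Each summary needs the ModelPackageVersion and ModelApprovalStatus fields, as in
    the ModelPackageSummaryList entries returned by SageMaker ListModelPackages.
    """
    candidates = [
        s for s in summaries
        if s["ModelApprovalStatus"] == "Approved" and s["ModelPackageVersion"] < failing_version
    ]
    return max(candidates, key=lambda s: s["ModelPackageVersion"], default=None)


# Fetching real summaries (requires boto3 and credentials; results may be paginated):
# import boto3
# resp = boto3.client("sagemaker").list_model_packages(
#     ModelPackageGroupName="churn-models",  # hypothetical group name
#     ModelApprovalStatus="Approved", SortBy="CreationTime", SortOrder="Descending",
# )
# summaries = resp["ModelPackageSummaryList"]

if __name__ == "__main__":
    # Hypothetical versions in one model package group.
    versions = [
        {"ModelPackageVersion": 1, "ModelApprovalStatus": "Approved"},
        {"ModelPackageVersion": 2, "ModelApprovalStatus": "Rejected"},
        {"ModelPackageVersion": 3, "ModelApprovalStatus": "Approved"},
        {"ModelPackageVersion": 4, "ModelApprovalStatus": "Approved"},  # newly deployed, misbehaving
    ]
    print(rollback_target(versions, failing_version=4))
    # {'ModelPackageVersion': 3, 'ModelApprovalStatus': 'Approved'}
```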

Tune hyperparameters

Hyperparameter tuning searches for the combination of hyperparameter values that maximizes model performance. You can use different hyperparameter tuning strategies with SageMaker.

- SageMaker AI automatic model tuning: finds the optimal model by running many training jobs on your dataset. It uses the algorithm and ranges of hyperparameters that you specify. It then chooses the hyperparameter values that result in a model that performs the best, as measured by a metric that you choose.
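In the SageMaker API, the tuning choices described above go into the `HyperParameterTuningJobConfig` section of a `CreateHyperParameterTuningJob` request. These choices are the hyperparameter ranges, the objective metric, and the number of training jobs to run. The sketch below builds only that section as a Python dictionary. It assumes the built-in XGBoost algorithm, which is why it uses the `eta` and `max_depth` ranges and a `validation:auc` objective. The range bounds and job limits are illustrative, not recommendations. A complete request also needs a job name and a training job definition.

```python
VALID_STRATEGIES = {"Bayesian", "Random", "Hyperband", "Grid"}


def tuning_job_config(objective_metric, objective_type="Maximize",
                      strategy="Bayesian", max_jobs=20, max_parallel=2):
    """Build the HyperParameterTuningJobConfig part of a CreateHyperParameterTuningJob request."""
    if strategy not in VALID_STRATEGIES:
        raise ValueError(f"unsupported strategy: {strategy}")
    if strategy == "Grid":
        # Grid search accepts only categorical ranges, and this sketch defines numeric ones.
        raise ValueError("Grid strategy requires categorical-only parameter ranges")
    return {
        "Strategy": strategy,
        "HyperParameterTuningJobObjective": {"Type": objective_type, "MetricName": objective_metric},
        "ResourceLimits": {"MaxNumberOfTrainingJobs": max_jobs, "MaxParallelTrainingJobs": max_parallel},
        "ParameterRanges": {
            # The API expects range bounds as strings.
            "ContinuousParameterRanges": [
                {"Name": "eta", "MinValue": "0.01", "MaxValue": "0.3", "ScalingType": "Logarithmic"},
            ],
            "IntegerParameterRanges": [
                {"Name": "max_depth", "MinValue": "3", "MaxValue": "10", "ScalingType": "Auto"},
            ],
        },
    }


# Usage (requires boto3, credentials, and a TrainingJobDefinition you provide):
# import boto3
# boto3.client("sagemaker").create_hyper_parameter_tuning_job(
#     HyperParameterTuningJobName="xgb-tuning-demo",  # hypothetical name
#     HyperParameterTuningJobConfig=tuning_job_config("validation:auc"),
#     TrainingJobDefinition=training_job_definition,  # hypothetical variable
# )
```

Bayesian search uses the results of earlier training jobs to choose the next hyperparameter values. This usually reaches a good configuration in fewer jobs than random search, at the cost of less parallelism.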

Final thoughts

Creating an ML model that delivers the outcomes you need is a continuous, iterative process that spans all phases of the ML lifecycle and its workflows. Your first model might not deliver accurate predictions, and you might have to try a few more variations. The AWS Certified Machine Learning Engineer-Associate certification equips you with the skills to handle this iterative process. With those skills, you can refine models until they achieve the required predictive accuracy and real-world performance. The exam evaluates your practical skills in implementing AWS performance tools.

Sign up for the Whizlabs AWS Certified Machine Learning Engineer Associate course to prepare for this certification. For additional hands-on experience with the services, check AWS hands-on labs and AWS sandboxes.
