In this blog, let us deep-dive into different AI Key model deployment strategies like Amazon SageMaker, AWS Lambda, AWS Inferentia, Elastic Inference and more from the AWS services. Here, you will also understand the best practices to deploy efficient machine learning models, performance optimization, scalability, and cost-effectiveness.

AWS Certified AI Practitioner Certification—Overview:

The AWS Certified AI Practitioner (AIF-C01) certification is designed in such a way as to help professionals have a strong foundational understanding of Artificial Intelligence (AI), Machine Learning (ML), and Generative AI (GenAI).

As one of the major aspects of AI implementation on AWS is model deployment and how AI models are integrated into real-world applications, let us dive into AWS AI deployment strategies, services, and best practices to help you build expertise in the AI career.

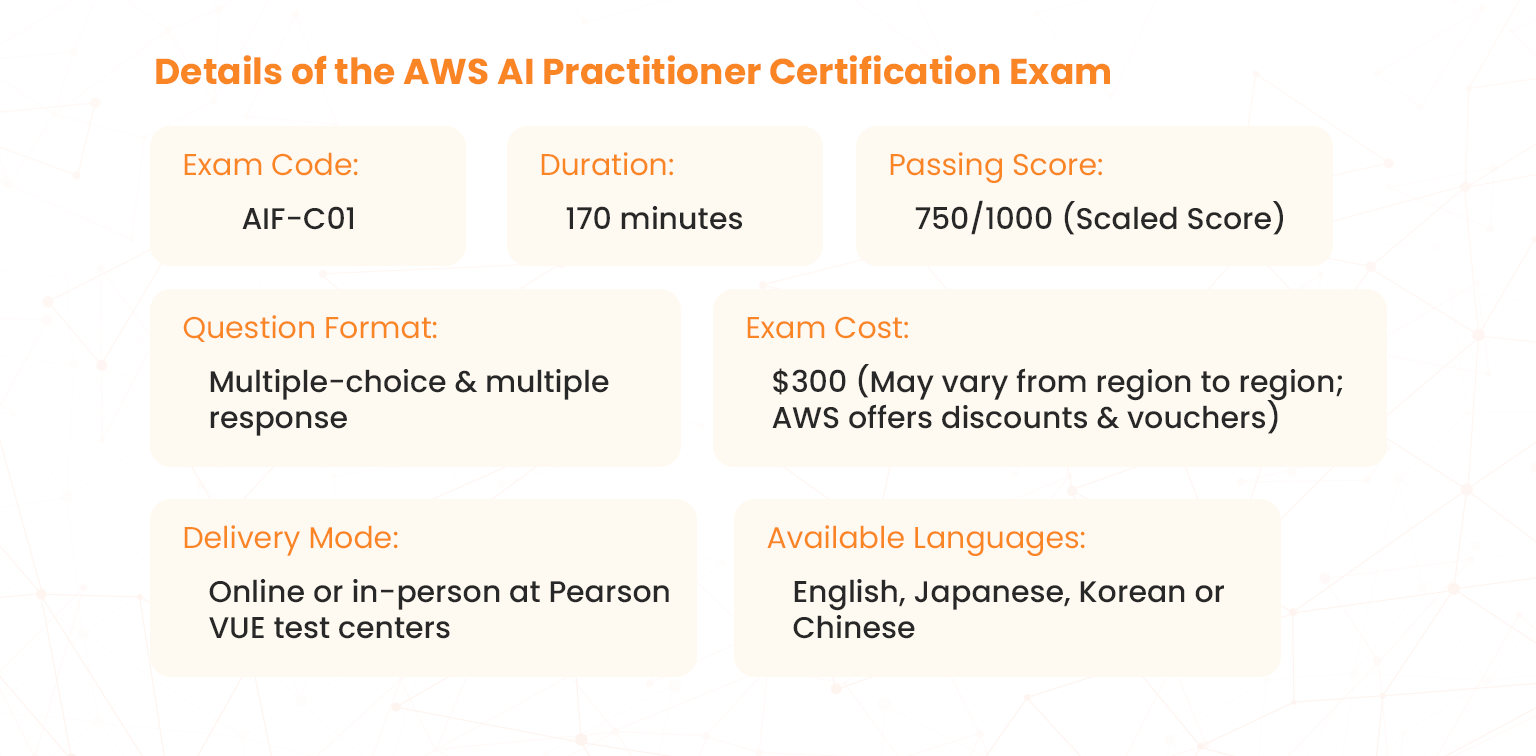

Details of the AWS AI Practitioner Certification Exam:

AWS AI Model Deployment

AWS provides you with numerous services for deploying AI models. With Amazon SageMaker being the most popular among all, it helps developers train, deploy, and manage various machine learning models along with AWS Lambda, Elastic Inference, and AWS Inferentia, which enables cost-effective and optimized AI inference deployments.

AWS AI model deployment strategies involve the following key elements:

- Model Training: Training models using SageMaker and storing them in Amazon S3.

- Model Hosting: Hosting trained models using SageMaker Endpoints or AWS Lambda for real-time inference.

- Scaling Models: Using auto-scaling and load balancing for optimized performance.

- Security & Monitoring: Implementing AWS Identity and Access Management (IAM) roles and monitoring model performance with Amazon CloudWatch.

Various Machine Learning Deployment Strategies

Organizations can incorporate the following deployment strategies that are crucial for AI model success.

- Batch Processing – Suitable for applications that do not require real-time predictions. Processed data is collected and analyzed in scheduled intervals. Ideal for tasks like document processing and offline recommendations.

- Online Inference – Provides real-time predictions, ensuring minimal latency. Used in applications like fraud detection and recommendation systems.

- Edge Deployment—Deploying models on edge devices reduces dependency on cloud resources and enhances privacy. This approach is popular in IoT and real-time decision-making applications.

- Hybrid Deployment – Combining cloud and edge processing for optimized performance and cost efficiency.

- Containerized Deployment – Using AWS Fargate or Amazon EKS for container-based deployments, enabling better portability and version control.

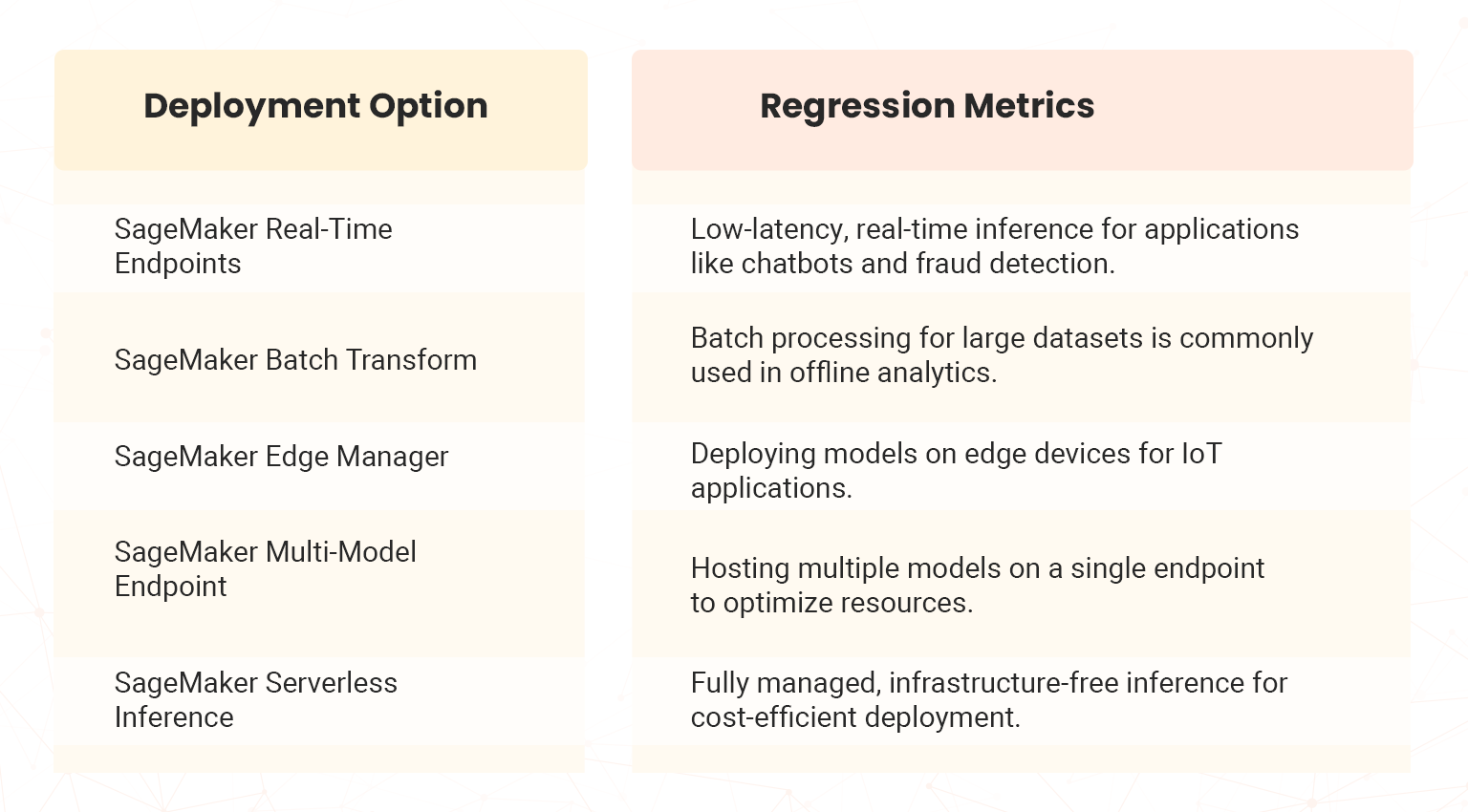

AWS SageMaker Deployment Options

Amazon SageMaker offers multiple deployment options tailored to different business needs:

Cloud-Based AI Model Hosting

AWS provides scalable cloud-based hosting solutions, ensuring high availability and security. Popular hosting solutions include:

- AWS Lambda for AI inference is the best serverless execution for lightweight AI models, which eliminates the need for dedicated infrastructure.

- Amazon Elastic Kubernetes Service (EKS) helps in managing AI workloads using Kubernetes. This ensures high scalability and portability.

- Amazon Elastic Inference enhances inference performance while reducing costs, allowing GPU acceleration.

- Amazon SageMaker Model Registry is a repository that helps manage multiple AI models and their versions for deployment efficiency.

Inference Optimization in AWS

In AI model deployment Inference optimization is a critical aspect that makes fast predictions, which eventually reduces the cost of computation. AWS offers a suite of tools and services designed to enhance inference efficiency, making AI workloads more scalable and pocket-friendly. The AWS inference optimization tools help organizations achieve high-performance, cost-efficient AI deployments, ensuring scalability and sustainability for their AI-driven applications.

- AWS Inferentia is a custom AI chip designed for high-speed inference, reducing infrastructure costs for large-scale deployments.

- Amazon Elastic Inference helps in optimizing cost efficiency by combining GPU acceleration with AI models when required.

- AWS Neuron SDK optimizes deep learning workloads on AWS Inferentia and improves performance on popular frameworks such as PyTorch and TensorFlow.

- AWS Deep Learning Containers are nothing but a pre-packaged environment for TensorFlow, PyTorch, and MXNet. They streamline AI model deployment.

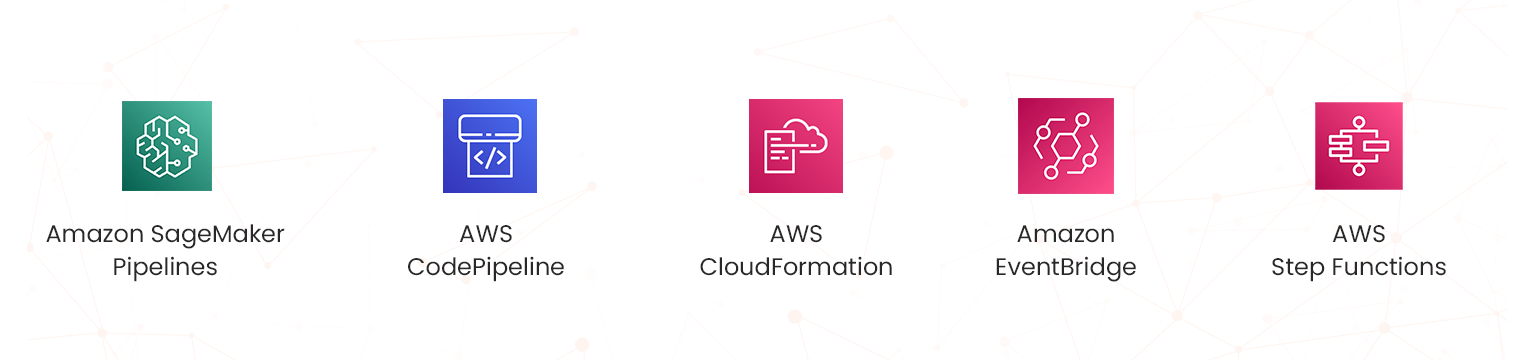

Continuous Integration for AI Models

Continuous integration and continuous deployment (CI/CD) ensure seamless updates and monitoring of AI models. AWS supports AI model CI/CD through:

- Amazon SageMaker Pipelines are responsible for ML workflow automation to streamline model training and deployment.

- AWS CodePipeline integrates with various CI/CD tools like Jenkins and GitHub Action and manages AI deployment workflows.

- AWS CloudFormation enables infrastructure as code (IaC) automation, ensuring consistent deployment across diverse environments.

- Amazon EventBridge helps in event-driven model update automation which in turn enables real-time AI model adaptation.

- AWS Step Functions orchestrate AI workflows to manage the preprocessing of data, training, and seamless deployment.

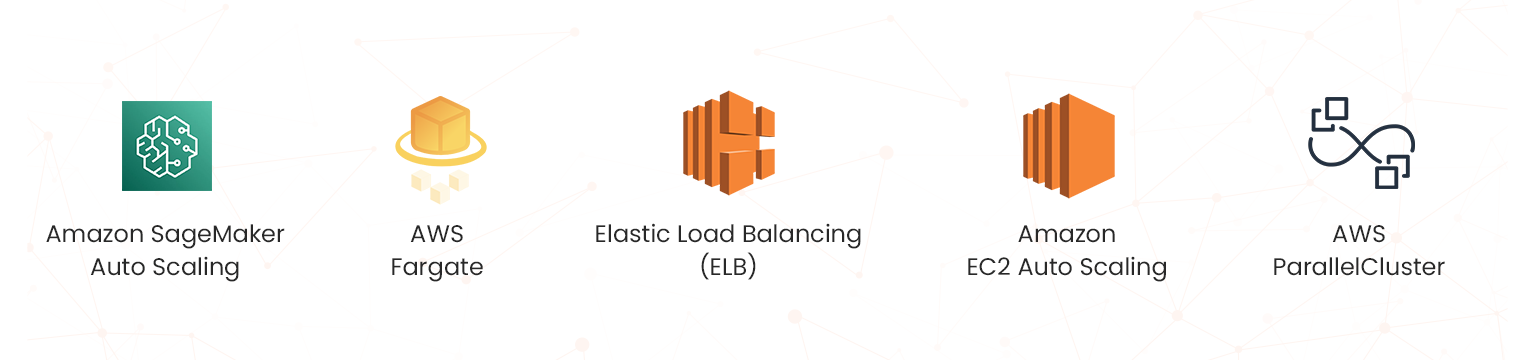

AI Model Scaling on AWS

With multiple AWS auto-scaling options provided below, AI models efficiently ensure optimal resource utilization.

- Amazon SageMaker Auto Scaling is a way to auto-adjust model endpoints based on demand.

- AWS Fargate is a serverless computer for AI model containerization. It eliminates the server management needs.

- Elastic Load Balancing (ELB) improves reliability and reduces latency through proper traffic distribution across multiple AI inference endpoints.

- Amazon EC2 Auto Scaling automatically adjusts compute instances based on the differing workloads that come into the applications.

- AWS ParallelCluster helps in inference for high-performance computing (HPC) workloads and optimizing AI model training.

What are the best practices for AI model deployment?

To achieve optimum performance, scalability, and cost-efficiency, a well-planned strategic method combined with the below best practices is important while deploying an AI model.

- Optimize Model Size

Reducing model complexity is necessary to boost inference speed, lower latency, and minimize resource utilization. To achieve this, follow the below:

* Use quantization to reduce the precision of model weights while maintaining accuracy.

* Apply pruning to eliminate redundant model parameters, improving efficiency.

* Enforce model distillation to transfer data from a large, complex model to a smaller, quicker model.

- Monitor Model Performance

As data patterns evolve, AI models may experience performance drift over time, and monitoring continuously these data can help detect and address the problems at the early stage. Here are some of the methods that help in data monitoring:

* Amazon CloudWatch provides real-time monitoring of model performance, which includes latency, error rate, and throughput.

* Amazon SageMaker Model Monitor automatically spots not just data drift but also various anomalies.

* AWS Lambda & EventBridge can trigger alerts based on performance metrics, enabling proactive interference.

- Ensure Security

Not just data but also AI models must be protected against unauthorized access and vulnerability:

* AWS Identity and Access Management (IAM) helps to enforce access control to the lowest layer possible.

* Encrypting data at rest and in transit using AWS Key Management Service (KMS) and TLS protocols also ensures security.

* VPC implementation and using security groups to isolate deployments and restrict external access.

- Use Cost-Effective Resources

Optimizing infrastructure selection helps balance performance and cost:

* Amazon EC2 Spot Instances usage is the cost-efficient AI model inference.

* Enforcing AWS SageMaker Multi-Model Endpoints to host multiple models on a single endpoint also reduces operational costs.

* Enabling auto-scaling helps reduce costs by dynamically adjusting resources based on demand.

- Implement Model Versioning

Tracking model versions ensures reproducibility, compliance, and rollback capabilities:

* Amazon SageMaker Model Registry helps manage different versions of deployed models.

* AWS CodeCommit & Git-based repositories facilitate collaborative model development and version tracking.

* Use model metadata tagging to document hyperparameters, datasets, and experiment results.

- Enable Logging and Debugging

Comprehensive logging and debugging improve transparency and troubleshooting:

* AWS X-Ray traces requests through AI applications to identify bottlenecks.

* Amazon CloudWatch Logs & AWS CloudTrail provide detailed logs for debugging and auditing.

* Enable model explainability tools, such as SHAP or Amazon SageMaker Clarify, to understand model predictions and biases.

- Automate Deployments

Automation minimizes human error and accelerates updates:

* AWS CodePipeline and AWS CodeDeploy usage for CI/CD integration ensures smooth model upgrades and patches.

* Containerize models with Amazon Elastic Kubernetes Service (EKS) or AWS Lambda, enabling flexible and scalable deployments.

* Automating the rollback methods helps to revert to previous model versions if there are failures.

Long-term success in AI-driven applications is ensured by implementing the above practices in the organization as efficiently and securely as possible.

Conclusion

Through the concepts explained in this blog, you can leverage SageMaker, AWS Lambda, and Kubernetes. Cloud-based hosting allows businesses to efficiently deploy AI solutions while maintaining high performance and cost efficiency. Through proper scaling, inference optimization, and CI/CD integration, AI models can be deployed effortlessly in several environments. Concentrating on these deployment strategies to ensure your certification and AI career success with hands-on labs and sandbox. Talk to our experts in case of queries!

- What are the Key Model Deployment Strategies for AIF-C01? - February 26, 2025

- How I Passed the AWS Solutions Architect Associate exam ( SA-C03) - September 9, 2024