This blog post dives into Apache Spark on Databricks, a powerful combination that helps you tame the big data beast.

We’ll explore what Apache Spark is, its core functionalities, and how Databricks provides a user-friendly platform to harness its potential. We’ll guide you through the benefits of using Databricks for Spark, from simplified cluster management to collaborative workflows.

A clear understanding of how Databricks and Apache Spark handle your data and extract valuable insights from it will also help you prepare for the Databricks Certified Data Engineer Associate exam.

Let’s get started!

Overview of Apache Spark in Databricks

Apache Spark is a powerful open-source unified analytics engine for large-scale data processing. Unlike traditional data processing methods that struggle with the volume, velocity, and variety of big data, Spark offers a faster and more versatile solution.

Apache Spark forms the core of the Databricks platform and powers its compute clusters and SQL warehouses.

Apache Spark: The Big Data Engine

Spark is an open-source, unified analytics engine built for speed and scalability. It’s not a single tool, but rather a collection of components working together:

- Spark Core: The central nervous system. It schedules tasks, manages memory, and handles fault recovery.

- Spark SQL: Lets you interact with structured data using familiar SQL queries, simplifying data exploration.

- Spark Streaming: Processes data streams in near real time, allowing you to analyze information as it arrives. Newer applications typically use its successor, Structured Streaming.

- MLlib: A library packed with machine learning algorithms for building and deploying models on big data.

- GraphX: Analyzes graph data, useful for network analysis and social network exploration.

- SparkR: Integrates R programming with Spark’s capabilities, letting R users leverage Spark’s power.

Databricks: The Spark Powerhouse

While Spark is the engine, Databricks provides the ideal platform to run it. Databricks is a cloud-based platform specifically optimized for Apache Spark. Here’s what makes it so powerful:

- Simplified Spark Deployment: Forget complex cluster management. Databricks handles setting up and scaling Spark clusters with a few clicks.

- Interactive Workflows: Databricks notebooks provide an interactive environment for data exploration, visualization, and development using Spark functionalities.

- Collaboration Made Easy: Databricks fosters teamwork by allowing seamless sharing of notebooks, clusters, and results amongst colleagues.

- Integrated Tools: Databricks offers a rich ecosystem of data management, warehousing, and machine learning tools that seamlessly integrate with Spark.

How does Apache Spark operate within the Databricks platform?

One of the primary ways Apache Spark operates within Databricks is through its support for multiple programming languages, such as Scala, Python, R, and SQL. This language flexibility allows users to leverage their preferred programming language for data processing and analysis tasks.

While the core technical aspects are important, understanding the bigger picture is crucial. Let’s walk through how Apache Spark operates within the Databricks platform and how Databricks turns a technical process into a user-friendly experience.

For example, suppose you have a massive, complex dataset waiting to be analyzed.

Here’s how Spark and Databricks work together to unlock its secrets:

Data Onboarding: The journey begins with data. You can bring your data into Databricks from various sources: cloud storage platforms like S3 or Azure Blob Storage, relational databases, or even streaming data feeds. Databricks provides connectors and tools to simplify this process.

Spark Cluster: Ready, Set, Go! When you run a Spark job within Databricks, the platform takes care of the heavy lifting behind the scenes. Databricks provisions a Spark cluster with the resources (CPUs, memory) you specify. If autoscaling is enabled, it also adds or removes worker nodes within a configured range as the workload changes. Think of this cluster as a team of high-performance computers working together to tackle your data challenge.
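In practice, you describe the cluster you want and Databricks provisions it. The sketch below builds a request body in the shape used by the Databricks Clusters API (`clusters/create`).

The field names `cluster_name`, `spark_version`, `node_type_id`, `autoscale`, and `autotermination_minutes` come from that API. The values, however, are placeholders. Valid runtime versions and node types depend on your cloud and workspace, so check them before use.

```python
import json

# Placeholder values: valid spark_version / node_type_id strings depend on your workspace and cloud.
cluster_spec = {
    "cluster_name": "analytics-demo",     # hypothetical cluster name
    "spark_version": "14.3.x-scala2.12",  # example Databricks Runtime version string
    "node_type_id": "i3.xlarge",          # example AWS instance type
    "autoscale": {
        "min_workers": 2,  # never scale below 2 workers
        "max_workers": 8,  # never scale above 8 workers
    },
    "autotermination_minutes": 30,  # shut the cluster down after 30 idle minutes
}

payload = json.dumps(cluster_spec, indent=2)
print(payload)
```

You could send this body to the workspace’s `clusters/create` endpoint using an authenticated HTTP request, the Databricks CLI, or an SDK. The cluster creation UI exposes the same settings as form fields.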

Spark Job Submission: Once the cluster is up and running, your Spark code (written in Scala, Python, R, or Java) is submitted to the cluster. This code outlines the specific tasks you want Spark to perform on your data, like filtering, aggregating, or building a machine-learning model.

Spark, the Master Conductor: Spark acts as the conductor of this data orchestra. The Spark driver takes your code and breaks each job into stages made up of smaller, more manageable tasks. It then distributes these tasks to executors running on the worker nodes of the cluster. This parallel processing approach allows Spark to handle large datasets efficiently, significantly reducing processing time compared to traditional single-computer methods.

Parallel Processing Power: Each node in the cluster becomes a worker bee, diligently executing its assigned tasks on its share of the data. Spark keeps data in memory whenever possible. This speeds up processing compared to traditional approaches that write intermediate results to disk. Imagine multiple computers working simultaneously on different parts of your data, significantly speeding up the analysis process.

Results and Cleanup: Once the processing is complete, Spark gathers the results from each node and combines them to form the final output. This output could be a summarized dataset, a machine learning model, or any other insights you designed your Spark job to generate. Databricks then returns these results to your workspace, where you can access and analyze them.
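The split, process-in-parallel, and combine steps described above can be illustrated without Spark at all. This is a toy sketch that uses a thread pool as a stand-in for executors on worker nodes. It is a teaching model, not how Spark is implemented.

The sketch works in three steps:
- It splits a list of hypothetical records into partitions.
- It computes per-region partial sums for each partition in parallel.
- It merges the partial results, much as Spark combines per-partition results for an aggregation.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical records: (region, amount)
records = [("east", 100), ("west", 250), ("east", 50), ("west", 75), ("north", 20), ("east", 5)]


def split_into_partitions(data, num_partitions):
    """Round-robin split of the dataset into num_partitions chunks."""
    return [data[i::num_partitions] for i in range(num_partitions)]


def partial_sum(partition):
    """One 'task': aggregate a single partition locally."""
    totals = Counter()
    for region, amount in partition:
        totals[region] += amount
    return totals


def combine(partials):
    """Merge the per-partition results into the final answer."""
    result = Counter()
    for partial in partials:
        result.update(partial)  # Counter.update adds counts together
    return dict(result)


partitions = split_into_partitions(records, num_partitions=3)

# Each worker thread processes one partition concurrently.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(partial_sum, partitions))

totals = combine(partials)
print(totals)
```

Threads are used here only to keep the example simple. Python threads do not give true CPU parallelism for this kind of work. By contrast, Spark executors run as separate JVM processes, typically spread across many machines.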

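Automatic Cluster Termination: Finally, clusters don’t have to keep running after the work is done. A job cluster created for a scheduled job terminates automatically when the job run completes. An all-purpose cluster can be configured to shut down after a period of inactivity. This ensures efficient resource utilization and avoids unnecessary costs. Think of it as the team of computers disbanding after completing their task, freeing up resources for other jobs.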
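For scheduled jobs, automatic termination applies to job clusters. The sketch below builds a job definition in the shape of the Databricks Jobs API (version 2.1).

Because the task declares a `new_cluster`, Databricks creates a dedicated cluster for each run and terminates it when the run finishes. The job name, notebook path, runtime version, and node type are all placeholders.

```python
import json

job_spec = {
    "name": "nightly-sales-aggregation",  # hypothetical job name
    "tasks": [
        {
            "task_key": "aggregate_sales",
            "notebook_task": {
                # Placeholder path to a notebook containing the Spark code
                "notebook_path": "/Workspace/Users/someone@example.com/aggregate_sales"
            },
            # A new_cluster is created for this run and terminated when the run ends.
            "new_cluster": {
                "spark_version": "14.3.x-scala2.12",  # example runtime version
                "node_type_id": "i3.xlarge",          # example AWS instance type
                "num_workers": 4,
            },
        }
    ],
}

print(json.dumps(job_spec, indent=2))
```

Pointing the task at an existing all-purpose cluster instead would keep that cluster’s lifecycle separate from the job. That cluster would then rely on its own auto-termination setting.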

Databricks: Simplifying the Spark Experience

What truly sets Databricks apart is its user-friendly approach to Spark. Here’s how Databricks streamlines the process:

- No Cluster Management Headaches: Databricks eliminates the need to manually configure and manage Spark clusters. You can focus on your data analysis tasks and leave the infrastructure management to Databricks.

- Interactive Workflows: Databricks notebooks provide an interactive environment for working with Spark. You can write your Spark code, visualize data with ease, and collaborate with colleagues, all within a single interface.

- Integrated Ecosystem: Databricks offers a rich set of tools that integrate with Spark. You can query and manage your data with Databricks SQL, build reliable data pipelines on Delta Lake, and deploy machine learning models using Databricks ML, all within the same platform. This eliminates the need to switch between different tools and simplifies the entire data science workflow.

Is it possible to utilize Databricks without incorporating Apache Spark?

Databricks supports a diverse range of workloads and bundles open-source libraries in its Databricks Runtime. Databricks SQL uses Apache Spark behind the scenes, but end users write standard SQL to create and query database objects.

Users can also schedule workloads with Databricks Workflows, which run them on compute resources that Databricks provisions and manages.

You can definitely use Databricks without writing Apache Spark code yourself!

- Databricks SQL: Databricks SQL runs on top of Spark but lets users query structured data using familiar SQL syntax. This eliminates the need to write Spark code for basic data analysis.

- Open-Source Libraries: Databricks Runtime includes various open-source libraries beyond Spark. Data scientists can use libraries like TensorFlow and scikit-learn for machine learning tasks without relying solely on Spark MLlib.

- Data Management and Workflows: Databricks offers tools for data management (e.g., Delta Lake) and workflow scheduling. These features handle data organization and task automation without requiring you to write Spark code, even though Spark often does the processing underneath.

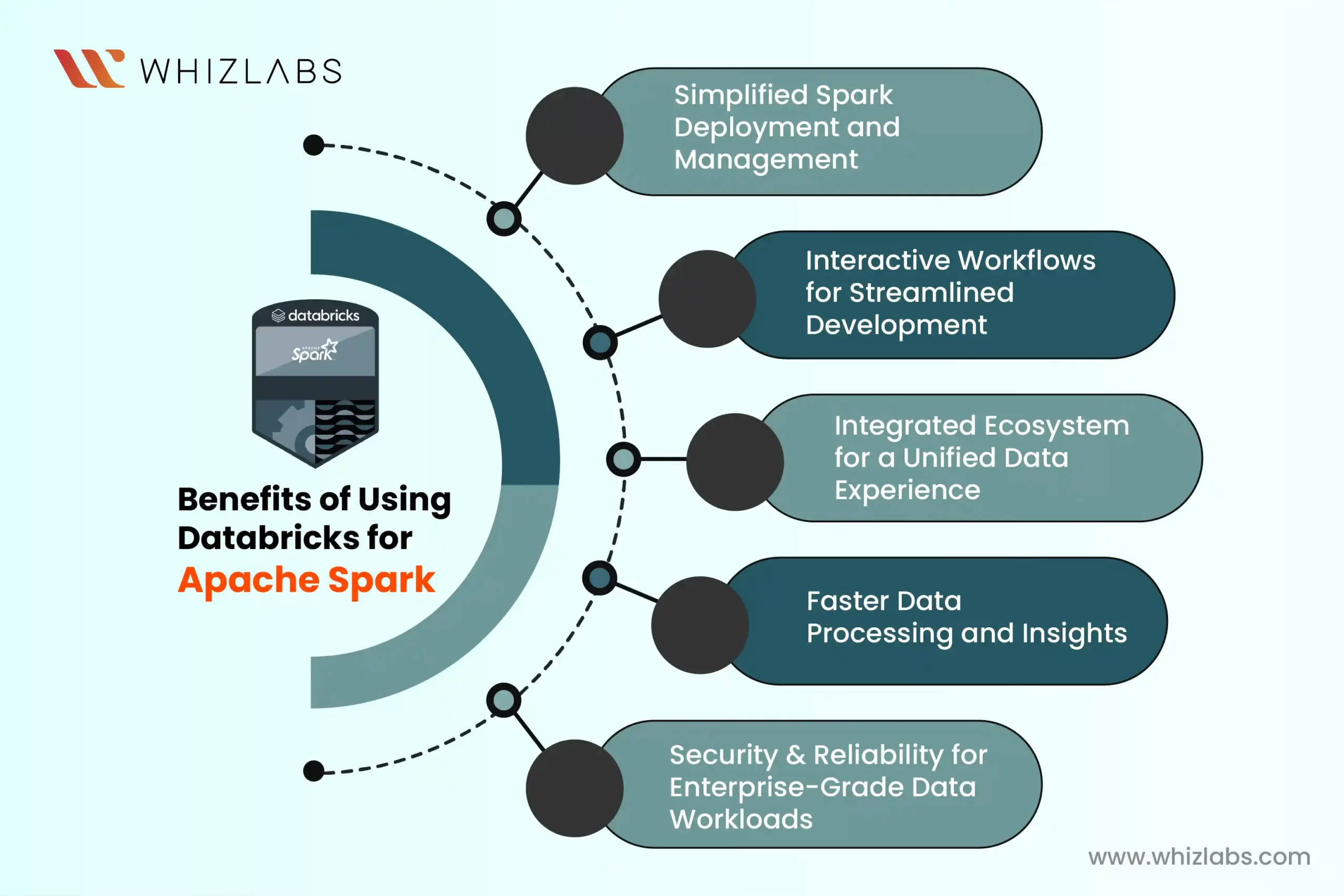

Benefits of Using Databricks for Apache Spark

The benefits of using Databricks for Apache Spark span data processing, analytics, and machine learning. Here are some key benefits:

Simplified Spark Deployment and Management

- No More Cluster Headaches: Forget the complexities of setting up and managing Spark clusters yourself. Databricks eliminates this burden by automatically provisioning and scaling Spark clusters based on your requirements. With just a few clicks, you can have a Spark cluster ready to tackle your data challenges. This allows you to focus on your data analysis tasks and leave the infrastructure management to Databricks.

- Elasticity at Your Fingertips: Databricks offers elastic compute clusters. You can easily scale your clusters up or down based on the size and complexity of your Spark jobs. This helps ensure you pay only for the resources you actually use, maximizing cost-efficiency.

Interactive Workflows for Streamlined Development

- Databricks Notebooks: Your Spark Playground: Databricks notebooks provide an interactive environment specifically designed for working with Spark. You can write Spark code, visualize data with libraries like Plotly or Matplotlib, and debug your code, all within a single interface. This simplifies the development process and allows for rapid iteration as you analyze your data.

- Collaboration Made Easy: Databricks fosters teamwork by allowing seamless sharing of notebooks, clusters, and results amongst colleagues. Data scientists and analysts can collaborate effectively, share insights, and iterate on Spark models efficiently. This collaborative environment accelerates the data analysis process and promotes knowledge sharing within your team.

Integrated Ecosystem for a Unified Data Experience

- Beyond Spark: A Richer Data Toolkit: Databricks offers a comprehensive suite of tools that integrate with Spark. You can query and manage your data with Databricks SQL, build reliable data pipelines on Delta Lake, and deploy machine learning models using Databricks ML, all within the same platform. This eliminates the need to switch between different tools and data platforms, streamlining your workflow from data ingestion to analysis and deployment.

- Seamless Data Ingestion and Management: Databricks simplifies data ingestion from various sources, including cloud storage platforms, databases, and streaming data feeds. You can leverage built-in connectors and tools to easily bring your data into the platform for analysis with Spark.

Additionally, Delta Lake provides a reliable and scalable storage layer within Databricks. Its ACID transactions help ensure data integrity and make data management more efficient.

Faster Data Processing and Insights

- The Power of In-Memory Processing: Spark’s in-memory processing can significantly accelerate data manipulation compared to traditional disk-based approaches. Intermediate results can stay in memory instead of being written to and re-read from disk between steps. This is especially valuable for iterative and multi-step workloads, and it translates into faster insights and quicker data-driven decision making.

- Parallel Processing for Scalability: Spark splits each job into tasks and distributes them across multiple nodes in the cluster, enabling parallel processing. This approach allows you to handle massive datasets efficiently and reduces processing time significantly. Databricks, with its automatic cluster management, helps keep resources well utilized for your Spark jobs, further accelerating data processing.

Security and Reliability for Enterprise-Grade Data Workloads

- Secure Data Management: Databricks prioritizes data security. It offers robust access controls, encryption capabilities, and audit trails to ensure the security and privacy of your sensitive data. This is crucial for enterprises working with confidential information.

- Reliable Infrastructure for Business Continuity: Databricks runs on a highly reliable cloud infrastructure, offering high availability and disaster recovery capabilities. This ensures minimal downtime and protects your data analysis workflows from disruptions.

By simplifying cluster management, providing an interactive development environment, and offering a rich ecosystem of integrated tools, Databricks empowers data professionals to extract valuable insights from big data efficiently and collaboratively.

The Future of Databricks Apache Spark

As data volumes continue to grow, the capabilities of Databricks and Spark will undoubtedly evolve. Here are some exciting trends to watch for:

- Streamlined Machine Learning: Building and deploying machine learning models will become easier with tools like Databricks ML.

- Real-time Analytics: Spark Streaming’s capabilities for real-time data processing will continue to improve, opening doors for more real-time applications.

- Cloud-Native Advancements: Databricks will likely leverage advancements in cloud computing to optimize Spark performance and scalability within the cloud environment.

By harnessing the power of Databricks and Apache Spark, organizations across industries can unlock the hidden potential within their data, gain valuable insights, and make data-driven decisions that drive success in the ever-evolving big data landscape.

Check out our Databricks Certified Data Engineer Associate Study Guide to excel in your certification journey!

Conclusion

I hope this blog post has provided a comprehensive introduction to Apache Spark in Databricks. This powerful duo offers a compelling solution for organizations of all sizes to navigate the ever-growing realm of big data.

By leveraging Spark’s processing muscle and Databricks’ user-friendly platform, you can unlock valuable insights from your data, optimize operations, and make data-driven decisions that drive success.

Whether you’re a seasoned data scientist or just starting your big data exploration, Databricks and Spark offer the tools and capabilities to transform your data into a strategic asset.

- An Introduction to Databricks Apache Spark - May 24, 2024
