Google BigQuery, a serverless data warehouse solution, is designed to be both cost-effective and highly scalable, empowering businesses to swiftly and efficiently analyze extensive datasets.

Furthermore, it incorporates a set of SQL functions that prove to be invaluable in simplifying the handling of typical business scenarios.

In this article, you will be guided through BigQuery SQL functions and gain insights on how to create basic SQL functions in BigQuery in real-time settings.

Let’s dig in!

What is BigQuery?

Google’s enterprise data warehouse, BigQuery, was created to democratize large-scale data analysis. This platform is specifically designed to manage extensive datasets, handling tasks such as analyzing log data from numerous retail systems or processing IoT data generated by millions of vehicle sensors globally.

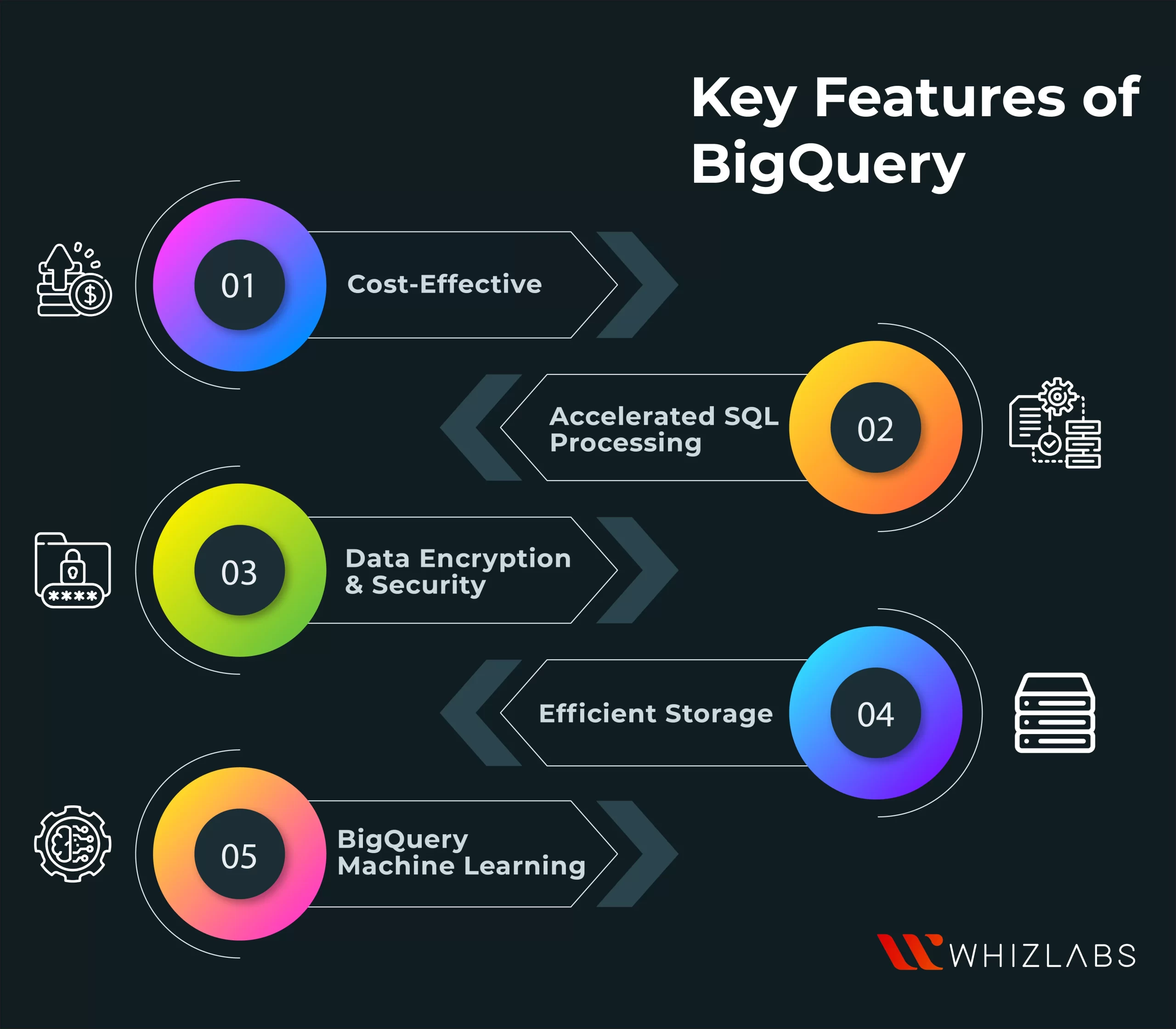

Key Features of BigQuery

BigQuery incorporates contemporary features that not only boost productivity but also prioritize security and integrity.

- Cost-Effective: BigQuery presents a reasonable pricing model, particularly for beginners who can utilize certain operations within the free tier. Charges apply for specific operations and the use of BigQuery storage APIs, with pricing based on two components: Analysis and Storage.

- Accelerated SQL Processing: BigQuery supports ANSI SQL techniques and employs a second sub-query to enhance concurrency, resulting in higher throughput. The utilization of ANSI SQL also contributes to faster response times.

- Data Encryption & Security: Given that BigQuery operates in the cloud, there is inherent concern about data and personal information security. To address this, the platform encrypts data and integrates with Cloud Identity and Access Management for enhanced security.

- Efficient Storage: BigQuery excels in data storage efficiency. Once data is loaded into BigQuery, the platform automatically processes it, streamlining the storage process.

- BigQuery Machine Learning: The concept of BigQuery Machine Learning empowers data scientists and analysts to undertake various tasks, including model building, training, and testing. Using SQL query syntax, users can directly retrieve results from BigQuery.

Also Read : Google BigQuery and Its Role in Big Data Analytics

What are basic SQL functions in BigQuery?

Here are some basic SQL Functions that exist in BigQuery:

1) Aggregation Functions

Aggregation functions are frequently utilized in SQL, combining all rows within a group into a singular value. Here are some commonly used aggregation functions:

| Aggregate Functions | Description |

| COUNT() | Represent a number of rows in a table or view. |

| SUM() | Calculate the sum of all values. |

| AVG() | Calculate the average of a set of values. |

| MIN() | Represent the minimum value. |

| MAX() | Represent the maximum value. |

2) Window (Analytical) Functions

Window functions in SQL evaluate values for a specific window or set of rows within a table, as opposed to the entire table. The OVER() clause is used to define the window for these functions. Here are some commonly used window functions:

| Window Function | Description |

| RANK() | Assigns a unique rank to each record based on a specified set of criteria. |

| ROW_NUMBER() | Assigns a distinct row number to each record. |

| NTILE() | Determines the percentile to which a specific row belongs. |

| LAG() | Retrieves data from the preceding row within the same result set without requiring SQL joins. |

| LEAD() | Retrieves data from the next row within the same result set without necessitating SQL joins. |

3) String Functions

String functions in SQL are valuable for manipulating and handling text fields. They are particularly useful when you need to modify text, format it, or concatenate values from different columns.

Here are some commonly used string functions:

| String Function | Description |

| CONCAT() | Joins two words or strings together. |

| CONCAT_WS() | Joins two words or strings together using a specified symbol as a concatenating symbol. |

| FORMAT() | Displays a number in a specified format. |

| RTRIM() | Separates the given substring from the rest of the string. |

| SUBSTR() | Extracts a substring from a string at a certain position. |

| REVERSE() | Reverses a string. |

4) Date Functions

When working with dates in your dataset, date functions become valuable for various operations. Here are some commonly used date functions:

| Date Function | Description |

| DATE() | Creates a DATE field from integer values representing the year, month, and day. |

| PARSE_DATE() | Creates a DATE object from a string representation of a date. |

| DATE_DIFF() | Counts the number of days, weeks, months, or years that have elapsed between two dates. |

| CURRENT_DATE() | Returns the current date in the specified or default timezone. |

| FORMAT_DATE() | Formats the DATE field to the given format string. |

How to access the Whizlabs hands-on labs for doing BigQuery Operations?

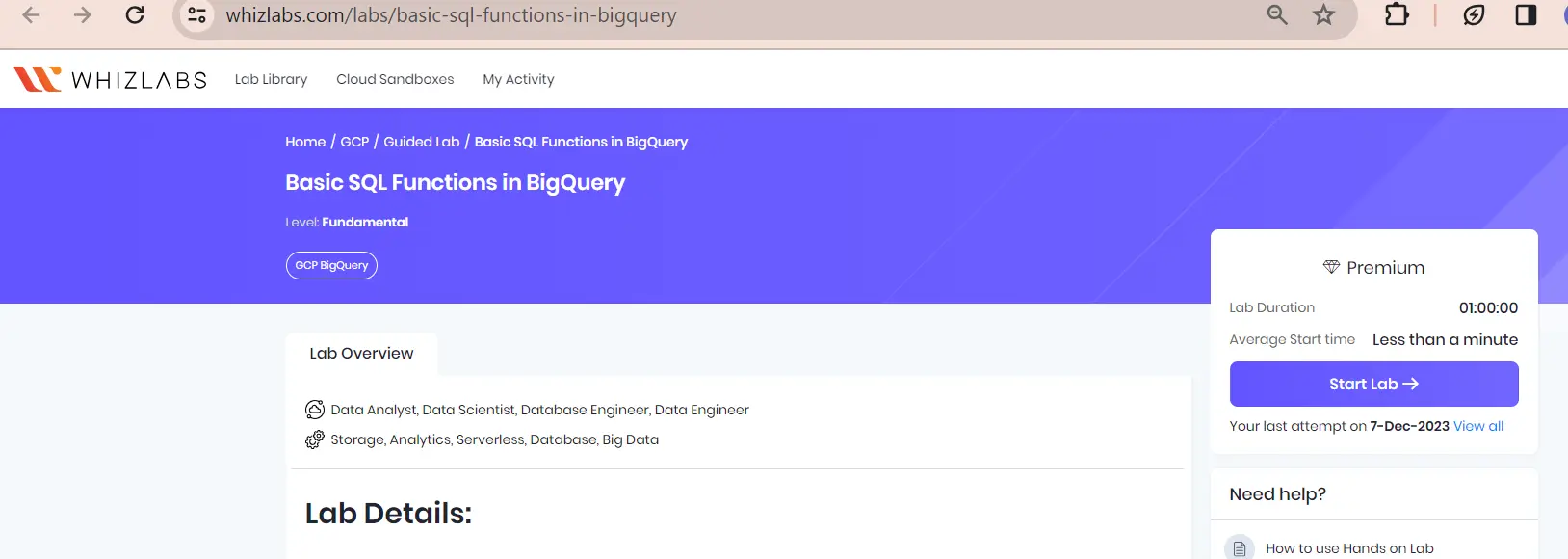

In the Whizlabs hands-on labs, click on Basic SQL Functions in BigQuery labs. Once you find the desired labs page, follow the steps as outlined in the lab task.

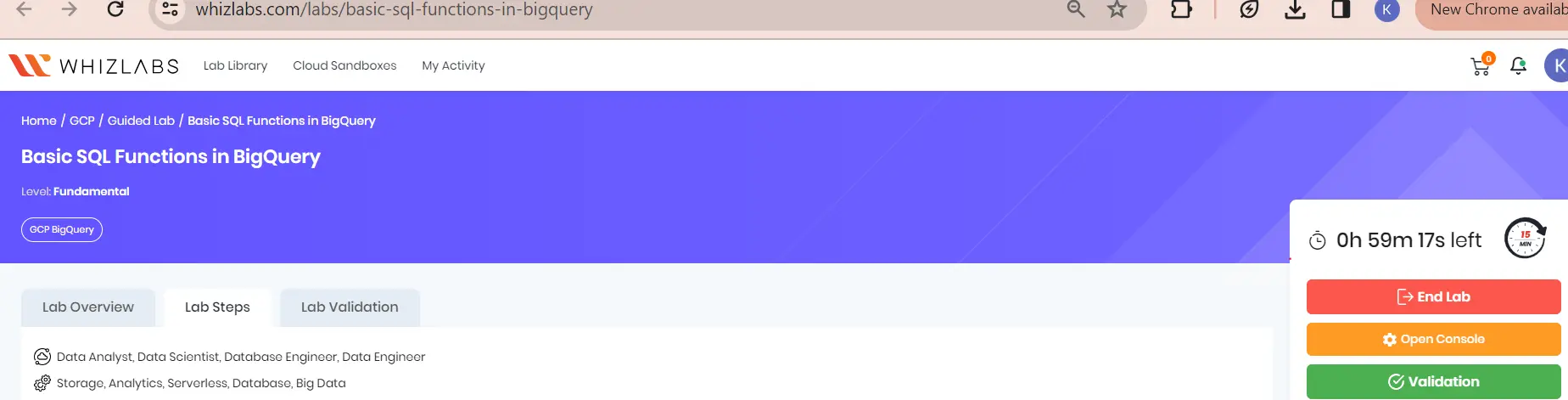

Start the Lab Environment

Click the “Start Lab” button situated in the right sidebar of the labs page. This action will set up a dedicated GCP (Google Cloud Platform) environment, configuring all the necessary resources and permissions specific to the lab’s requirements.

Access the Google Sign-In Page

Once the lab setup is finalized, the “Open Console” button will become active. Proceed to click the “Open Console” button, which will direct you to the Google Sign-In page. It’s advisable to use the Incognito or private browsing mode in your web browser to ensure a seamless sign-in process and avoid potential issues.

Retrieve and Utilize Lab Credentials

-

- Return to the Lab Document or instructional guide within Whizlabs.

- You’ll find a section named “Lab Credentials.”

- Within this section, there will be a highlighted Email and Password designated for console access.

- Use the provided “Copy” option next to the credentials to conveniently copy them.

- Head back to the Google Sign-In page and paste (or input) the copied Email and Password to securely sign in to the GCP console.

Creating a BigQuery Dataset

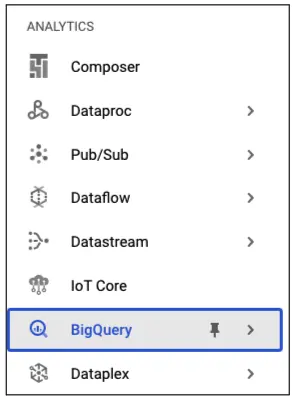

To create a BigQuery dataset, start by clicking on the hamburger icon in the top left corner, then navigate to BigQuery under the Analytics section.

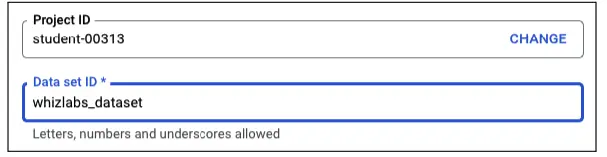

Now, select the kebab icon on the Project ID and click Create Dataset.

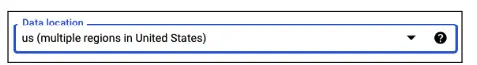

Set the Dataset ID as “whizlabs_dataset” and choose the location as “us(multiple regions in the United States).

After creating the dataset, download a file for future steps.

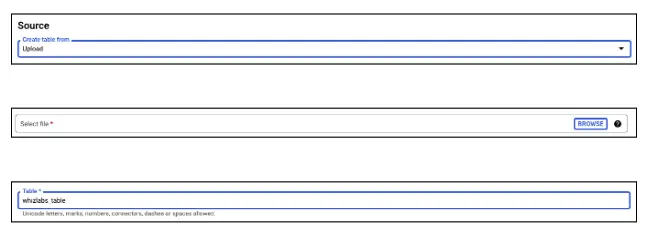

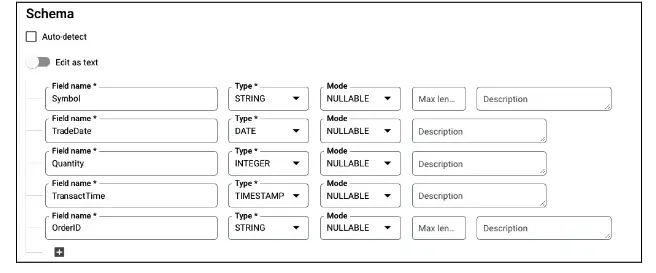

To create a table within the dataset, click on the kebab icon for the dataset, then select “Create Table.” Choose the upload option under Source, browse to upload the downloaded file, and name the table “whizlabs_table.”

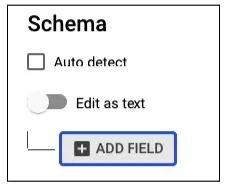

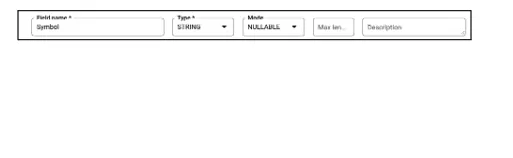

Under Schema Click on Add Field.

Enter the details: Field name as Symbol, Type as String, and left other options as default.

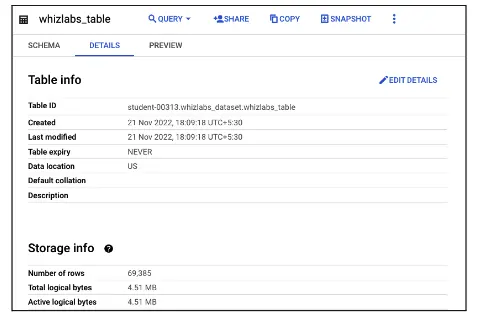

After creating the table successfully, click on the table to learn more details about the table.

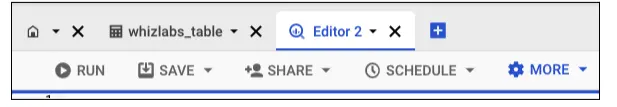

Querying the dataset

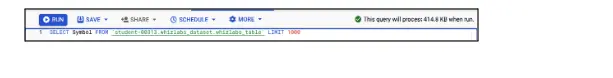

For querying the dataset, go to the Editor section, enter the queries, and click on Run.

BigQuery has advanced capabilities such as an inbuilt validator to validate the syntax and errors in your query.

Another great function of BigQuery is that it tells about the number of Bytes it has to read to derive the output. Note: In BigQuery you are billed based on bytes read and storage.

Let’s run our first query using the SELECT, FROM, WHERE, and LIMIT clauses, copy and paste the below query and click on Run. Replace <Project-ID> with the Project ID mentioned in the login panel, <Dataset-ID> with the Dataset that you created above, and <Table-ID> with the name of the table that you noted before.

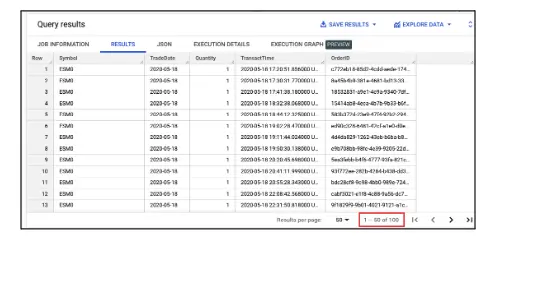

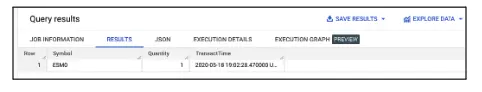

You will receive output like the one below. Note: It returned only 100 rows as defined in the LIMIT.

Run the Query on specific Columns using the SELECT, FROM, and WHERE clauses, copy and paste the below query and click on Run. Replace <Project-ID> with the Project ID mentioned in the login panel, <Dataset-ID> with the Dataset that you created above, and <Table-ID> with the name of the table that you noted before.

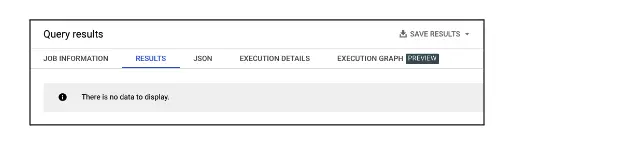

After executing the query, you’ll observe results where the OrderID could be either 123 or 3b93b000-9214-4159-b6f6-5dba5921e80a. Since there is no OrderID with the value 123, a row with the OrderID as the other one is returned.

Now, let’s use the AND operator instead of OR. Copy and paste the following query, replacing <Project-ID> with the Project ID from the login panel, <Dataset-ID> with the created Dataset, and <Table-ID> with the noted table name.

Upon running this query, you will receive an output indicating that no rows match both conditions.

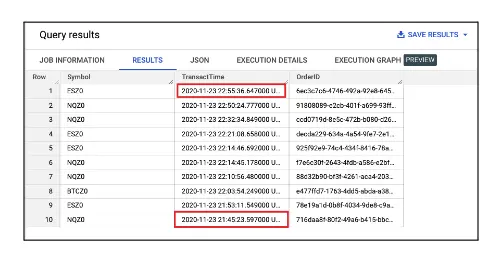

To sort the output, use the ORDER BY keyword in the following query. Copy and paste it, replacing <Project-ID>, <Dataset-ID>, and <Table-ID> with the appropriate values.

The result will display sorted data based on the specified column, in this case, TransactTime, in descending order.

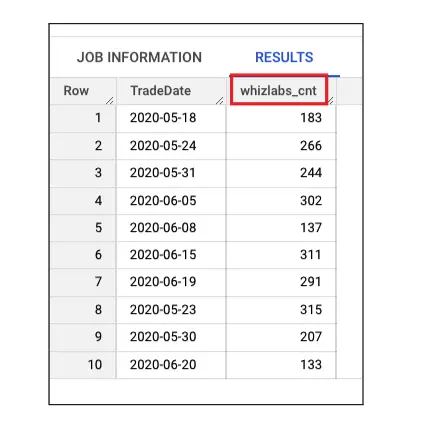

Execute the provided query after replacing the placeholders <Project-ID> with the relevant Project ID from the login panel, <Dataset-ID> with the Dataset created earlier, and <Table-ID> with the noted name of the table. The query groups entries based on similar TradeDate values. It’s important to note that the name “f0_” is assigned by BigQuery unless an alias is specified in our query.

To assign a custom name to our output column using the AS keyword, execute the provided query after replacing the placeholders: <Project-ID> with the relevant Project ID from the login panel, <Dataset-ID> with the previously created Dataset, and <Table-ID> with the noted name of the table. Then, click on Run.

The output you receive will look similar to the example below. It’s worth noting that the column name is now more readable.

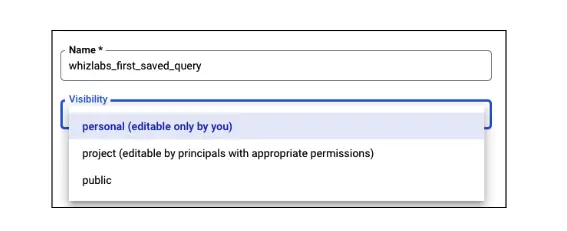

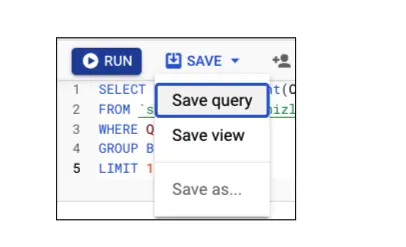

BigQuery is equipped with advanced features, including the ability to save queries. Simply click on the Save icon located in the top panel and select “Save query.”

Enter any name for the query and set the visibility to Private for now.

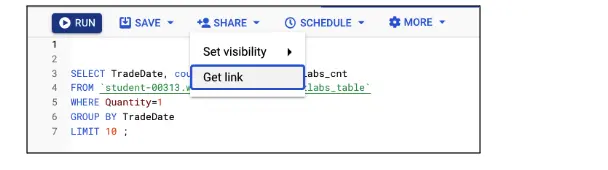

After saving it, you can share it using a link. Click on the Share icon from the top panel and select Get Link.

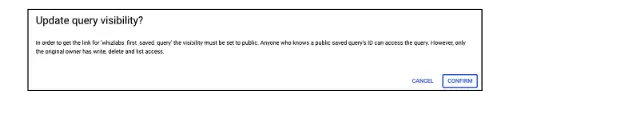

A Pop-up will show up, Click on Confirm and you will get a link in return and you can share publicly.

Execute the provided query after replacing the placeholders: <Project-ID> with the relevant Project ID from the login panel, <Dataset-ID> with the previously created Dataset, and <Table-ID> with the noted name of the table. After running the query, the output will indicate that no count of OrderID can be more than 1.

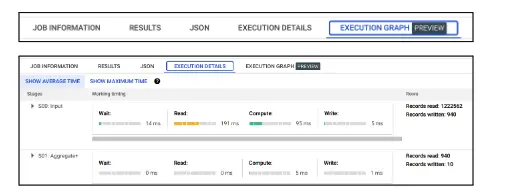

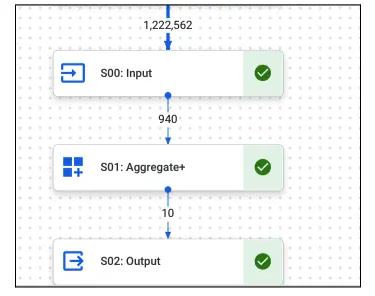

You can examine how the process unfolded in the backend by clicking on “Execution Details”

The Execution Details are shown in a diagram under the Execution Graph section.

Limitations of using the BigQuery functions

Let’s examine some challenges you may encounter when working with User Defined Functions (UDFs):

Limitations with Document-Oriented Objects

UDFs that require Document-Oriented objects, such as Windows, Node, and Document User Defined Functions, may face limitations as these objects may not be supported. This can impact the functionality of certain UDFs that rely on these specific object types.

Dependency on Native Code in JavaScript Functions

JavaScript Functions within UDFs that depend on native code may encounter failures. Dependencies on specific native code components can lead to compatibility issues, affecting the execution and reliability of the UDFs.

Case Sensitivity Constraints

UDFs operate in a case-sensitive manner. This means that they distinguish between uppercase and lowercase letters. While this can be advantageous in some scenarios, it also imposes limitations on applicability rates and the usage of quotes, requiring careful consideration to avoid potential issues.

Understanding these challenges is crucial for effectively leveraging User Defined Functions and ensuring their seamless integration into your applications.

FAQs

Is BigQuery and SQL the same?

No, BigQuery and SQL are not the same. They have some differences: Google BigQuery allocates computing resources automatically when you need them. It auto-scales up and down based on the data loaded. On the other hand, SQL Server doesn’t have an auto-scalable option, and hence it needs human intervention to scale up and down based upon the data loads.

Difference between Google Bigquery and MySQL.

| Aspect | Google BigQuery | MySQL |

| Scalability and Performance | Designed for massive datasets, scalable to petabytes | More suitable for smaller to medium-sized workloads, may face performance challenges with extremely large datasets. |

| Data Organization | Columnar storage model for efficient analysis | Row-based storage model for fast read and write access, may limit performance for complex analytical queries. |

| Query Language | BigQuery SQL with extensions for complex querying | Traditional SQL with MySQL-specific extensions |

| Data Processing Paradigm | Serverless computing, automatic resource management | Requires manual management of resources and optimization |

| Advanced Analytical Capabilities | Built-in machine learning, geographic functions, integration with GCP services | Capable of handling complex queries; may require additional plugins for advanced analytics. |

| Cost Structure | Pay-as-you-go based on data processed and stored | Typically licensed based on subscription or usage, including server capacity and features utilized. |

What type of database is Google BigQuery?

Google BigQuery is categorized as a business intelligence and online analytical processing (OLAP) system. It is a hybrid solution that incorporates SQL dialects and is built upon Google’s internal column-based data processing technology known as “Dremel.”

Know More : What is BigQuery?

Conclusion

Hope this article provides an introduction to Google BigQuery, offering insights into its features. It comprehensively covers various aspects related to BigQuery Functions, including their types, components, and limitations.

It also includes practical examples to demonstrate the usage of User-Defined BigQuery Functions, providing you with a real-time experience.

To make your hands dirty by exploring any other Google Cloud platforms, try our Google hands-on labs and Google Sandboxes.

- Basic SQL Functions in BigQuery | Guided Labs - December 29, 2023