In today’s fast-paced digital landscape, organizations need a robust and scalable platform to store, manage, and analyze their ever-growing data. Snowflake emerges as a shining star, offering a cloud-based data warehousing solution that transcends traditional limitations.

But how do you harness the true potential of Snowflake and build a data warehouse that can handle the demands of modern analytics? It is quite possible by following some best practices to build snowflake data warehouses.

From architecture considerations to performance optimization techniques, we will equip you with the knowledge and insights needed to construct a Snowflake data warehouse that stands tall amidst the ever-changing data landscape.

In this blog post, we will embark on a journey through the best practices for designing and building Snowflake data warehouses.

So, let’s embrace the snowflake revolution!

Snowflake Data Warehouse – An Overview

A relational database called a data warehouse (DW) is built for analytical work instead of operational use. It gathers and combines data from a single or a variety of sources so that it may be examined and used to generate business insights. For all or some of the data sets gathered by an organization’s operational systems, it acts as a federated repository.

Data warehousing serves two important purposes. The information and data required by the business, which can originate from a variety of sources, are initially integrated into it as a historical repository. Second, it acts as the database’s SQL query execution as well as a processing engine, allowing users to communicate with the data that is kept inside.

Data mistakes and gaps are invariably caused by a transactional database that has been regularly halted. As a result, a data warehouse acts as an independent platform for analytical jobs across these many sources after data aggregation. This separation of responsibilities enables databases to continuously concentrate on only transactional tasks.

The most adaptable data warehousing system on the market can be obtained for the smallest initial expenditure due to the elasticity of storage and computation combined with the pay-as-you-go model of cloud-based services.

Best practices for designing and building Snowflake data warehouses

While designing modernized cloud database warehouses, having an outline of IT and business needs will be the key to successful deployment. Knowing the requirements and business structure can lead to the successful implementation of data warehousing.

Before initiating the design and development process, let’s know the best practices to be followed for achieving the desired implementation process.

Also Read : Snowflake Certifications – Which snowflake certification is best for you?

Stakeholder empowerment

The data warehouse’s data preparation procedures should enable all stakeholders to collaborate and complete their tasks more quickly and easily:

To accelerate time to value and uncover new areas for insights, data analysts must investigate, organize, clean up, blend, combine, and validate the quality of the data using data that is nearest to the source.

Data scientists work with business leaders to identify the analytical insights that promote innovation and help the company to achieve its goals. They do data exploration, analytics, modeling, and algorithm development on a variety of data sources and formats.

Data engineers must automate data-related processes in order to handle more of them. They develop, oversee, and oversee data architecture and procedures that facilitate analytics and data science.

All stakeholders can benefit from using a Snowflake data warehouse to quickly prepare the data and get it into the appropriate schema for data warehousing. This can be done through the use of a data preparation solution that provides self-service abilities, visual instruction, and AI-driven suggestions for data transformation.

Self-Service during Governance

Data preparation based on self-service is essential. Solutions are required that enable non-technical users to explore, profile, organize, clean, enhance, and perform manual data preparation tasks in a Snowflake data warehouse without relying on constrained IT resources.

There are three methods to achieve that and they are:

- Data silos should be prevented from growing as users gather data extracts and do their own preparation procedures, frequently using spreadsheets.

- To handle data definitions and information, use central shared catalogs or glossaries, and update data warehousing schemas as needed.

- Track and record the history of the information during the preparation and alter it.

Assure Data Quality at Scale via Continuous Validation

Large data volumes and a diverse range of data types—from unstructured, informal data, to transactional data from several systems—are frequently found in snowflake data warehouses. As a result, Snowflake’s data warehouse makes a wider range of data available for value extraction, demanding a more dynamic approach to maintain data quality as opposed to more conventional inflexible approaches. For instance, Snowflake processes queries and employs independently operating virtual warehouses.

By utilizing data preparation solutions that streamline data cleaning processes, provide knowledge about anomalies and data quality problems, and integrate visual methods with machine learning, the organization may boost overall performance as well as the precision, uniformity, and completeness of the information in a warehouse.

The size of Snowflake data warehouses can be handled by automation, which can also spot data values that seem to be wrong, inaccurate, missing, or mismatched. Additionally, this warehouse enables businesses to employ unorganized datasets with dynamic schemas, which calls for a data preparation solution to make the best possible use of the data.

The organization has to regularly confirm that data quality has been maintained and necessary schema for the downstream analytics is satisfied when increasing volumes of new, varied data are ingested and incorporated into the Snowflake data warehouse.

Continuous validation, a procedure that is essential to agile development methodologies, indicates that users shouldn’t have to wait until the completion of a validation process to see and evaluate results. The business needs a data preparation system that can instantly identify possible data quality problems in massive amounts of data, keep track of data flows, and notify users when fresh data is available for validation.

Automated Data Preparation for Downstream Analytics

Data from a broad variety of sources such as Internet of Things (IoT) devices, mobile phones, cameras, consumer behavior, applications, and more, is retrieved and stored in the Snowflake warehouse in massive and recurring volumes. As the amount of data produced by digital transformation increases, so does the chance for competition based on distinct and value-rich data.

To eliminate duplication and enable widespread access to valuable data, data preparation procedures should be planned, publicized, implemented, and shared. The company must take care of performing native and automatic data preparation within Snowflake’s data warehouse to:

- Increased time to value

- Reduce operating expenses

- Boost oversight and governance

There are fewer duplications and inconsistencies, greater portability, and improved management and control as a result of centralizing the scheduling, publishing, and operationalization of data preparation processes. Centralization enhances the opportunity for reuse among multiple data users who can share information regarding how data needs to be processed for front-end tools, development of machine learning frameworks, visualizations, and reports. This is especially true when combined with interaction with data catalogs.

Conventional extract, transform, and load (ETL) emerged as a method for standardizing data preparation for precisely organized enterprise data warehouses with a rigid and predefined format. Organizations require new options for quickening and automating these procedures with more adaptable and altering downstream schemas when it comes to investigating, organizing, blending, and cleaning enormous volumes of unfamiliar, varied, less-structured data.

Advanced cloud data warehouses, including Snowflake, can function as a data lake with fewer restrictions on structured data and predefined data warehouse schema. If your company concentrates on the appropriate data preparation use cases, Snowflake can bring benefits more quickly.

Selection of right use cases

The majority of businesses are content with reporting use cases using transactional data or other standardized, stable data structures. Starting by attempting to reconstruct what already functions makes little sense. Instead, concentrate on the areas in Snowflake’s data warehouse wherein data scientists and data engineers are having trouble in moving beyond conventional reporting, querying, and visualization methods—for instance, leveraging less structured data to improve and enrich data, such as IOT, data from applications, log data, etc.

It is advisable to focus on the use cases that require a lot of manual preparatory tasks for desktop tools or settings with a lot of code. And also use instances in which business teams depend on IT teams to provide datasets when requirements are subject to frequent change.

Concentrate on exploratory data projects that go beyond the capabilities of typical SQL or ETL and have dynamic data warehousing schemas. You can get the most out of the Snowflake cloud data warehouse by concentrating on these use cases.

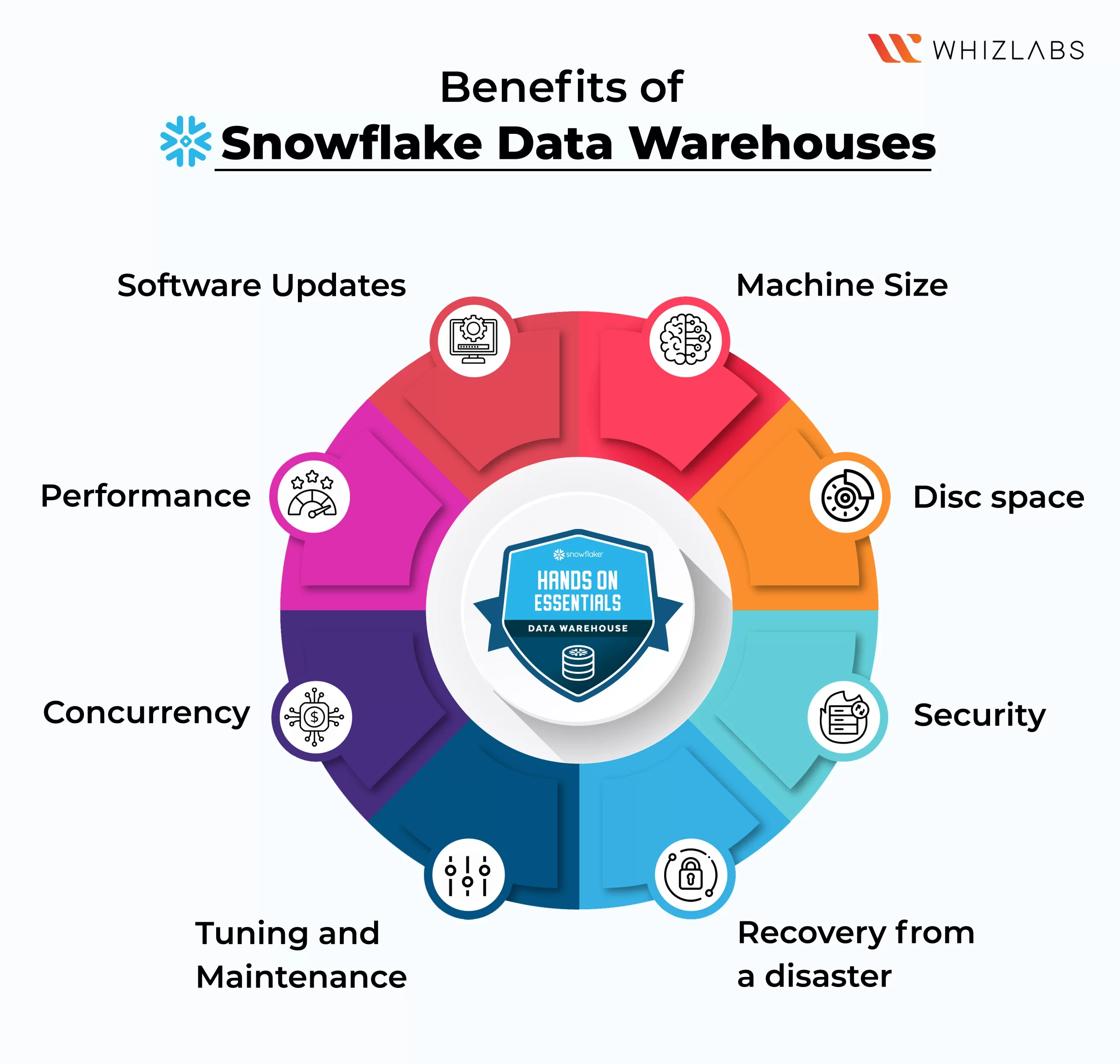

Benefits of Snowflake data warehouses

The benefits of adopting the cloud for data warehousing have been well examined. When compared to conventional on-premises solutions, Snowflake has the following primary benefits:

- Machine Size: The machine size is not a problem anymore. Snowflake may be installed on a single, extra-small cluster and expanded up or down as necessary, unlike standard systems, which often involve deploying a sizable server with the intention of upgrading a few years later.

- Disc space: There is no longer a disc space problem. Because cloud storage is both affordable and essentially limitless in size.

- Security: The system has in-built security and Snowflake offers security features like AES 256 strong end-to-end encryption, IP whitelisting, and multi-factor authentication.

- Recovery from a disaster: Due to automatic data replication over three availability zones, data can continue to function even if one or two data centers get lost.

- Software updates: It is a software service, which often alters to changing operating systems, and the database updates are made quietly and transparently.

- Performance: This will not be a concern as the clusters can be instantly scaled to handle unforeseen enormous data volumes.

- Concurrency: Since each cluster can be set up to automatically grow out to accommodate large numbers of users and thus scale back option is not required.

- Tuning and maintenance: As Snowflake does not support indexes, tuning, and maintenance will not be a major concern. Aside from a few well-documented best practices, the database does not require tuning. There are not many DBA resources needed because the system was designed to be simple.

FAQs

What are the best practices of Snowflake?

Some of the best practices utilized in Snowflake such as:

- Transforming the data incrementally

- Loading the data using COPY or SNOWPIPE

- Usage of multiple data models

- Selection of Virtual Warehouse size

- Retaining raw data history

What are snowflake warehouses?

A Snowflake data warehouse architecture is made up of several databases, each with a specific function. Schemas are included in snowflake databases to further classify the data of each database.

What are the ETL tools used in Snowflake?

Snowflake supports both ETL and ELT (after loading) transformation. Numerous data integration solutions, such as Informatica,Fivetran, Talend, Matillion, and others, are compatible with Snowflake.

Wich cloud platform best suits Snowflake?

Any of the following cloud infrastructures are capable of hosting a Snowflake account:

- AWS, or Amazon Web Services

- Google Cloud Platform

- Azure (Microsoft Azure)

Conclusion

Hope this blog post outlines the best practices to ensure that the Snowflake data warehouse is not only efficient and scalable but also optimized for high-performance analytics. By intelligently organizing your data on the storage layer, you can minimize costs, reduce query execution time, and improve overall efficiency.

And also implementing these best practices, you can unlock the full potential of Snowflake and empower your organization to make data-driven decisions with confidence and agility. To drive more into the snowflake platform, try our hands-on labs and sandboxes.

If you have any queries on this blog post, please feel free to comment us!

- 7 Pro Tips for Managing and Reducing Datadog Costs - June 24, 2024

- Become an NVIDIA Certified Associate in Generative AI and LLMs - June 12, 2024

- What is Azure Data Factory? - June 5, 2024

- An Introduction to Databricks Apache Spark - May 24, 2024

- What is Microsoft Fabric? - May 16, 2024

- Which Kubernetes Certification is Right for You? - April 10, 2024

- Top 5 Topics to Prepare for the CKA Certification Exam - April 8, 2024

- 7 Databricks Certifications: Which One Should I Choose? - April 8, 2024