The AWS Solutions Architect certification is offered by Amazon Web Services (AWS) for professionals who want to design and deploy scalable, highly available, and fault-tolerant systems on the AWS platform.

The AWS Solutions Architect role requires a deep understanding of AWS services and how they integrate, as well as experience in designing and implementing complex systems. In an interview for this position, you can expect a range of technical and non-technical questions that assess your expertise in AWS architecture and your ability to design and implement solutions for complex business problems.

In this blog, we provide you with a comprehensive list of AWS Solutions Architect interview questions and answers to help you prepare for your interview and showcase your knowledge and expertise.

What does an AWS Solutions Architect do?

AWS Solutions Architects are responsible for designing and implementing scalable, highly available, and fault-tolerant systems on the Amazon Web Services (AWS) platform. They work with clients to understand their business needs, identify requirements, and offer architectural advice on how to make the best use of AWS services to meet their objectives.

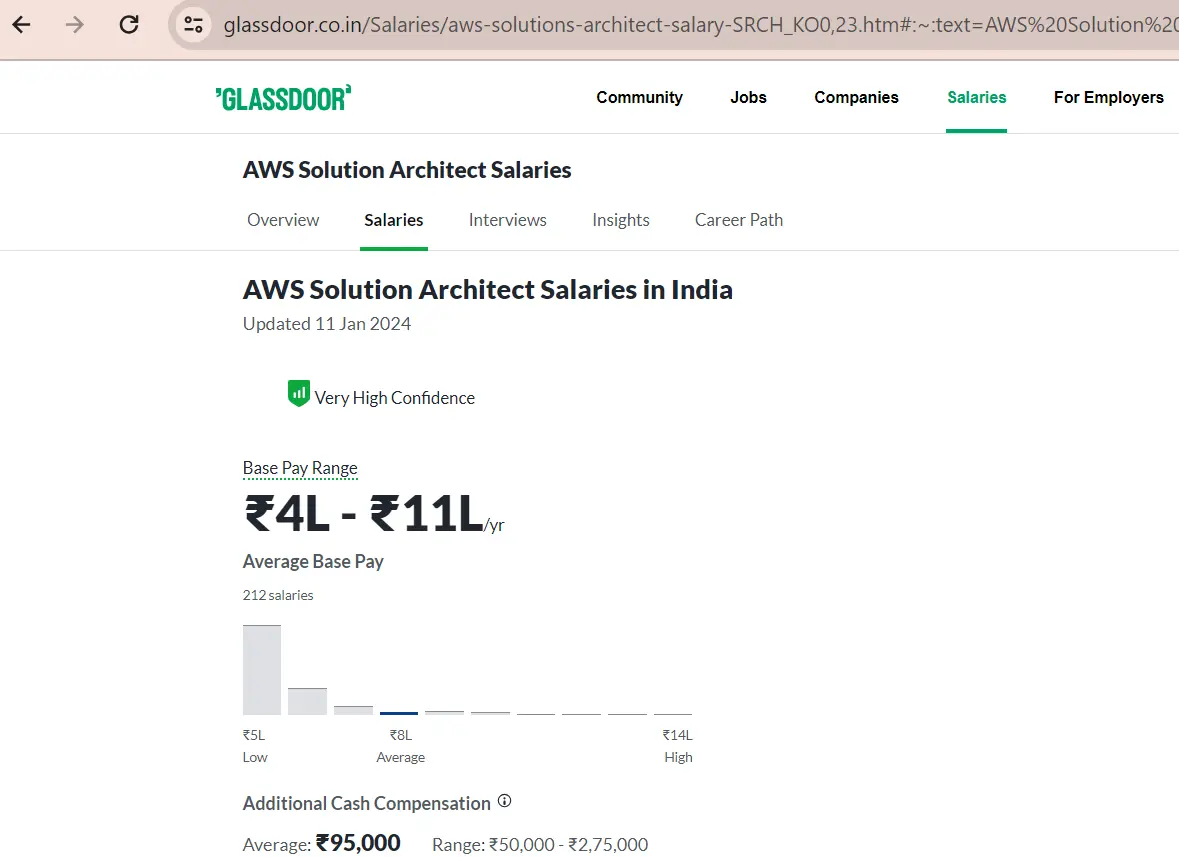

What is the average salary of an AWS Solutions Architect?

AWS professionals are among the highest-paid cloud professionals. Companies recruit these experts on the basis of their skill sets and experience, and those skills are a major reason for their high salaries. Another reason is that the demand for these professionals exceeds the available supply.

According to glassdoor.com, the estimated total annual compensation for an AWS Solutions Architect in the United States is approximately $135,131, with an average yearly salary of $123,678.

In Australia, AWS Certified Solutions Architects earn an average salary of $116,449 per year. Depending on factors such as experience and location, this figure ranges from about $86,000 to $137,000 per year.

AWS Solutions Architect Interview Questions and Answers

1. What is Amazon S3?

Amazon S3 is a cloud-based object storage service offered by Amazon Web Services (AWS). It allows users to store and retrieve large amounts of data, including text, images, videos, and other types of unstructured data, using a simple web interface.

Amazon S3 is built to be highly scalable, durable, and secure. It is designed for 99.999999999% (11 nines) of data durability, meaning that data stored in S3 is highly unlikely to be lost, and it automatically stores data redundantly across multiple locations (multiple Availability Zones for most storage classes) to ensure high availability and durability.

Amazon S3 also supports various features such as versioning, access control, encryption, and lifecycle policies, which allow users to manage their data effectively and efficiently. Users can access their data stored in S3 from anywhere on the internet and can integrate S3 with other AWS services or third-party applications.

Overall, Amazon S3 is a reliable and cost-effective solution for storing and managing large amounts of data in the cloud.
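
A lifecycle policy is easiest to understand as data. The sketch below is a minimal illustration, assuming Python and the rule shape accepted by boto3's `put_bucket_lifecycle_configuration`: it builds a rule that moves objects under a `logs/` prefix to S3 Standard-IA after 30 days and deletes them after 365 days. The prefix, day counts, and bucket name are illustrative assumptions, and the actual API call is left commented out because it needs AWS credentials.

```python
import json


def build_lifecycle_config(prefix: str, ia_days: int, expire_days: int) -> dict:
    """Build a lifecycle configuration in the shape boto3 expects."""
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.rstrip('/')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # Move matching objects to Standard-IA after ia_days.
                "Transitions": [{"Days": ia_days, "StorageClass": "STANDARD_IA"}],
                # Delete matching objects after expire_days.
                "Expiration": {"Days": expire_days},
            }
        ]
    }


config = build_lifecycle_config("logs/", ia_days=30, expire_days=365)
print(json.dumps(config, indent=2))

# Applying it requires boto3 and AWS credentials; the bucket name is hypothetical:
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-bucket", LifecycleConfiguration=config)
```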

2. What is the difference between SAA-C02 and SAA-C03?

SAA-C02 and SAA-C03 are two versions of the AWS Certified Solutions Architect – Associate certification exam. The main difference between them is the content and topics the exam covers.

The SAA-C02 exam was introduced in 2020 and covers the foundational concepts and best practices related to AWS services and solutions. It includes topics such as AWS architecture, compute, networking, storage, databases, security, and cost optimization.

The SAA-C03 exam was launched in 2022 and updates and expands on the topics covered in the SAA-C02 exam. It includes new and updated content related to the latest AWS services, architectures, and best practices, such as serverless computing, containers, machine learning, security, and governance. It also emphasizes the importance of sustainability, ethics, and compliance in AWS solutions.

3. Define the term CloudFront.

Amazon CloudFront is a popular content delivery network (CDN) that helps to accelerate the delivery of static and dynamic web content, including HTML, CSS, JavaScript, images, and videos, to users around the world.

One of the key advantages of using Amazon CloudFront is its ability to integrate with other AWS services, such as Amazon S3, Elastic Load Balancing, and Amazon EC2. This allows you to easily serve your content from a variety of sources, depending on your specific needs.

In addition, Amazon CloudFront works with AWS Shield, which provides protection against DDoS attacks, and supports Lambda@Edge, which lets you run custom code closer to your users and personalize content based on their location, device, or other factors.

Overall, Amazon CloudFront is a powerful CDN that provides fast, reliable content delivery to users around the world, while also offering a range of features and integrations to help you optimize and secure your content delivery.

4. What are the different types of load balancers in EC2?

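Elastic Load Balancing (ELB), which distributes traffic across Amazon EC2 instances and other targets, offers the following main types of load balancers (AWS also offers a Gateway Load Balancer for third-party virtual appliances such as firewalls):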

Application Load Balancer (ALB): The ALB operates at the application layer (Layer 7) of the OSI model and supports advanced, content-based routing, such as path-based (URL) routing and host-based routing. It also supports WebSocket and HTTP/2 traffic and can route traffic to containerized applications.

Network Load Balancer (NLB): The NLB operates at the transport layer (Layer 4) of the OSI model and provides high-performance, low-latency traffic management of TCP and UDP traffic. It is designed to handle millions of requests per second and can be used to route traffic to instances, containers, or IP addresses.

Classic Load Balancer (CLB): As the name suggests, the CLB was the first load balancer offered by Elastic Load Balancing. It provides basic load balancing and operates at both the application layer (Layer 7) and the transport layer (Layer 4) of the OSI model. It is a legacy option that was mainly used for applications built within the EC2-Classic network; AWS recommends ALB or NLB for new applications.
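
To make host- and path-based routing concrete, here is a simplified model of how an ALB listener evaluates its rules: in priority order (lowest number first), falling back to a default rule when nothing matches. The rule set, the target group names, and the use of Python's `fnmatch` to emulate `*`/`?` wildcards are assumptions for illustration; this is not the ALB API.

```python
from fnmatch import fnmatchcase

# Hypothetical listener rules: (priority, host pattern, path pattern, target group).
# None means the rule has no condition of that kind.
RULES = [
    (10, None, "/api/*", "api-targets"),
    (20, "images.example.com", None, "image-targets"),
    (30, None, "/static/*", "static-targets"),
]
DEFAULT_TARGET = "web-targets"


def route(host: str, path: str) -> str:
    """Return the target group for a request by checking rules in priority order."""
    for _priority, host_pattern, path_pattern, target in sorted(RULES, key=lambda r: r[0]):
        # Host matching is case-insensitive; path matching is case-sensitive.
        if host_pattern is not None and not fnmatchcase(host.lower(), host_pattern):
            continue
        if path_pattern is not None and not fnmatchcase(path, path_pattern):
            continue
        return target
    return DEFAULT_TARGET  # default rule: used when no other rule matches


print(route("www.example.com", "/api/users"))   # api-targets
print(route("images.example.com", "/cat.png"))  # image-targets
print(route("www.example.com", "/about"))       # web-targets
```

Because rule 10 has the lowest priority number, a request for `images.example.com/api/x` still goes to `api-targets`, which is why rule priorities matter when conditions overlap.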

5. What are the main differences between ‘horizontal’ and ‘vertical’ scaling?

| Parameter | Horizontal Scaling | Vertical Scaling |
| --- | --- | --- |
| Definition | Adding more instances or machines to handle increased load | Increasing resources of a single instance or machine |
| Cost | More cost-effective since adding instances is usually less expensive than upgrading a single machine | More expensive since upgrading a single machine can be costly |
| Complexity | More complex since it involves managing multiple instances, distributing workload, and coordinating communication | Simpler since it involves upgrading a single instance, but may require expertise in hardware or VM configuration |
| Availability | Better availability since workload can be rerouted to other instances if one fails | May have a single point of failure if the upgraded machine fails |
| Performance | Can provide better performance by distributing workload across multiple instances | Can provide better performance in situations where a single instance has high resource requirements |
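
A quick worked example of the Availability row, assuming the same total capacity (16 vCPUs) is provided either by four 4-vCPU instances (horizontal) or by one 16-vCPU instance (vertical), and that one instance fails. The helper function is hypothetical.

```python
def remaining_vcpus(instances: int, vcpus_each: int, failed: int = 1) -> int:
    """vCPUs still serving traffic after `failed` instances go down."""
    return max(instances - failed, 0) * vcpus_each


horizontal = remaining_vcpus(instances=4, vcpus_each=4)  # 3 healthy instances left
vertical = remaining_vcpus(instances=1, vcpus_each=16)   # the only instance is down
print(f"horizontal: {horizontal}/16 vCPUs, vertical: {vertical}/16 vCPUs")
# horizontal: 12/16 vCPUs, vertical: 0/16 vCPUs
```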

6. What is AWS Lambda?

AWS Lambda is a serverless computing service provided by Amazon Web Services (AWS). It allows developers to run code without the need for managing servers or infrastructure. With Lambda, developers can simply upload their code and let AWS handle the rest, including scaling, capacity planning, and maintaining the underlying infrastructure.

Lambda supports several programming languages, including Node.js, Python, Java, Go, and more. Developers can create serverless applications that respond to events, such as API requests, file uploads, database changes, and more.

These applications can be deployed as standalone functions or as part of a larger serverless architecture, using other AWS services such as API Gateway, Amazon S3, DynamoDB, and others.
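
A minimal sketch of a Python Lambda function triggered by S3 uploads. The `lambda_handler(event, context)` signature and the `Records[].s3.bucket.name` / `Records[].s3.object.key` fields follow the standard S3 event notification format; the sample event and bucket name are made up, and the function only collects object keys instead of doing real work.

```python
import json
import urllib.parse


def lambda_handler(event, context):
    """Collect the bucket/key of every object in an S3 event notification."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 events are URL-encoded (e.g. spaces arrive as '+').
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        processed.append(f"{bucket}/{key}")
    print(json.dumps({"processed": processed}))  # Lambda sends stdout to CloudWatch Logs
    return {"processed": processed}


# Local test with a hand-written sample event (Lambda passes a real context object).
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "uploads/my+photo.jpg"}}}
    ]
}
result = lambda_handler(sample_event, None)
```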

7. Explain the benefits of a Disaster Recovery (DR) solution in AWS.

The following are the benefits of implementing Disaster Recovery (DR) on AWS:

Automated recovery: AWS provides automated recovery options, enabling organizations to recover their critical applications and data quickly in the event of a disaster.

Global coverage: AWS offers global coverage with its presence in multiple regions and availability zones, allowing organizations to deploy their DR solutions across multiple geographic locations to ensure business continuity.

High durability and availability: AWS offers high durability and availability for storage services like Amazon S3, Glacier, and EBS, ensuring that data is safe and available in the event of a disaster.

Flexible recovery options: AWS supports a range of DR strategies, including backup and restore, pilot light, warm standby, and multi-site active-active, so organizations can choose the recovery strategy that best fits their needs.

Enhanced security: AWS offers a range of security features to help protect data and resources, including encryption, access control, and security monitoring.

8. What is DynamoDB?

DynamoDB is a fully managed NoSQL database service offered by Amazon Web Services (AWS). It is designed to provide low-latency and high-performance access to data, making it suitable for applications that require real-time data updates and rapid scalability.

DynamoDB supports both key-value and document data models, making it easy to store and retrieve structured and semi-structured data. It provides fast and predictable performance, automatic scaling of throughput capacity, and low operational overhead.
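
The partition-key/sort-key model behind DynamoDB can be sketched with an in-memory toy. This is not DynamoDB or boto3 (in a real application you would call methods such as `Table.put_item`, `Table.get_item`, and `Table.query` from boto3); the class name, the `pk`/`sk` attribute names, and the sample items are hypothetical. Items in one partition are kept in sort-key order, which is what makes prefix queries such as "all orders for a customer" efficient.

```python
from collections import defaultdict


class ToyKeyValueTable:
    """In-memory model of a table with a partition key and a sort key (not DynamoDB itself)."""

    def __init__(self):
        # partition key -> {sort key -> item}
        self._partitions = defaultdict(dict)

    def put_item(self, item: dict) -> None:
        # Items are schemaless apart from the two key attributes.
        self._partitions[item["pk"]][item["sk"]] = item

    def get_item(self, pk: str, sk: str):
        return self._partitions.get(pk, {}).get(sk)

    def query(self, pk: str, sk_prefix: str = "") -> list:
        """Items in one partition whose sort key begins with sk_prefix, in sort-key order."""
        part = self._partitions.get(pk, {})
        return [part[sk] for sk in sorted(part) if sk.startswith(sk_prefix)]


table = ToyKeyValueTable()
table.put_item({"pk": "CUSTOMER#1", "sk": "PROFILE", "name": "Ana"})
table.put_item({"pk": "CUSTOMER#1", "sk": "ORDER#2024-02-10", "total": 15, "items": ["pen"]})
table.put_item({"pk": "CUSTOMER#1", "sk": "ORDER#2024-01-05", "total": 40, "items": ["book"]})
orders = table.query("CUSTOMER#1", sk_prefix="ORDER#")
```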

9. What are the advantages of using AWS CloudFormation?

AWS CloudFormation is a service that allows users to define and deploy infrastructure as code. Here are some of the advantages of using AWS CloudFormation:

- Automation: AWS CloudFormation allows users to automate the deployment of infrastructure, including EC2 instances, load balancers, and databases, among others. This makes it easier to deploy and manage complex infrastructure, and helps reduce the risk of human error.

- Infrastructure as code: With CloudFormation, infrastructure can be defined and managed as code, which means that changes can be made quickly and easily, and version controlled.

- Consistency: With CloudFormation, infrastructure is deployed the same way every time, which helps ensure that resources are configured as intended across environments.

- Reusability: CloudFormation templates can be reused across multiple deployments, saving time and effort.

- Scalability: CloudFormation templates can be used to deploy highly scalable infrastructure, allowing users to easily scale up or down depending on the needs of the application.

- Flexibility: CloudFormation templates are highly customizable, allowing users to define and deploy infrastructure in a way that meets their specific needs.

- Cost-effective: By defining infrastructure as code, users can reduce the risk of overprovisioning resources, which can result in cost savings.

Overall, AWS CloudFormation simplifies the deployment and management of infrastructure, reduces the risk of human error, and provides a consistent, version-controlled way to deploy infrastructure. It also provides scalability, flexibility, and cost savings.
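
As a small illustration of infrastructure as code, the sketch below builds a minimal CloudFormation template in Python and serializes it to JSON. The resource type (`AWS::S3::Bucket` with versioning enabled) and the `Ref` output are standard template elements, but the logical names are illustrative; deploying it assumes the AWS CLI and credentials are available, so that step is shown only as a comment.

```python
import json

# A minimal template: one versioned S3 bucket whose name is exposed as an output.
template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Example stack: a versioned S3 bucket",
    "Resources": {
        "DataBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {"VersioningConfiguration": {"Status": "Enabled"}},
        }
    },
    "Outputs": {
        # Ref on an S3 bucket resolves to the bucket name.
        "BucketName": {"Value": {"Ref": "DataBucket"}},
    },
}

template_body = json.dumps(template, indent=2)
print(template_body)

# Deploying requires the AWS CLI and credentials; the stack name is hypothetical:
#   aws cloudformation deploy --template-file template.json --stack-name example-stack
```

Because the template is plain text, it can be committed to version control, reviewed, and redeployed to create identical stacks in other environments.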

10. What are the advantages of using AWS CloudFormation?

AWS CloudFormation is a service provided by Amazon Web Services that allows users to define and deploy infrastructure as code. There are several advantages to using AWS CloudFormation:

Automation: AWS CloudFormation provides automation for infrastructure deployment, allowing users to define the infrastructure resources and their configuration in code. This eliminates the need for manual setup and configuration of resources, which can be time-consuming and error-prone.

Consistency: CloudFormation ensures that the infrastructure resources are deployed in a consistent manner, which reduces the risk of misconfiguration and errors that can lead to downtime.

Scalability: CloudFormation allows users to easily scale their infrastructure up or down as needed. This is particularly useful for applications that experience spikes in traffic or usage.

Cost-effective: CloudFormation enables users to provision only the resources they need, reducing costs associated with over-provisioning.

Version control: With CloudFormation, infrastructure is defined as code, allowing for version control and the ability to roll back to previous versions if necessary.

Easy updates: CloudFormation makes it easy to update and modify existing infrastructure resources without having to manually make changes to each resource.

Cross-region deployment: CloudFormation allows for deployment of infrastructure resources across multiple AWS regions, making it possible to build applications that are highly available and fault-tolerant.

11. What is Elastic Beanstalk?

Elastic Beanstalk is a fully managed service provided by Amazon Web Services (AWS) that makes it easy to deploy, manage, and scale web applications and services. It is a platform-as-a-service (PaaS) offering that abstracts the underlying infrastructure and allows developers to focus on writing code.

With Elastic Beanstalk, developers can simply upload their code and Elastic Beanstalk will handle the rest, including provisioning the required infrastructure (such as Amazon EC2 instances, load balancers, and databases), deploying the code, and monitoring and scaling the application.

12. What is a serverless application in AWS?

A serverless application in AWS is an application that is designed to run without the need for servers or server management. In a serverless architecture, the cloud provider manages the infrastructure and automatically scales resources up and down based on demand. AWS offers a serverless computing service called AWS Lambda that allows developers to run their code without managing servers.

In a serverless application, the developer writes code and deploys it to a service such as AWS Lambda. The code is triggered by an event, such as a request to API Gateway or a change in a database, and runs in a short-lived execution environment managed by AWS. After the code finishes, AWS may reuse that environment for later invocations or shut it down. As a result, the developer pays only for the compute time the code actually uses, rather than for a fixed amount of server capacity.

13. What is meant by Sharding?

Sharding is a technique used in database management to horizontally partition data across multiple servers or nodes. In sharding, the database is split into smaller logical components called shards, which are distributed across different physical servers or nodes. Each shard contains a subset of the data in the database.

The primary goal of sharding is to improve database performance and scalability by distributing the workload across multiple servers. By partitioning the data, the system can process data more quickly, and can handle larger data volumes than a single server can.
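
A common way to route keys to shards is hash-based partitioning. This minimal sketch assumes four shards and string keys, and uses `hashlib` so that a key maps to the same shard in every process (Python's built-in `hash()` is randomized per process for strings). The user IDs are made up.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a stable hash of the key."""
    # SHA-256 is used here only to spread keys evenly, not for security.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


NUM_SHARDS = 4
shards = {i: [] for i in range(NUM_SHARDS)}
for user_id in ["user-1", "user-2", "user-3", "user-4", "user-5", "user-6"]:
    shards[shard_for(user_id, NUM_SHARDS)].append(user_id)
print(shards)
```

Simple modulo hashing remaps most keys when the number of shards changes; schemes such as consistent hashing reduce that data movement.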

14. Define the term “Redshift”.

Amazon Redshift is a fully managed data warehousing service provided by Amazon Web Services (AWS). It is a cloud-based, column-oriented relational database that is optimized for large-scale data analytics workloads. Redshift is designed to handle petabyte-scale data warehouses with ease and provides high performance and scalability, making it a popular choice for large organizations and data-driven businesses.

15. What is ‘SQS’?

Amazon Simple Queue Service (SQS) is a fully managed message queuing service provided by Amazon Web Services (AWS). SQS enables decoupling of components in a distributed system by allowing one component to send a message to another component without having to know the details of how the receiving component works.

SQS provides a reliable, highly scalable, and flexible way to transmit messages between different components of a distributed application or system. With SQS, messages are stored in a queue until the receiving component retrieves and processes them. This approach allows components to operate independently and asynchronously, providing greater flexibility and scalability.
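
The receive-process-delete cycle is the key idea behind SQS. The toy class below models it in memory, including the visibility timeout that hides a received message until it is deleted or the timeout expires. It is a simplified, assumption-based model rather than the real service: with boto3 you would call `send_message`, `receive_message`, and `delete_message` on an SQS client instead, and real receipt handles differ from message IDs. The class name and explicit `now` clock are hypothetical.

```python
import itertools


class ToyQueue:
    """In-memory model of SQS-style receive/delete with a visibility timeout."""

    def __init__(self, visibility_timeout: float):
        self.visibility_timeout = visibility_timeout
        self._messages = {}           # message id -> (body, invisible_until)
        self._ids = itertools.count()

    def send(self, body: str) -> None:
        self._messages[next(self._ids)] = (body, 0.0)

    def receive(self, now: float):
        """Return (message id, body) for one visible message and hide it, or None."""
        for msg_id, (body, invisible_until) in self._messages.items():
            if invisible_until <= now:
                self._messages[msg_id] = (body, now + self.visibility_timeout)
                return msg_id, body
        return None

    def delete(self, msg_id) -> None:
        self._messages.pop(msg_id, None)


q = ToyQueue(visibility_timeout=30)
q.send("order-1")
first = q.receive(now=0)     # consumer A gets "order-1"
hidden = q.receive(now=10)   # None: still invisible while A works on it
retry = q.receive(now=31)    # A never deleted it, so it becomes visible again
q.delete(retry[0])           # processed successfully this time
```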

16. Define AWS OpsWorks.

AWS OpsWorks is a configuration management service provided by Amazon Web Services (AWS) that helps developers automate the deployment, configuration, and management of applications on Amazon Elastic Compute Cloud (EC2) instances or on-premises servers.

OpsWorks provides a flexible and scalable way to manage infrastructure and applications using a variety of automation tools and workflows. It supports both Chef and Puppet, two popular open-source configuration management tools. In OpsWorks Stacks, resources are organized into stacks made up of layers, and built-in layers are available for common application architectures such as LAMP (Linux, Apache, MySQL, PHP) and Rails.

OpsWorks allows developers to manage and automate the entire lifecycle of an application, from provisioning and deploying infrastructure, to configuring and managing application components, to monitoring and scaling the application as needed.

17. What is AWS Auto Scaling?

AWS Auto Scaling is a service provided by Amazon Web Services (AWS) that automatically adjusts the capacity of EC2 instances, ECS tasks, and other AWS resources to maintain performance and optimize costs.

Auto Scaling helps ensure that applications are able to handle fluctuations in demand without manual intervention, by automatically scaling up or down the number of instances in response to changes in workload. It works by using predefined policies to monitor application performance and automatically add or remove instances as needed to maintain performance.
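
Target tracking scaling policies adjust capacity to keep a metric, such as average CPU utilization, near a target value. The function below is a simplified, proportional approximation of that idea, clamped to the group's minimum and maximum size. It is a hypothetical helper, not the service's algorithm: the real service also accounts for cooldowns, instance warm-up, and more conservative scale-in, so treat the numbers as illustrative.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Proportional estimate of the capacity needed to bring `metric` back to `target`.

    Simplified illustration only; not the exact AWS algorithm.
    """
    if current == 0:
        return min_size
    needed = math.ceil(current * metric / target)
    return max(min_size, min(max_size, needed))


# Target: 60% average CPU across a group allowed to run 2 to 10 instances.
print(desired_capacity(4, metric=90, target=60, min_size=2, max_size=10))   # 6 (scale out)
print(desired_capacity(6, metric=20, target=60, min_size=2, max_size=10))   # 2 (scale in)
print(desired_capacity(8, metric=100, target=60, min_size=2, max_size=10))  # 10 (capped at max)
```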

18. Briefly explain Geo Restriction in CloudFront.

Geo Restriction is a feature provided by Amazon CloudFront, the content delivery network (CDN) service of Amazon Web Services (AWS), that allows you to control access to content based on the geographic location of the user.

With Geo Restriction, you can allow or block access to content based on the geographic location of the user’s IP address. This can help you meet compliance or content licensing requirements, or prevent unauthorized access to content from specific locations.

Geo Restriction supports two types of restrictions: an allow list (called "whitelist" in the CloudFront API) and a block list (called "blacklist" in the API). An allow list restricts access to viewers in the listed countries, while a block list denies access to viewers in the listed countries.
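
The allow/block decision can be sketched as follows. The dictionaries mirror the `RestrictionType`/`Quantity`/`Items` fields of CloudFront's geo restriction settings, but the decision function and the country lists are hypothetical illustrations; CloudFront performs this check for you and returns HTTP 403 (Forbidden) to blocked viewers.

```python
def is_allowed(country_code: str, restriction: dict) -> bool:
    """Decide whether a viewer from `country_code` (ISO 3166-1 alpha-2) may access content."""
    kind = restriction["RestrictionType"]
    countries = set(restriction.get("Items", []))
    if kind == "whitelist":   # allow list: only listed countries get content
        return country_code in countries
    if kind == "blacklist":   # block list: listed countries are refused
        return country_code not in countries
    return True               # "none": no geographic restriction


allow_list = {"RestrictionType": "whitelist", "Quantity": 2, "Items": ["US", "CA"]}
block_list = {"RestrictionType": "blacklist", "Quantity": 1, "Items": ["FR"]}
no_restriction = {"RestrictionType": "none", "Quantity": 0}
```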

19. Explain the usage of buffers in AWS.

In AWS, a buffer is a temporary storage area that holds data while it is being moved from one system to another. Buffers are commonly used to manage data transfer between services or components of a distributed system, such as between an application and a database, or between different AWS services.

The use of a buffer helps to ensure that data is transferred efficiently and without loss or corruption. It allows for the efficient handling of large volumes of data by temporarily holding data in a queue, allowing other processes or components to continue to operate without being overwhelmed by the data flow.
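
In AWS, services such as Amazon SQS or Amazon Kinesis Data Streams usually play the role of the buffer. The in-process sketch below uses Python's `queue.Queue` as a stand-in to show the core idea: a bounded buffer lets a fast producer and a slower consumer run independently, and the producer simply waits when the buffer is full instead of overwhelming the consumer. The buffer size and workload are arbitrary assumptions.

```python
import queue
import threading

buffer = queue.Queue(maxsize=10)  # bounded: put() blocks while the buffer is full
SENTINEL = None                   # signals "no more data"
processed = []


def producer(n: int) -> None:
    for i in range(n):
        buffer.put(i)             # waits if the consumer falls behind
    buffer.put(SENTINEL)


def consumer() -> None:
    while True:
        item = buffer.get()
        if item is SENTINEL:
            break
        processed.append(item * 2)  # stand-in for slower downstream work


threads = [threading.Thread(target=producer, args=(100,)),
           threading.Thread(target=consumer)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```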

20. Is it advised to use encryption techniques for S3?

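Yes, encryption is generally recommended for S3, particularly for sensitive or confidential data. Amazon S3 offers several options for encrypting data stored in S3 buckets, including server-side encryption (with S3-managed keys, SSE-S3; with AWS KMS keys, SSE-KMS; or with customer-provided keys, SSE-C) and client-side encryption. Since January 2023, S3 encrypts all new objects with SSE-S3 by default.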
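
A minimal sketch of a bucket default-encryption rule, assuming boto3's `put_bucket_encryption` request shape: `AES256` selects SSE-S3 and `aws:kms` selects SSE-KMS. The bucket name and KMS key ID are placeholders, and the API call is commented out because it requires credentials.

```python
def default_encryption_config(kms_key_id=None) -> dict:
    """Bucket default-encryption rule in the shape boto3's put_bucket_encryption expects."""
    if kms_key_id is None:
        default = {"SSEAlgorithm": "AES256"}  # SSE-S3: S3-managed keys
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}  # SSE-KMS
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


sse_s3 = default_encryption_config()
sse_kms = default_encryption_config("EXAMPLE-KEY-ID")  # hypothetical KMS key ID

# Applying it requires boto3 and credentials; the bucket name is hypothetical:
# import boto3
# boto3.client("s3").put_bucket_encryption(
#     Bucket="example-bucket", ServerSideEncryptionConfiguration=sse_kms)
```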

21. What is AWS VPC?

Amazon Virtual Private Cloud (VPC) lets users provision a logically isolated, private section of the AWS cloud. Users control the virtual networking environment, including choosing the IP address range, creating subnets, and configuring route tables and network gateways. Each VPC belongs to a single AWS Region and is logically isolated from other VPCs, including those in the same Region.
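
Planning a VPC's address space is simple arithmetic that Python's `ipaddress` module can check. This sketch assumes an example `10.0.0.0/16` VPC split into `/24` subnets (the public/private naming is illustrative), and accounts for the five addresses AWS reserves in every subnet (the first four and the last).

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")       # example VPC CIDR block
subnets = list(vpc.subnets(new_prefix=24))      # 256 possible /24 subnets
public_a, private_a = subnets[0], subnets[1]

AWS_RESERVED_PER_SUBNET = 5  # AWS reserves the first four and the last address
usable = public_a.num_addresses - AWS_RESERVED_PER_SUBNET
print(public_a, private_a, usable)  # 10.0.0.0/24 10.0.1.0/24 251
```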

Conclusion

We hope this article helps you prepare for the AWS Solutions Architect interview questions you may face in your upcoming interviews. To earn the AWS Solutions Architect certification, theoretical knowledge alone is not enough; you also need to show that you know how to work with the AWS platform and its services.

To build those practical skills, use hands-on labs and sandboxes to become familiar with AWS and improve your chances of getting hired by top companies.

If you have any questions, feel free to leave a comment!
