In this blog, you will learn how AWS Lambda, a serverless compute service, supports AI inference and model execution without infrastructure management, and why it matters for the AWS Certified AI Practitioner exam. Read on for a look at serverless architecture, model deployment strategies, inference optimization, and scalable AI workloads, each illustrated with a use case.

Serverless AI Model Execution

AWS Lambda enables serverless AI inference by eliminating infrastructure management while providing auto-scaling, cost efficiency, and seamless integration with AWS AI/ML services. Below is an in-depth look at how AWS Lambda supports AI inference and model execution.

Because AWS Lambda removes server management, developers can focus entirely on AI logic rather than server provisioning. AI models can be deployed as Lambda functions, which can significantly reduce operational costs.

Its auto-scaling capabilities adjust resources automatically based on request load. Additionally, Lambda’s pay-per-use model charges only for the number of requests and the compute time a function actually consumes, which keeps it cost-effective for intermittent workloads. By enabling real-time inference, AI models can process data as it arrives and deliver insights without dedicated infrastructure.

Use Case

Business Problem

An e-commerce platform needs a scalable and cost-efficient solution to automatically classify uploaded product images. The main goals are to improve product categorization, enhance search accuracy, and offer personalized product recommendations.

Solution Overview

AWS Lambda is used to execute an AI-based image classification model in a serverless manner, eliminating the need for dedicated infrastructure.

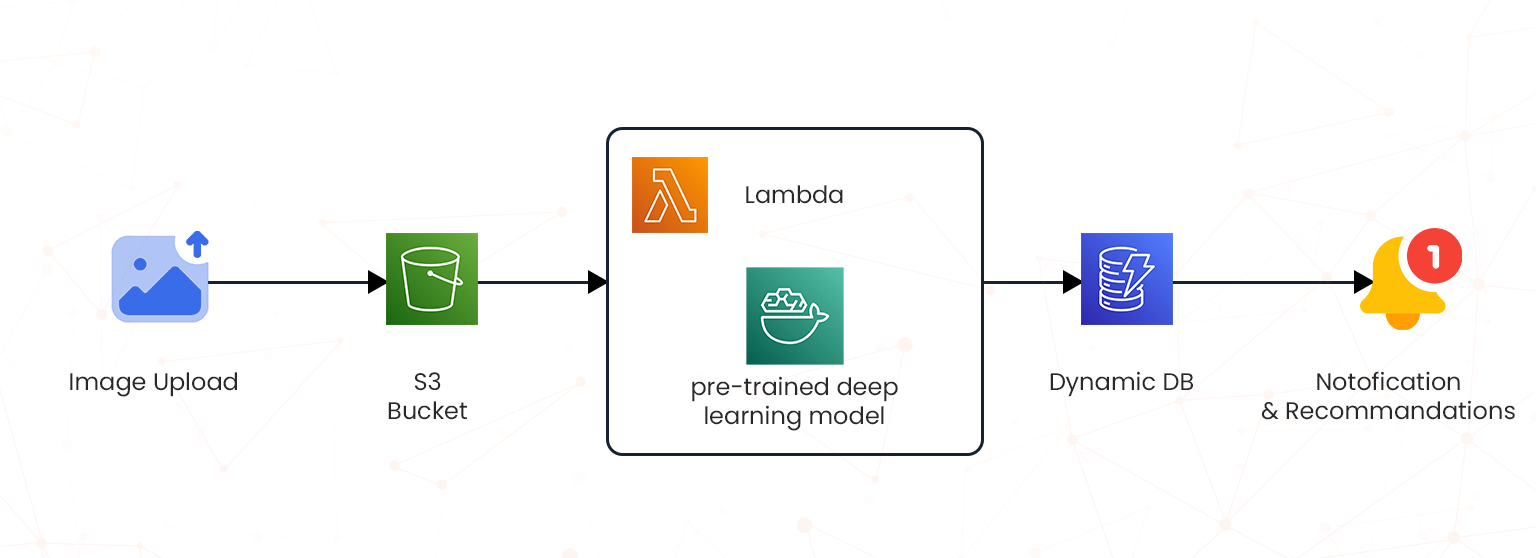

Architecture Flow

- Image Upload: A seller uploads a product image to an Amazon S3 bucket.

- Event Trigger: The S3 upload event triggers an AWS Lambda function.

- AI Model Execution: The Lambda function loads a pre-trained deep learning model to classify the image.

- Category Assignment: The predicted category (e.g., “Electronics > Smartphones”) is stored in Amazon DynamoDB.

- Notification & Recommendations: The platform updates the product listing and suggests similar products to buyers.
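The flow above can be sketched as a single Lambda handler. This is a minimal illustration rather than a reference implementation: the DynamoDB table name `ProductCategories`, the item attributes, and the `classify_image` placeholder are all assumptions made for the example, and a real function would load and run an actual model.

```python
import urllib.parse


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects


def classify_image(image_bytes):
    """Hypothetical placeholder: replace with real model inference."""
    return "Electronics > Smartphones"


def handler(event, context, s3=None, table=None, classify=classify_image):
    # boto3 is imported lazily so the helpers can be exercised without AWS access.
    if s3 is None or table is None:
        import boto3
        s3 = s3 or boto3.client("s3")
        # "ProductCategories" is an assumed table name for this sketch.
        table = table or boto3.resource("dynamodb").Table("ProductCategories")

    results = []
    for bucket, key in extract_s3_objects(event):
        image_bytes = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        category = classify(image_bytes)
        item = {"image_key": key, "category": category}
        table.put_item(Item=item)
        results.append(item)
    return {"classified": results}
```

Passing the clients in as optional arguments is a design choice for this sketch: Lambda itself only supplies `event` and `context`, so the defaults apply in production, while tests can substitute fakes.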

AWS Services Used

- AWS Lambda – Executes the AI model without managing servers.

- Amazon S3 – Stores uploaded product images.

- Amazon DynamoDB – Stores classification results.

- Amazon API Gateway – Facilitates real-time API calls for product categorization.

- AWS Step Functions (Optional) – Manages workflow if additional processing is needed.

Key Benefits

- No Infrastructure Management – Focus on AI model logic rather than server provisioning.

- Auto-Scaling – Handles fluctuating image upload volumes efficiently.

- Cost-Effective – Pay only for execution time, reducing operational expenses.

- Real-Time Inference – Ensures immediate product categorization and user experience enhancement.

By leveraging AWS Lambda for serverless AI inference, the e-commerce platform achieves real-time image classification, optimizes operational costs, and enhances the overall shopping experience.

AWS Machine Learning Services Integration

AWS Lambda integrates with AWS AI and ML services to extend what AI workloads can do. An AWS AI and ML certification path covers these services in more depth. Amazon SageMaker is a fully managed machine learning service that offers model training and a range of inference options. AWS Inferentia is specialized AI inference hardware designed for optimized performance; Lambda functions do not run on Inferentia directly, but they can call models hosted on Inferentia-powered instances.

AWS Fargate runs containerized AI models with serverless scaling and efficient resource management. Amazon API Gateway exposes AI-powered APIs to external applications. AWS Step Functions orchestrates multi-step AI workflows. Additionally, Amazon EventBridge routes events in real time, enabling event-driven AI inference with timely, automated responses.

Use Case

Business Problem

A financial services company needs to implement a real-time fraud detection system that analyzes transactions and detects fraud instantly. The system should be scalable, serverless, and integrated with multiple AWS AI/ML services for optimal performance.

Solution Overview

AWS Lambda serves as the central execution layer, integrating with various AWS AI/ML services to provide real-time fraud detection.

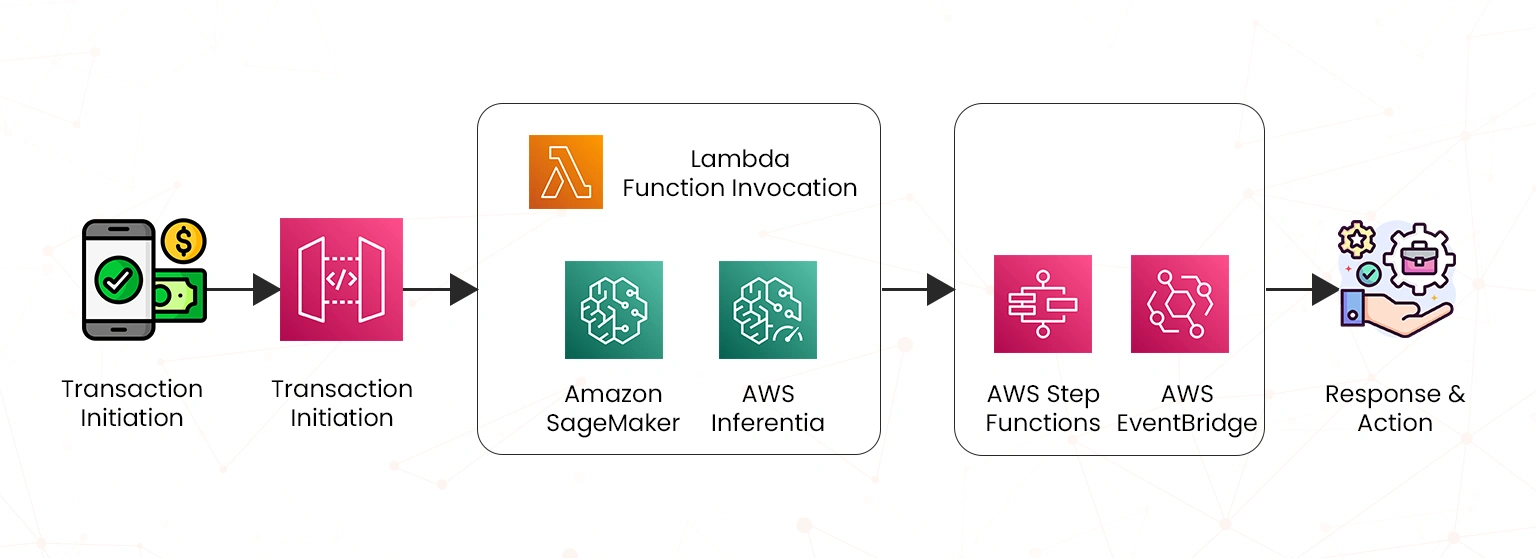

Architecture Flow

- Transaction Initiation:

- A user initiates a transaction via a mobile app or web platform.

- The transaction request is sent to Amazon API Gateway.

- Lambda Function Invocation:

- API Gateway triggers an AWS Lambda function to process the transaction.

- Fraud Detection Using AWS AI/ML Services:

- Amazon SageMaker: Runs a pre-trained fraud detection model to classify the transaction as legitimate or fraudulent.

- AWS Inferentia: If deep learning inference is required, the request is processed on Inferentia-powered instances for high-speed predictions.

- Decision Workflow & Event Handling:

- AWS Step Functions: Coordinates multiple ML models if additional verification steps are needed (e.g., user risk scoring, historical data analysis).

- Amazon EventBridge: Triggers alerts and notifications if a suspicious transaction is detected.

- Response & Action:

- If the transaction is legitimate, it proceeds as usual.

- If flagged as fraudulent, additional verification (e.g., OTP confirmation) is required.
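The core of this flow, an API Gateway request scored by a SageMaker endpoint with an EventBridge alert on suspicious results, might look like the sketch below. The endpoint name `fraud-detector`, the feature names, the event source string, the 0.8 threshold, and the assumption that the model returns a single numeric score as plain text are all hypothetical choices for illustration.

```python
import json

FRAUD_THRESHOLD = 0.8  # assumed cut-off for this sketch
FEATURES = ("amount", "merchant_risk", "account_age_days")  # hypothetical feature names


def handler(event, context, sagemaker_runtime=None, events=None):
    # Clients are created lazily so the logic can be tested with fakes.
    if sagemaker_runtime is None or events is None:
        import boto3
        sagemaker_runtime = sagemaker_runtime or boto3.client("sagemaker-runtime")
        events = events or boto3.client("events")

    # With API Gateway proxy integration, the request body arrives as a string.
    txn = json.loads(event["body"])
    payload = ",".join(str(txn[name]) for name in FEATURES)

    response = sagemaker_runtime.invoke_endpoint(
        EndpointName="fraud-detector",  # hypothetical endpoint name
        ContentType="text/csv",
        Body=payload,
    )
    # Assumes the model responds with one score between 0 and 1 as text.
    score = float(response["Body"].read().decode("utf-8"))
    flagged = score >= FRAUD_THRESHOLD

    if flagged:
        # Publish an event so downstream rules can alert or start verification.
        events.put_events(Entries=[{
            "Source": "payments.fraud",  # hypothetical event source
            "DetailType": "SuspiciousTransaction",
            "Detail": json.dumps({"transaction_id": txn["transaction_id"], "score": score}),
        }])

    return {
        "statusCode": 200,
        "body": json.dumps({
            "transaction_id": txn["transaction_id"],
            "fraud_score": score,
            "requires_verification": flagged,
        }),
    }
```

A flagged response leaves the follow-up step, such as OTP confirmation, to the calling application or to a Step Functions workflow triggered by the event.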

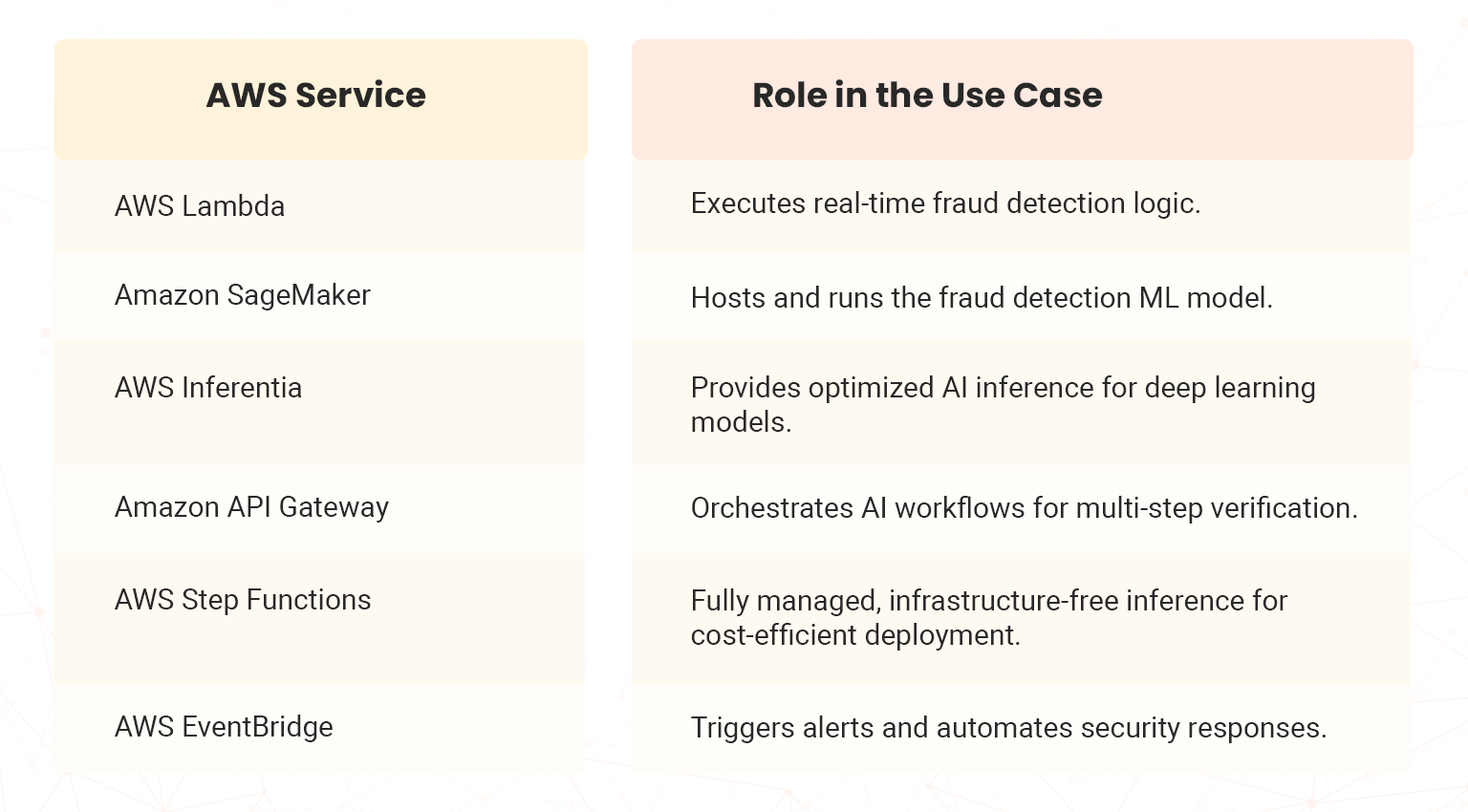

AWS Services Used

Key Benefits

- Seamless AI/ML Integration – Lambda easily connects with AWS AI/ML services for enhanced fraud detection.

- Auto-Scaling & Serverless – No need to provision servers, ensuring cost-efficient execution.

- Low Latency Predictions – Fast AI inference using SageMaker & Inferentia.

- Event-Driven Architecture – Real-time fraud alerts using EventBridge.

- Modular & Extensible – Additional AI models can be integrated for improved accuracy.

Combined with AI/ML services like SageMaker, Inferentia, and EventBridge, AWS Lambda helps financial companies achieve real-time fraud detection. This reduces financial risk while ensuring a seamless user experience.

How To Deploy AI Models on AWS Lambda?

AWS Lambda Layers enable efficient packaging and reuse of AI models and dependencies, provided the function and its layers fit within Lambda's 250 MB unzipped size limit. Amazon S3 provides secure storage for AI model artifacts, with easy access and version control. AWS Step Functions eases the orchestration of complex AI workflows by coordinating the Lambda functions involved.

For parallel execution, large AI models can be broken down into small modular Lambda functions, which improves performance and scalability. Lambda versioning and aliases reduce deployment risk by making model updates easy to roll back. Additionally, AWS CodePipeline automates deployments, so AI model updates flow through the same CI/CD pipeline as the rest of the application.
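When a model is kept in S3 rather than in a layer, a common pattern is to download it once per execution environment and reuse it across warm invocations. The sketch below assumes a hypothetical bucket and key and a trivial `read_bytes` deserializer; a real function would swap in its framework's loader.

```python
import os

_MODEL = None  # cached at module level, so it survives across warm invocations


def read_bytes(path):
    """Placeholder deserializer: a real function would parse the model file."""
    with open(path, "rb") as f:
        return f.read()


def load_model(s3=None, bucket="my-model-bucket", key="credit-risk/model.bin",
               local_path="/tmp/model.bin", deserialize=read_bytes):
    """Download the model from S3 on first use and reuse it afterwards.

    The bucket and key defaults are hypothetical. /tmp is the writable
    scratch directory inside a Lambda execution environment.
    """
    global _MODEL
    if _MODEL is not None:
        return _MODEL
    if not os.path.exists(local_path):
        if s3 is None:
            import boto3  # imported lazily so the caching logic is testable
            s3 = boto3.client("s3")
        s3.download_file(bucket, key, local_path)
    _MODEL = deserialize(local_path)
    return _MODEL
```

Calling `load_model()` at the top of the handler means only the first request in a new execution environment pays the download cost.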

Use Case

Business Problem

A fintech company wants to deploy an AI-powered credit risk assessment model on AWS Lambda to evaluate loan applications in real time without managing infrastructure.

Solution Overview

The AI model is deployed on AWS Lambda using efficient packaging, versioning, and automated CI/CD strategies.

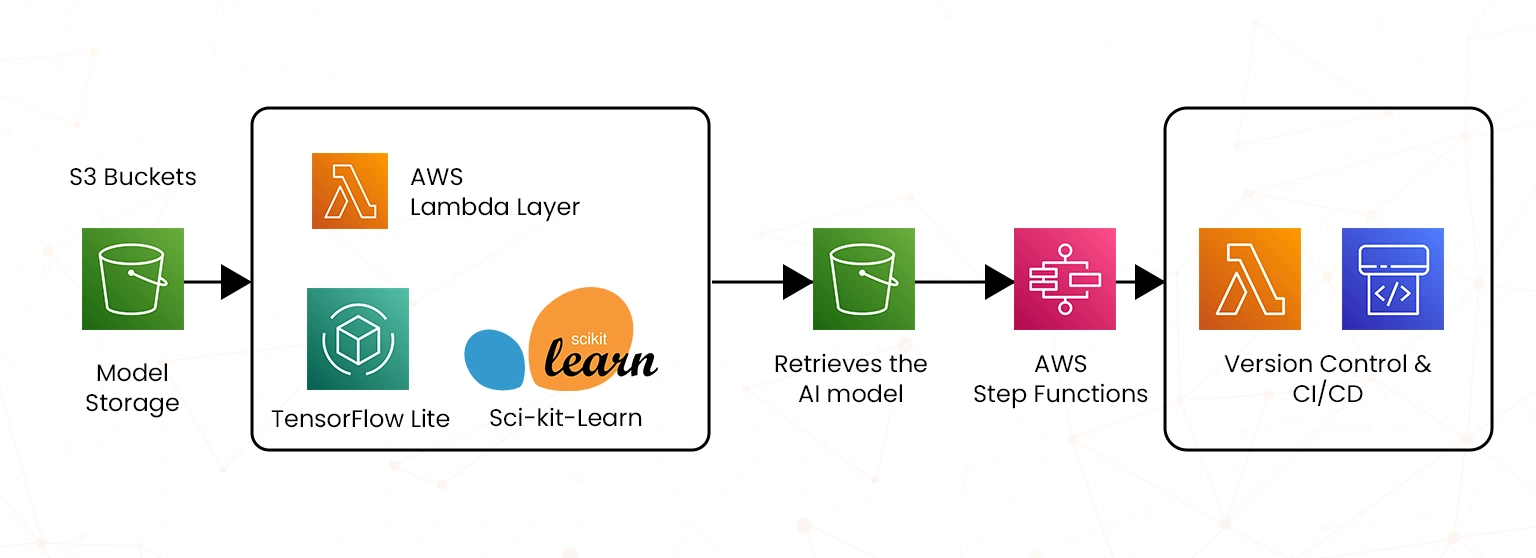

Architecture Flow

- Model Storage:

- The trained AI model is stored in Amazon S3 for easy retrieval and version control.

- Lambda Layers:

- The AI model and dependencies (e.g., TensorFlow Lite, scikit-learn) are packaged as an AWS Lambda Layer for reuse across multiple functions.

- Inference Execution:

- Lambda retrieves the AI model from S3 and executes the risk assessment.

- Workflow Management:

- AWS Step Functions orchestrate additional checks (e.g., transaction history analysis).

- Version Control & CI/CD:

- AWS Lambda Versioning & Aliases ensure smooth model updates.

- AWS CodePipeline automates the deployment of new model versions.
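The versioning and alias step can be illustrated with a gradual rollout: publish a new version, send a small share of traffic to it through the alias, then promote it once it looks healthy. The function below is a sketch of that idea; the alias name `live` and the 10% canary weight are assumptions, and `lambda_client` is expected to be a boto3 Lambda client (or a stand-in with the same methods).

```python
def start_canary(lambda_client, function_name, alias="live", canary_weight=0.1):
    """Publish the current code as a new version and route a slice of traffic to it."""
    new_version = lambda_client.publish_version(FunctionName=function_name)["Version"]
    stable = lambda_client.get_alias(FunctionName=function_name, Name=alias)["FunctionVersion"]
    lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias,
        FunctionVersion=stable,  # most traffic stays on the stable version
        RoutingConfig={"AdditionalVersionWeights": {new_version: canary_weight}},
    )
    return {"stable": stable, "canary": new_version, "canary_weight": canary_weight}


def promote(lambda_client, function_name, version, alias="live"):
    """Point the alias fully at the given version and clear weighted routing."""
    lambda_client.update_alias(
        FunctionName=function_name,
        Name=alias,
        FunctionVersion=version,
        RoutingConfig={"AdditionalVersionWeights": {}},
    )
```

In a CodePipeline setup, steps like these would typically run in a deploy stage, with a rollback being a call to `promote` using the previous stable version.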

Key Benefits

- Efficient packaging with Lambda Layers.

- Seamless model updates with versioning & aliases.

- Automated deployment for continuous improvement.

Inference Optimization with AWS Lambda

Several strategies can optimize AI inference performance on AWS Lambda. Provisioned concurrency reduces cold starts by keeping execution environments initialized, which minimizes latency. Memory should be allocated deliberately, because Lambda assigns CPU in proportion to the configured memory, so this one setting drives both cost and performance. For models that need hardware acceleration, AWS Inferentia and GPU instances provide it outside Lambda, with the function acting as the caller. Caching frequently requested inference results in Amazon ElastiCache reduces response times. AWS Step Functions can run multiple inference requests in parallel, and AWS Batch improves efficiency by grouping inference requests into batches.
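The memory and cost trade-off is easier to reason about with a small calculation. Lambda bills compute in GB-seconds (memory multiplied by duration) plus a per-request charge, so a larger memory setting can cost less if it shortens execution enough. The helper below is a sketch; the prices are passed in as parameters, and the figures in the usage note are illustrative placeholders, not current AWS rates.

```python
def lambda_cost(invocations, avg_duration_ms, memory_mb,
                price_per_gb_second, price_per_million_requests):
    """Estimate Lambda cost from billed GB-seconds plus the request charge."""
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_charge = (invocations / 1_000_000) * price_per_million_requests
    return gb_seconds * price_per_gb_second + request_charge


def compare_memory_settings(invocations, settings, price_per_gb_second,
                            price_per_million_requests):
    """Return {memory_mb: estimated cost} for measured (memory_mb, duration_ms) pairs."""
    return {
        memory_mb: lambda_cost(invocations, duration_ms, memory_mb,
                               price_per_gb_second, price_per_million_requests)
        for memory_mb, duration_ms in settings
    }
```

For example, with one million invocations, a function measured at 400 ms with 1024 MB consumes 400,000 GB-seconds, while the same function at 180 ms with 2048 MB consumes 360,000 GB-seconds, so the larger setting is both faster and cheaper in that hypothetical case. The durations have to come from real measurements of the model in question.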

Use Case

Business Problem

A healthcare provider uses an AI-based medical image analysis system and needs low-latency inference for real-time diagnosis.

Solution Overview

AWS Lambda is optimized for fast AI inference with caching, parallel execution, and resource tuning.

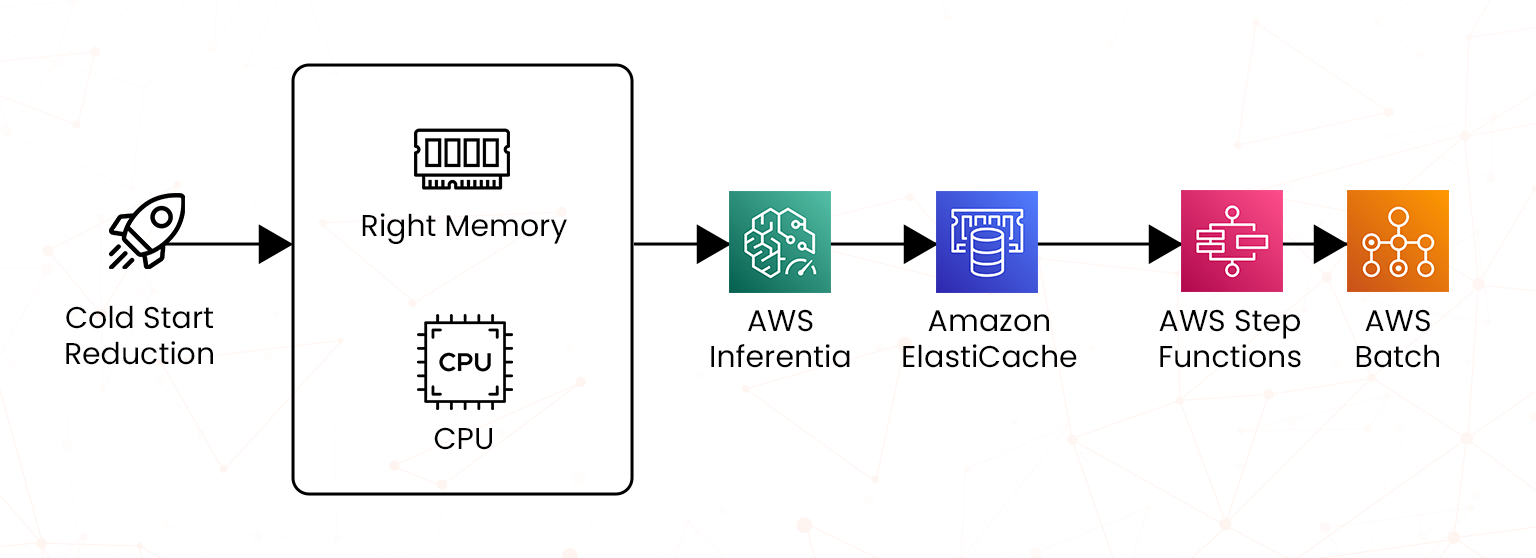

Architecture Flow

- Cold Start Reduction:

- Provisioned Concurrency in Lambda ensures the functions are always ready to process requests.

- Memory & CPU Optimization:

- Lambda functions are tuned with the right memory setting, which also determines the CPU allocation, for optimal performance.

- GPU Acceleration:

- When needed, AI models run on AWS Inferentia or GPU-based instances, which Lambda invokes rather than hosts.

- Caching Strategy:

- Frequently accessed AI results are stored in Amazon ElastiCache to reduce redundant processing.

- Parallel Execution & Batch Processing:

- AWS Step Functions enable concurrent processing of multiple image files.

- AWS Batch groups inference requests to improve efficiency.
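The caching step above follows the cache-aside pattern: look up a key derived from the input, and only run the model on a miss. The sketch below assumes a cache client with Redis-style `get` and `set(key, value, ex=ttl)` methods, which is what a Redis client pointed at ElastiCache would provide; the key format and the one-hour TTL are arbitrary choices for illustration.

```python
import hashlib
import json


def cache_key(model_version, payload_bytes):
    """Derive a stable key from the model version and the exact input bytes."""
    digest = hashlib.sha256(payload_bytes).hexdigest()
    return f"inference:{model_version}:{digest}"


def cached_inference(cache, model_version, payload_bytes, run_model, ttl_seconds=3600):
    """Return (result, was_cache_hit), running the model only on a cache miss."""
    key = cache_key(model_version, payload_bytes)
    hit = cache.get(key)
    if hit is not None:
        return json.loads(hit), True
    result = run_model(payload_bytes)
    # Store as JSON with an expiry so stale results age out on their own.
    cache.set(key, json.dumps(result), ex=ttl_seconds)
    return result, False
```

Including the model version in the key means a newly deployed model never serves results computed by its predecessor.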

Key Benefits

- Low latency with cold start reduction.

- Optimized performance via caching & resource tuning.

- Scalable parallel execution for high-volume workloads.

AWS Lambda for Deep Learning Models

AWS Lambda supports lightweight deep learning frameworks for AI applications, enabling efficient deployment and execution. Understanding how deep learning models work is also part of the AWS Certified AI Practitioner Certification (AIF-C01) exam. With Lambda, lightweight deep learning models can be deployed using TensorFlow Lite or ONNX. Amazon S3 stores larger deep learning models and supports quick retrieval and processing.

Amazon DynamoDB can store AI inference results durably. AWS Glue integrates with Lambda to build serverless AI data pipelines. Furthermore, Lambda’s event-driven capabilities support real-time natural language processing (NLP) and vision-based AI applications, making it a strong fit for AI-driven features.

Use Case

Business Problem

An e-commerce platform needs an AI-driven product recommendation system using deep learning.

Solution Overview

AWS Lambda serves lightweight deep-learning models for personalized recommendations.

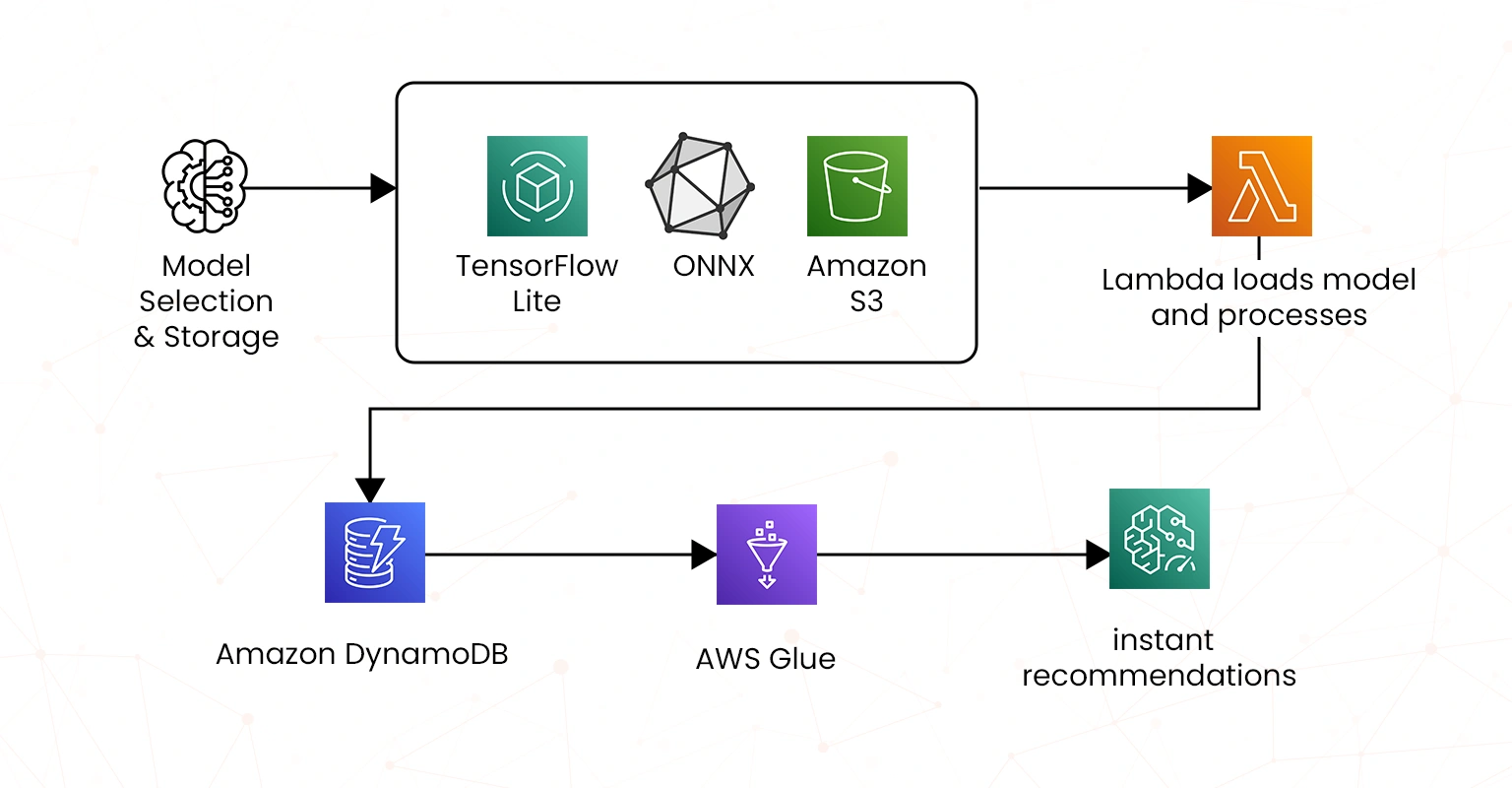

Architecture Flow

- Model Selection & Storage:

- Deep learning models are converted into TensorFlow Lite or ONNX format for lightweight execution.

- Models are stored in Amazon S3 for quick access.

- AI Inference Execution:

- Lambda loads the model and processes user browsing behavior for recommendations.

- Database Integration:

- Predicted recommendations are stored in Amazon DynamoDB.

- Data Pipeline Management:

- AWS Glue integrates with Lambda to process and clean historical user behavior data.

- Real-Time Processing:

- Lambda analyzes real-time user activity for instant recommendations.
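As an illustration of the inference step, the sketch below ranks products using an ONNX model through ONNX Runtime. It assumes a model with a single input and a first output shaped as one row of scores, one per product; that layout, the `product_ids` list, and the function names are assumptions for this example rather than details from the architecture above.

```python
def load_session(model_path):
    """Create an ONNX Runtime session (requires the onnxruntime package)."""
    import onnxruntime  # imported lazily; typically shipped in a layer or container image
    return onnxruntime.InferenceSession(model_path, providers=["CPUExecutionProvider"])


def recommend(session, features, product_ids, top_k=3):
    """Return the top_k product IDs ranked by model score.

    `features` should be a batch of one user feature vector (a NumPy float32
    array in practice). Assumes output[0] has shape (1, len(product_ids)).
    """
    input_name = session.get_inputs()[0].name
    outputs = session.run(None, {input_name: features})
    scores = list(outputs[0][0])
    ranked = sorted(zip(product_ids, scores), key=lambda pair: pair[1], reverse=True)
    return [product_id for product_id, _ in ranked[:top_k]]
```

In a handler, the session would usually be created once at module load and reused, and the returned IDs written to DynamoDB as described in the flow.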

Key Benefits

- Lightweight deep learning execution with TensorFlow Lite & ONNX.

- Seamless storage & retrieval via Amazon S3 & DynamoDB.

- Real-time AI-driven personalization for better user experience.

Scalable AI Workloads on AWS

AWS Lambda lets AI workloads scale dynamically with demand by combining several AWS services and best practices. It scales automatically with the incoming request load, maintaining performance in real time. For event-driven AI processing, Lambda can be integrated with Amazon Kinesis and Amazon SNS to handle real-time AI events.

It also supports batch inference processing by using AWS Batch and Step Functions to manage large-scale AI inference jobs. For high availability, AI inference functions can be deployed across multiple AWS regions. Additionally, Amazon CloudWatch enhances performance monitoring by tracking AI workload metrics so that execution can be optimized. Finally, AWS IAM roles and VPC configurations keep AI inference secure, enforcing security best practices and protecting sensitive data.

Use Case

Business Problem

A social media platform wants to implement real-time content moderation at scale using AI.

Solution Overview

AWS Lambda enables scalable AI moderation using event-driven architectures and multi-region deployment.

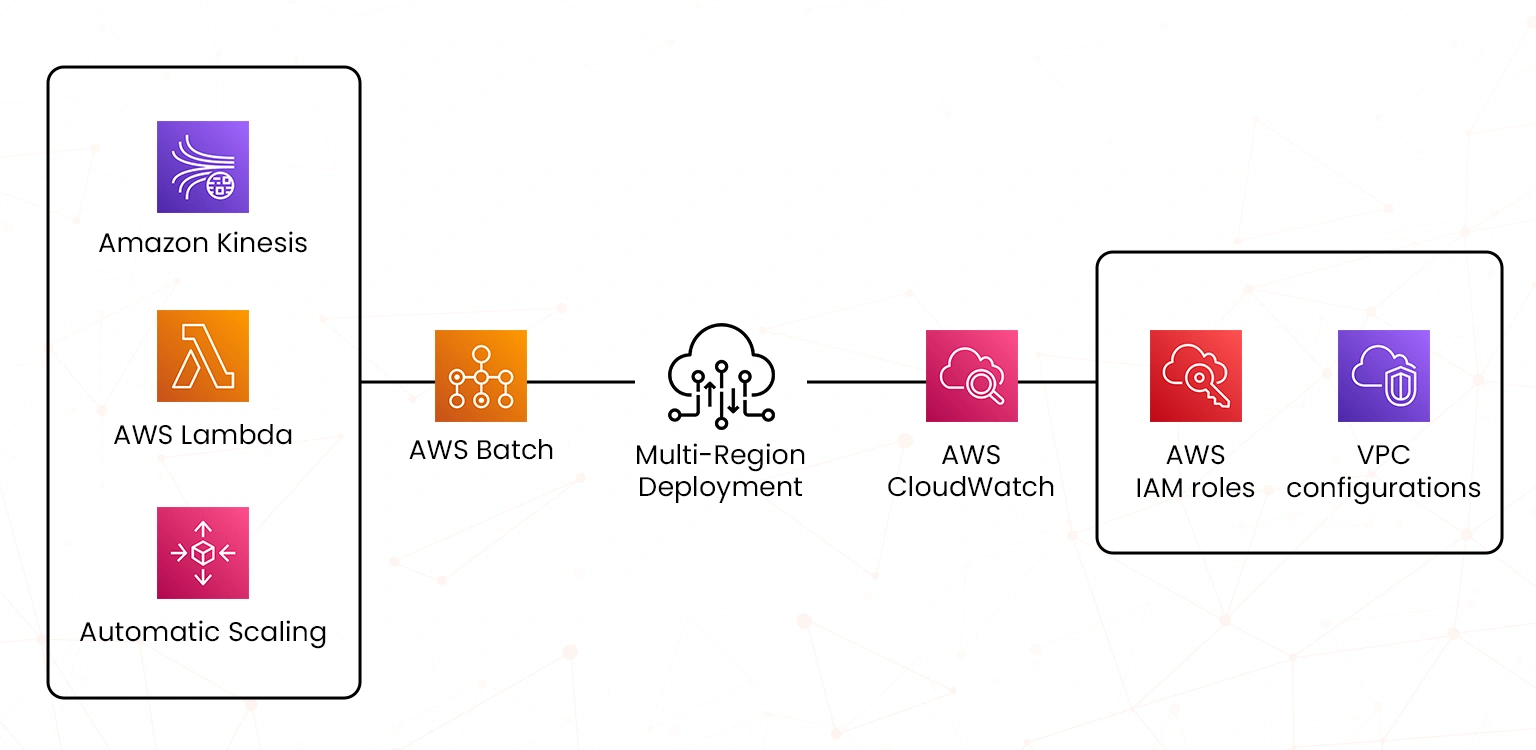

Architecture Flow

- Event-Driven AI Processing:

- Amazon Kinesis streams user-generated content (text, images, videos) for moderation.

- AWS Lambda processes the streamed data in real time.

- Lambda scales automatically based on incoming content volume.

- Batch Inference Processing:

- AWS Batch groups moderation tasks to optimize resource use.

- Multi-Region Deployment:

- AI inference models are deployed across multiple AWS regions for high availability.

- Monitoring & Security:

- Amazon CloudWatch tracks AI performance and execution times.

- AWS IAM roles & VPC configurations secure AI inference.
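The event-driven part of this flow can be sketched as a Lambda function consuming a Kinesis batch. Kinesis delivers each record's data base64-encoded, and when `ReportBatchItemFailures` is enabled on the event source mapping, the function can report which records failed. The payload shape (`post_id`, `text`) and the keyword-based `moderate` function are stand-ins for a real moderation model.

```python
import base64
import json

BLOCKED_WORDS = {"spam", "scam"}  # toy rule standing in for an AI model


def moderate(text):
    """Hypothetical placeholder for model inference on a piece of content."""
    words = text.lower().split()
    return "rejected" if any(word in BLOCKED_WORDS for word in words) else "approved"


def process_records(event, moderate_fn=moderate):
    """Decode each Kinesis record and moderate it; collect failures separately."""
    decisions, failures = [], []
    for record in event.get("Records", []):
        sequence_number = record["kinesis"]["sequenceNumber"]
        try:
            payload = json.loads(base64.b64decode(record["kinesis"]["data"]))
            decisions.append({
                "post_id": payload["post_id"],
                "decision": moderate_fn(payload["text"]),
            })
        except (ValueError, KeyError):
            # Malformed record: report it instead of failing the whole batch.
            failures.append({"itemIdentifier": sequence_number})
    return decisions, failures


def handler(event, context):
    decisions, failures = process_records(event)
    print(json.dumps(decisions))  # in practice, persist or publish the decisions
    # Partial batch response: assumes ReportBatchItemFailures is enabled.
    return {"batchItemFailures": failures}
```

As content volume grows, Lambda runs more concurrent invocations across the stream's shards, so the handler itself needs no scaling logic.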

Key Benefits

- Highly scalable AI moderation with automatic scaling.

- Event-driven inference for real-time content analysis.

- Secure & reliable AI execution across multiple regions.

Best Practices for Deploying AI on AWS Lambda

Familiarity with these best practices also helps when preparing for the AWS Certified AI Practitioner Certification (AIF-C01) exam. To maximize AI inference efficiency and model execution on AWS Lambda, follow the practices below.

- Model compression techniques such as quantization reduce the AI model’s size, which speeds up loading and inference.

- Selecting an optimal execution environment is crucial; runtimes such as Python and Node.js offer suitable options for various AI capabilities.

- Real-time performance monitoring with Amazon CloudWatch and AWS X-Ray helps in debugging issues and tracking system performance.

- To minimize cold starts, provisioned concurrency keeps AI models loaded and ready to serve requests.

- Leveraging Edge AI with AWS Lambda@Edge allows models to be deployed closer to end users, increasing availability and reducing latency.
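To make the quantization point concrete, the sketch below applies symmetric 8-bit quantization to a list of weights in plain Python. It is a teaching example under simple assumptions (one scale for the whole tensor, no calibration data); frameworks such as TensorFlow Lite and ONNX Runtime ship their own quantization tooling, which is what a real deployment would use.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 or 1.0  # fall back to 1.0 when all weights are zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]
```

Each weight now fits in one byte instead of four for a 32-bit float, at the price of a rounding error of at most half the scale per weight.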

Conclusion

AWS Lambda is well suited to AI inference and model execution. For those preparing for the AWS Certified AI Practitioner Certification (AIF-C01), becoming proficient with AWS Lambda can significantly improve how they approach AI deployment. We provide a package of practice tests, video courses, hands-on labs, and sandboxes tailored to our learners’ requirements to help them gain the appropriate skills and knowledge. With that foundation, developers can use Lambda’s serverless architecture to build efficient, intuitive, and intelligent real-time AI applications.

Understanding and capitalizing on AI-driven innovation in the cloud empowers AI practitioners to design robust, scalable, and cost-effective AI applications. Talk to our experts in case of queries!
