In this blog post, we will guide you through the process of earning the NVIDIA-Certified Associate: Generative AI and LLMs (NCA-GENL) certification. This certification is a valuable credential for professionals aiming to demonstrate their expertise in the rapidly evolving fields of generative artificial intelligence and large language models.

Whether you’re looking to advance your career, enhance your skills, or gain a competitive edge in the AI industry, this guide will provide you with all the information you need to successfully achieve the NCA Generative AI LLMs (NCA-GENL) certification.

Our NVIDIA-Certified Associate Generative AI LLMs study guide provides insights on:

- How to pass the NVIDIA GenAI LLMs exam on the first try?

- What are the topics expected in the NVIDIA GenAI LLMs certification exam?

- How difficult is it to pass the NVIDIA GenAI LLMs exam?

Let’s dive in!

All about NVIDIA-Certified Associate: Generative AI LLMs (NCA-GENL) certification

This NVIDIA certification exam is an entry-level credential designed to assess your foundational skills in generative AI and LLMs, with a focus on NVIDIA solutions.

To pass the NCA Generative AI LLMs (NCA-GENL) exam, you must focus on the following key topics:

- Basic concepts of machine learning and neural networks

- Engineering effective prompts

- Alignment strategies

- Data analysis and visualization techniques

- Experimentation methodologies

- Preprocessing data and feature engineering

- Designing experiments

- Software development principles

- Utilization of Python libraries for LLMs

- Integration and deployment of LLMs

By mastering these topics, you’ll be well-prepared to excel in the NVIDIA Certified Associate exam.

Let’s explore the NCA Generative AI LLMs Exam Syllabus in detail:

Advanced Topics: A large portion of the NVIDIA-Certified Associate Generative AI LLMs exam questions covers advanced topics, including cuDF DataFrames, GPU-accelerated machine learning with XGBoost, graph analysis with cuGraph, and RAPIDS data science pipelines.

Focus on the following topics while preparing for the NVIDIA Certified Associate in Generative AI and LLMs exam:

- NVIDIA Hardware (GPUs)

- NVIDIA Platforms (NeMo, Jetson)

- Seminal Research Papers (e.g., “Attention Is All You Need”, Word2Vec)

- Machine Learning Fundamentals

- Data Science Principles

- Probability Theory

- Natural Language Processing (NLP)

- Transformers and LSTM Networks

- Activation Functions

- Gradient Descent and Related Concepts
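Two items from the list above, activation functions and gradient descent, are easier to remember with a small worked example. The following is a minimal sketch in plain Python, not exam material: the function names, the learning rate, and the toy loss are assumptions chosen for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z: float) -> float:
    """Rectified linear unit: passes positives through, zeroes out negatives."""
    return max(0.0, z)


def gradient_descent(grad, w0: float, lr: float = 0.1, steps: int = 100) -> float:
    """Repeatedly step against the gradient, starting from w0."""
    w = w0
    for _ in range(steps):
        w -= lr * grad(w)
    return w


# Toy loss (an assumption for illustration): L(w) = (w - 3)^2.
# Its gradient is 2 * (w - 3), so the minimum sits at w = 3.
w_star = gradient_descent(lambda w: 2.0 * (w - 3.0), w0=0.0)
print(w_star)
```

With a learning rate of 0.1, each step shrinks the distance to the minimum by a constant factor, which is why the loop converges. A much larger learning rate would overshoot and diverge on the same loss.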

Core AI Principles and NVIDIA-Specific Knowledge: The remaining portion of the exam focuses on fundamental AI concepts, including neural network basics, NVIDIA’s infrastructure and AI development services, memory mapping techniques for machine learning, and effective prompting in AI applications.
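To make "effective prompting" concrete, here is a minimal sketch of a few-shot prompt builder. It assumes a sentiment classification task; the function name `build_few_shot_prompt`, the `Review:`/`Sentiment:` labels, and the sample reviews are all hypothetical and not taken from any NVIDIA material.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and the new query into one prompt."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # End with an open slot so the model completes the label for the new input.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```

The design idea is that the examples show the model the expected output format, so the final open `Sentiment:` line is likely to be completed with a single label.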

In-Depth Focus: The questions on AI model quantization and on applying the transformer model are particularly challenging, so give these areas extra study time.
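As a refresher on what quantization does, the sketch below shows symmetric per-tensor int8 quantization in plain Python. This is one common scheme among several, and the function names and sample weights are assumptions for illustration; production toolchains add calibration and per-channel scales.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding to the nearest integer bounds the error by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The trade-off to remember is that each weight shrinks from 32 bits to 8, at the cost of a rounding error of at most half the scale per value.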

NVIDIA Certified Associate Generative AI LLMs Exam Technical Content Coverage

The NVIDIA Certified Associate in Generative AI and LLMs exam assesses your proficiency in these areas:

- Expect about 10% of the exam questions to cover general machine learning and deep learning concepts, such as support vector machines (SVM), exploratory data analysis (EDA), and activation and loss functions.

- Another 10% covers the transformer architecture, including encoding, decoding, and attention mechanisms.

- Approximately 40% of the exam syllabus centers on working with models in the natural language processing (NLP) and large language model (LLM) space. This includes questions on text normalization techniques (e.g., stemming and lemmatization), embedding mechanics (e.g., WordNet vs. word2vec), Python libraries (e.g., spaCy), NLP evaluation frameworks (e.g., GLUE), and interoperability standards (e.g., ONNX). It is worth spending more of your study time on this area.

- The remaining 40% focuses on the NVIDIA stack: customization, TensorRT, Triton Inference Server, and optimization techniques for GPUs, CPUs, and memory. A few questions relate directly to products like DGX, AI Enterprise, and NeMo, with broader questions on the practical use of cuDF, cuML, and the NGC catalog.
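The attention mechanism mentioned in the transformer bullet above can be sketched in a few lines. The following is a minimal, single-head version of scaled dot-product attention in plain Python, with no masking and no learned projections; the toy token vectors are assumptions chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy token vectors used as queries, keys, and values (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1, and a token attends more strongly to tokens whose key vectors point in a similar direction to its query.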

NVIDIA doesn’t disclose the exact subtopics covered in the exam, but it states that the certification is intended for associate-level developers who possess foundational knowledge of generative AI and LLMs.

How to start studying for the NVIDIA Certified Associate: Generative AI LLMs (NCA-GENL) Exam?

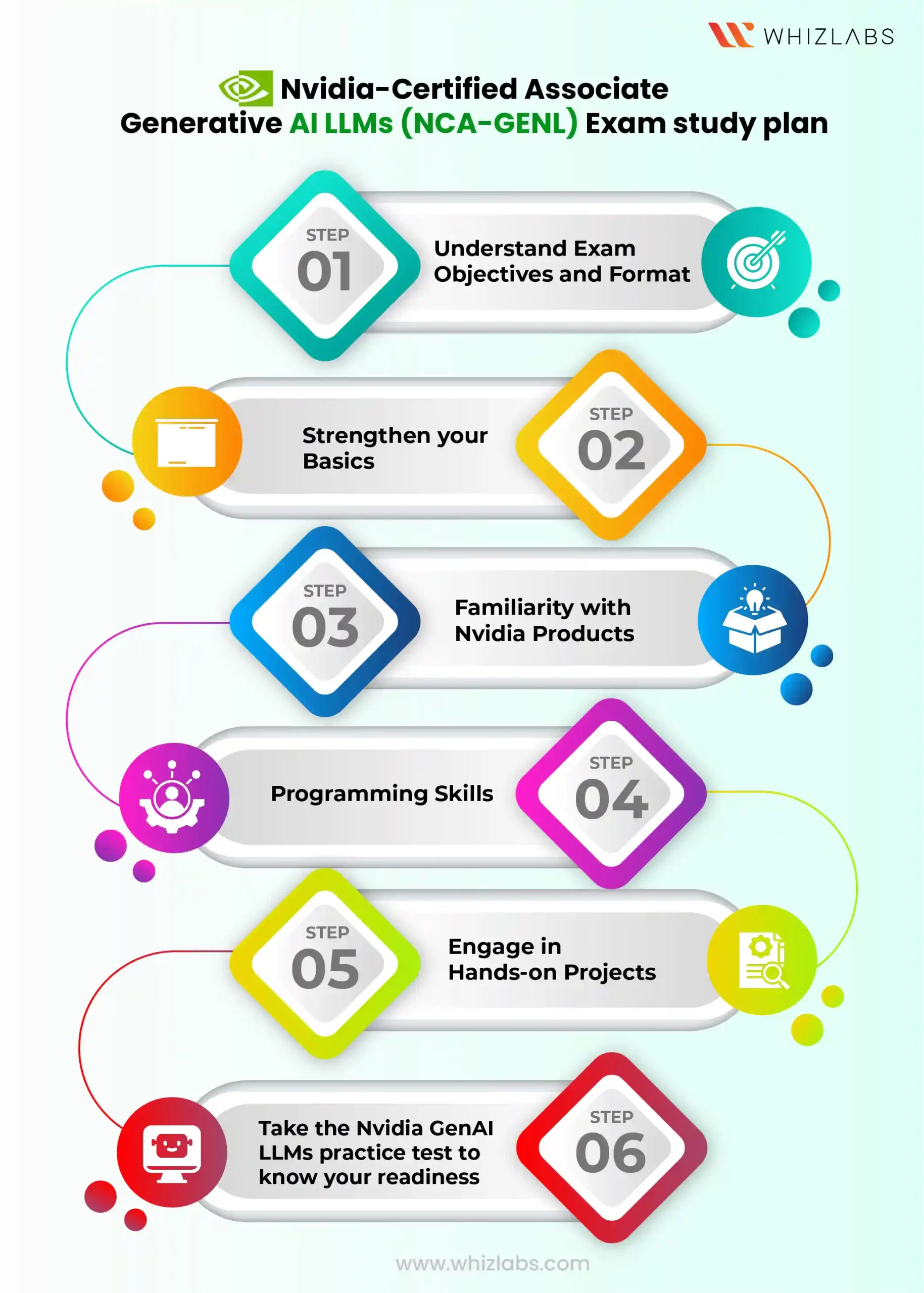

To prepare for the NVIDIA Certified Associate: Generative AI LLMs (NCA-GENL) exam and pass it on your first attempt, follow this step-by-step guide:

Step 1: Understand exam objectives and format

The first step in preparing for the NVIDIA-Certified Associate: Generative AI LLMs (NCA-GENL) exam is to thoroughly understand its objectives and format. This foundational knowledge will help you tailor your study plan and focus on the key areas necessary for success.

Visit the official NVIDIA website to access detailed exam objectives and guidelines.

Here is the NVIDIA-Certified Associate: Generative AI LLMs (NCA-GENL) Exam Format:

| Item | Details |
| --- | --- |
| Duration | One hour |
| Price | $135 |
| Certification level | Associate |
| Subject | Generative AI and large language models |
| Number of questions | 50 |
| Prerequisites | Basic understanding of generative AI and large language models |
| Language | English |

The NVIDIA Gen AI and LLMs certification exam has a time limit of 60 minutes for 50 questions. The questions cover the technical aspects of generative AI and LLMs, their implementation, and NVIDIA solutions.
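The 60-minute limit and 50-question count translate into a simple pacing budget. The short sketch below works out the arithmetic; the optional review buffer is an assumption for illustration, not an official recommendation.

```python
def seconds_per_question(total_minutes, questions, review_buffer_minutes=0):
    """Average time available per question after reserving a review buffer."""
    return (total_minutes - review_buffer_minutes) * 60 / questions


no_buffer = seconds_per_question(60, 50)
with_buffer = seconds_per_question(60, 50, review_buffer_minutes=5)
print(no_buffer, with_buffer)  # 72.0 66.0
```

That is a little over a minute per question, so it usually pays to flag long questions and return to them rather than stall.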

Familiarize yourself with the format and objectives above before moving on to the other steps in your preparation.

Based on the exam objectives and your current knowledge level, outline a study plan that covers all necessary topics.

Step 2: Strengthen your basics

To start your NVIDIA Generative AI LLMs journey, it’s essential to establish a strong foundation. Begin by grasping the fundamental concepts and techniques that machine learning algorithms are built on.

Resources like online courses, textbooks, and interactive projects can aid in this foundational understanding.

NVIDIA offers various introductory and deep-dive courses. Some are free, while the instructor-led ones can be rather pricey.

Here are some fundamental and advanced courses from various providers that are worth exploring:

- Foundational Deep Learning: Beginners and individuals seeking to refresh their knowledge can explore courses such as “Basics of Deep Learning” on Udemy and “Deep Learning Fundamentals” by Lightning AI, providing a robust understanding of deep learning principles and their applications across diverse industries.

- Accelerated Data Science: Delve into courses like “AI Application Boost with Nvidia RAPIDS Acceleration” to learn how to optimize data science tasks with GPU acceleration, fostering accelerated data processing and analysis.

- Natural Language Processing Proficiency: You can enhance your NLP skills with the comprehensive “Hugging Face NLP Course,” offering practical insights into building applications using Transformer models. Additionally, gain a practical understanding of BERT through resources like the “BERT NLP Presentation” on Scribd.

- Customizing Large Language Models: Advanced learners can explore the “Large Language Model Customization Course” on Udemy, focusing on tailoring large language models to specific requirements and applications.

- Building Retrieval-Augmented Generative Models: Dive into the intricacies of creating complex AI agents with the “Building Agentic RAG with LlamaIndex” course on DeepLearning.AI, offering valuable insights into retrieval-augmented generation techniques.

You can also read some e-books such as:

Step 2 (continued): Familiarity with NVIDIA Products

Mastering the Nvidia Generative AI LLMs certification requires a comprehensive grasp of a range of Nvidia products and solutions crucial to AI and machine learning. These include:

- NeMo: An open-source toolkit for conversational AI, facilitating the creation of state-of-the-art models.

- cuOpt: A GPU-accelerated optimization engine for complex decision problems such as vehicle routing and logistics.

- TensorRT: A platform for high-performance deep learning inference.

- Nvidia Cloud: Cloud-based solutions offering flexible AI and data science computing resources.

- Nvidia DGX: A series of high-performance computing systems renowned for scaling AI training and inference workloads.

This exam requires a complete blend of aptitude and application, demanding an extensive exploration of modern AI concepts. It underscores the necessity not just to grasp acquired knowledge but also to effectively implement it in real-world scenarios.

Step 3: Programming skills

The NVIDIA Generative AI LLMs exam typically requires coding skills, especially in programming languages commonly used in machine learning and artificial intelligence, such as Python.

While there are some user-friendly tools and platforms that allow users to create generative AI models with minimal coding, a deeper understanding of programming concepts is often necessary to develop and customize more complex models.

In particular, knowledge of libraries and frameworks like TensorFlow, PyTorch, or Keras is valuable for implementing generative AI algorithms. Additionally, proficiency in handling data, understanding algorithms, and debugging code is essential for effectively working with generative AI techniques.

Step 4: Engage in Hands-on Projects

Theory alone can only take you so far. It’s essential to put your knowledge into practice by immersing yourself in hands-on projects and challenges.

You should know how to create generative AI models that can generate art, synthesize images, or produce music. Experiment with different datasets and fine-tune models in Whizlabs hands-on labs to gain insight into their behavior and limitations.

Step 5: Take the Nvidia GenAI LLMs practice test to know your readiness

Prepare yourself to excel in the NVIDIA-Certified Associate Generative AI LLMs exam with our comprehensive practice tests. These tests are designed to assess your knowledge and skills in the field of generative AI, covering all essential topics and concepts necessary for passing the certification exam with confidence.

Once you score above 80% on the practice tests, you can sit for the real exam with confidence.

How hard is it to pass the NVIDIA Certified Associate Generative AI and LLMs Certification Exam?

The difficulty level of passing the NVIDIA-Certified Associate: Generative AI and LLMs Certification (NCA-GENL) can be moderate to challenging for individuals who are new to the concepts of generative AI and large language models (LLMs).

You must have an understanding of a wide range of topics, including fundamentals of deep learning, natural language processing (NLP) applications, model customization, and retrieval-augmented generation.

Moreover, you need dedicated study and hands-on experience with AI technologies, as well as familiarity with NVIDIA solutions and tools, to pass this exam with flying colors.

Overall, while the exam may present challenges, with thorough preparation, practice, and a clear understanding of the exam objectives, candidates can overcome these challenges and pass the certification successfully.

FAQs

1. What is the NVIDIA Certified Associate in Generative AI and LLMs Exam?

The NVIDIA Certified Associate in Generative AI and LLMs Exam is a certification designed to assess your knowledge and skills in generative artificial intelligence and large language models, along with proficiency in NVIDIA’s AI tools and frameworks.

2. How much time does it take to prepare for the NCA Generative AI LLMs exam?

It varies depending on your existing knowledge and skills. However, it is recommended to spend 2-3 hours a week to get a complete understanding of the exam concepts.

3. Who should take this NVIDIA Associate Gen AI exam?

This exam is ideal for AI professionals, data scientists, machine learning engineers, and anyone looking to validate their expertise in generative AI and LLMs, as well as their ability to use NVIDIA’s AI technologies effectively.

4. What topics are covered in the exam?

The exam covers a range of topics, including:

- General deep learning concepts (10%)

- Transformer architecture (10%)

- Natural language processing (NLP) and large language models (LLMs) (40%)

- NVIDIA-specific tools and optimization techniques (40%)
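One way to use these approximate weights is to split your study time in the same proportions. The sketch below assumes a hypothetical budget of 20 study hours; the function name and the budget are illustrative, and the weights are the ones listed above.

```python
weights = {
    "General deep learning concepts": 0.10,
    "Transformer architecture": 0.10,
    "NLP and LLMs": 0.40,
    "NVIDIA-specific tools and optimization": 0.40,
}


def split_study_hours(total_hours, weights):
    """Allocate study hours to each topic in proportion to its exam weight."""
    return {topic: total_hours * share for topic, share in weights.items()}


plan = split_study_hours(20, weights)  # 20 hours is a hypothetical budget
for topic, hours in plan.items():
    print(f"{topic}: {hours:.1f} h")
```

Treat the result as a starting point and shift hours toward whichever areas your practice tests show to be weakest.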

5. What is the duration of the NVIDIA Certified Associate in Generative AI and LLMs exam?

The duration of the NVIDIA Associate in Generative AI & LLMs exam is 60 minutes.

6. What is the exam cost of NVIDIA Certified Associate in Generative AI and LLMs exam?

The exam cost is USD 135.

Sample Practice Test Questions

Domain: Experimentation

Question 1: You are comparing two LLMs (large language models) using the BLEU (Bilingual Evaluation Understudy) metric and find that one model has a higher BLEU score than the other. What does this indicate?

A. The model has a broader vocabulary.

B. The model shows a better understanding of complex sentence structures.

C. The model produces text that is very similar to the reference text it’s supposed to match.

D. The model has higher computational efficiency.

Correct Answer: C

Explanation: BLEU (Bilingual Evaluation Understudy) is a metric for automatically evaluating machine-translated text. The BLEU score is a number between zero and one that measures the similarity of the machine-translated text to a set of reference translations. A higher BLEU score indicates better translation quality.

Option A is incorrect. While a diverse vocabulary can contribute to a higher BLEU score, it’s not the only factor at play. BLEU primarily evaluates the similarity between generated text and reference texts based on n-grams and does not directly measure the breadth of vocabulary.

Option B is incorrect. While BLEU does consider the structure of sentences, it primarily focuses on the matching of n-grams between the generated text and reference texts.

Option C is correct. A higher BLEU score typically indicates that the generated text closely resembles the reference texts it’s supposed to match. BLEU calculates precision by comparing n-grams between the generated text and reference texts, thereby quantifying the similarity.

Option D is incorrect. Computational efficiency refers to the speed and resource utilization of a language model, which BLEU does not measure. BLEU focuses solely on evaluating the quality of generated text compared to reference texts.
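The n-gram matching described in this explanation can be sketched as follows. This minimal example computes only the clipped (modified) n-gram precision that BLEU is built on. The full metric also combines several n-gram orders with a geometric mean and applies a brevity penalty, both of which are omitted here. The sentences and function names are assumptions for illustration.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-token windows in the sequence."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: a candidate n-gram counts at most as often as it occurs in the reference."""
    cand_counts = Counter(ngrams(candidate, n))
    ref_counts = Counter(ngrams(reference, n))
    if not cand_counts:
        return 0.0
    clipped = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


reference = "the cat is on the mat".split()
candidate = "the cat sat on the mat".split()

p1 = modified_precision(candidate, reference, 1)  # 5 of 6 unigrams match
p2 = modified_precision(candidate, reference, 2)  # 3 of 5 bigrams match
print(p1, p2)
```

The more n-grams the generated text shares with the reference, the higher these precisions, which is why option C describes what a higher BLEU score indicates.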

Reference: To learn more about BLEU score, refer to the following link

Domain: Software Development

Question 2: What additional complexity does a retrieval augmented generation (RAG) architecture introduce to an LLM-enabled application?

A. It requires extensive logging and alerting mechanisms.

B. It involves rate-limiting queries and restricting query budgets.

C. It allows the LLM to access and generate responses from sensitive documents.

D. It necessitates careful handling of authorization and document permissions.

Correct Answer: D

Explanation: A retrieval augmented generation (RAG) architecture introduces additional complexity by requiring careful handling of authorization and document permissions to ensure compliance with security and privacy requirements.

Option A is incorrect. While extensive logging and alerting mechanisms are generally important for any application handling sensitive data or interacting with users, they are not unique to RAG architectures.

Option B is incorrect. Rate-limiting queries and restricting query budgets are common practices in managing resources and preventing abuse in systems that interact with external APIs or databases. While these measures may be relevant for optimizing performance and ensuring fairness in an LLM-enabled application, they do not specifically relate to the additional complexity introduced by a retrieval augmented generation (RAG) architecture.

Option C is incorrect. While accessing and generating responses from sensitive documents can indeed raise security and privacy concerns, it does not address the unique complexities introduced by the architecture itself.

Option D is correct. In RAG architectures, the LLM may retrieve information from external sources, such as databases or document repositories, to generate responses. This necessitates careful consideration of authorization mechanisms and document permissions to ensure that the LLM accesses only authorized information and adheres to privacy and security requirements.
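A rough sketch of what handling document permissions can look like in the retrieval step is shown below. It assumes a hypothetical group-based access model and a naive keyword-overlap ranking. The `Document` class, `retrieve` function, and sample data are invented for illustration and are not taken from any NVIDIA library.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    allowed_groups: set = field(default_factory=set)


def retrieve(query, documents, user_groups, top_k=2):
    """Return the top_k documents the user may read, ranked by naive keyword overlap."""
    query_terms = set(query.lower().split())
    # Enforce permissions before ranking so unauthorized text never reaches the prompt.
    visible = [d for d in documents if d.allowed_groups & user_groups]
    scored = [(len(query_terms & set(d.text.lower().split())), d) for d in visible]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]


docs = [
    Document("handbook", "vacation policy for all employees", {"staff", "hr"}),
    Document("salaries", "salary bands and vacation payout policy", {"hr"}),
]

staff_hits = retrieve("vacation policy", docs, user_groups={"staff"})
hr_hits = retrieve("vacation policy", docs, user_groups={"hr"})
print([d.doc_id for d in staff_hits], [d.doc_id for d in hr_hits])
```

Filtering before ranking is the key design choice in this sketch: the model only ever sees documents the requesting user is entitled to read, so it cannot leak restricted content in its answer.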

Reference: To learn more about this refer to the following link –

https://developer.nvidia.com/blog/best-practices-for-securing-llm-enabled-applications/

Wrapping Up

I hope this NVIDIA-Certified Associate Generative AI LLMs study guide provides you with the necessary information to prepare for the exam.

Preparing for the Nvidia GenAI LLMs exam can feel daunting and time-consuming, especially when trying to gather relevant resources and structure study materials.

Don’t worry! Whizlabs is here to alleviate those challenges. Our platform offers reliable and updated study materials such as practice tests to help in your preparation journey.

We also have guided hands-on labs and cloud sandboxes, allowing you to practice and apply your knowledge effectively.
