A Google Cloud Certified Professional Cloud Architect enables organisations to leverage Google Cloud technologies. They possess a thorough understanding of Google Cloud and its architecture, and are capable of designing and developing robust, secure, scalable, and dynamic solutions to drive business objectives.

What does a professional cloud architect do?

A Google Cloud Professional Cloud Architect understands the cloud environment and Google technology, and enables companies to make use of Google Cloud services. The role of the cloud architect is as follows:

- Designs cloud solutions according to the client’s needs.

- Implements the cloud solutions once they are designed.

- Develops secure, scalable, and reliable cloud solutions.

- Manages multi-tiered distributed applications that span multi-cloud and hybrid-cloud environments.

The Google Certified Professional Cloud Architect certification assesses your ability to

- Design and plan a cloud solution architecture

- Manage and provision the cloud solution infrastructure

- Design for security and compliance

- Analyze and optimize technical and business processes

- Manage implementations of cloud architecture

- Ensure solution and operations reliability

It is always highly recommended to go through practice exams / practice questions to make yourself familiar with the real exam pattern. Whizlabs offers a great set of practice questions for this certification exam.

Please find the below set as a test exercise that might help you to understand the exam pattern.

Q No. 1 For this question, refer to the TerramEarth case study.

TerramEarth receives daily data in the cloud using network interconnects with private on-premises data centers. A subset of the data is transmitted and processed in real time, and the rest is sent daily, when the vehicles return to home base. You have been asked to prepare a complete solution for the ingestion and management of this data, which must be both fully stored and aggregated for analytics with BigQuery.

Which of the following actions do you think is the best solution (pick 2)?

A. Real-time data is streamed to BigQuery, and each day a job creates all the required aggregate processing

B. Real-time data is sent via Pub/Sub and processed by Dataflow, which stores data in Cloud Storage and computes the aggregates for BigQuery.

C. The daily sensor data is uploaded to Cloud Storage with parallel composite uploads; when the upload completes, a Cloud Storage trigger starts a Dataflow job.

D. Daily sensor data is loaded quickly with BigQuery Data Transfer Service and processed on demand via a job.

Correct answer: B, C

Pub/Sub is the solution recommended by Google because it provides flexibility and security. It is flexible because its loosely coupled publish/subscribe mechanism lets you modify or add functionality without altering the application code.

It is secure because it guarantees reliable, many-to-many, asynchronous messaging with at-least-once delivery.

Storing the data in both Cloud Storage and BigQuery is important because you want to keep the data both in its entirety and in aggregate form.

Parallel composite uploads are recommended because the daily files are of considerable size (200 to 500 megabytes).

Using Dataflow allows you to manage processing in real-time and to use the same procedures for daily batches.

- A is wrong because it stores data only in BigQuery and does not provide real-time processing, while the requirements call for both complete and aggregated data.

- D is wrong because, here too, data is stored only in BigQuery, and because BigQuery Data Transfer Service loads data from cloud sources, not from on-premises archives. The option also does not say how the data is decompressed and processed.
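Because Pub/Sub delivery is at-least-once, the same message can arrive more than once, so the processing step should tolerate duplicates. The sketch below illustrates that idea in plain Python; it is not Pub/Sub or Dataflow client code, and the message shape (`id`, `vehicle`, `value`) and the function name `aggregate_once` are assumptions made for the example.

```python
from collections import defaultdict

def aggregate_once(messages):
    """Sum values per vehicle, ignoring redelivered messages (same id)."""
    seen_ids = set()
    totals = defaultdict(float)
    for msg in messages:
        if msg["id"] in seen_ids:
            continue  # duplicate delivery: already counted
        seen_ids.add(msg["id"])
        totals[msg["vehicle"]] += msg["value"]
    return dict(totals)

# Hypothetical telemetry; message "m2" is delivered twice.
deliveries = [
    {"id": "m1", "vehicle": "truck-1", "value": 10.0},
    {"id": "m2", "vehicle": "truck-1", "value": 5.0},
    {"id": "m2", "vehicle": "truck-1", "value": 5.0},
    {"id": "m3", "vehicle": "truck-2", "value": 7.5},
]
print(aggregate_once(deliveries))  # {'truck-1': 15.0, 'truck-2': 7.5}
```

Keeping the aggregation idempotent in this way means a redelivered message does not inflate the totals that end up in the analytics tables.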

Q No. 2 To ensure that your application will handle the load even if an entire zone fails, what should you do? Select all correct options.

A. Don’t select the “Multizone” option when creating your managed instance group.

B. Spread your managed instance group over two zones and overprovision by 100%. (for Two Zone)

C. Create a regional unmanaged instance group and spread your instances across multiple zones.

D. Overprovision your regional managed instance group by at least 50%. (for Three Zones)

Correct answer: B and D

Feedback

B is correct because if one zone fails, you still have 100% of the desired capacity in the other zone.

C is incorrect because an unmanaged instance group does not autoscale, so it cannot be relied on to handle the full load.

D is correct because you have at least 150% of the desired capacity spread over three zones, so each zone holds 50%. If any single zone fails, the two remaining zones still provide 100% of the desired capacity.

If you are creating a regional managed instance group in a region with at least three zones, Google recommends overprovisioning your instance group by at least 50%.
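The 100% and 50% figures follow from simple arithmetic, which can be sketched as below. The function name `overprovision_fraction` is hypothetical, and the model assumes instances are spread evenly across zones and that only one zone fails at a time.

```python
def overprovision_fraction(zones: int) -> float:
    """Extra capacity needed so that losing one zone still leaves 100%.

    With instances spread evenly over `zones` zones, the surviving
    (zones - 1) zones must carry the full load, so total capacity must be
    zones / (zones - 1) of the target, i.e. 1 / (zones - 1) extra.
    """
    if zones < 2:
        raise ValueError("need at least two zones to survive a zone failure")
    return 1 / (zones - 1)

for z in (2, 3):
    print(f"{z} zones: overprovision by {overprovision_fraction(z):.0%}")
# 2 zones: overprovision by 100%
# 3 zones: overprovision by 50%
```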

Q No. 3 For this question, refer to the EHR Healthcare case study.

The case study explains that: EHR hosts several legacy file-based and API integrations with on-site insurance providers, which are expected to be replaced in the coming years. Hence, there is no plan to upgrade or move these systems now.

However, EHR wants to use these APIs from its applications in Google Cloud while keeping them on-premises and private, and exposing them securely.

In other words, EHR wants to protect these APIs and the data they process, connect them only to its VPC environment in Google Cloud, with its systems in a protected DMZ that is not accessible from the Internet. Providers will be able to access integrations only through applications and with all possible precautions.

Which technique allows you to fulfill these requirements?

A. Gated Egress and VPC Service Controls

B. Cloud Endpoint

C. Cloud VPN

D. Cloud Composer

Correct Answer: A

A gated egress topology makes APIs in on-premises environments available only to processes inside Google Cloud, without direct public internet access.

Applications in Google Cloud communicate with the on-premises APIs only over private IP addresses, and are eventually exposed to the public via an Application Load Balancer and protected with VPC Service Controls.

VPC Service Controls create additional security for Cloud applications:

- Isolate services and data

- Monitor against data theft and accidental data loss

- Restrict access to authorized IPs, client context, and device parameters

- B is wrong because Cloud Endpoints is an API gateway that could create an application facade as required, but it does not support on-premises endpoints.

- C is wrong because Cloud VPN is just a way to connect the local network to a VPC.

- D is wrong because Cloud Composer is a workflow management service.

Q No. 4 For this question, refer to the Helicopter Racing League (HRL) case study.

Helicopter Racing League (HRL) wants to migrate their existing cloud service to a new platform with solutions that allow them to use and analyze video of the races both in real-time and recorded for broadcasting, on-demand archive, forecasts, and deeper insights.

There is the need to migrate the recorded videos from another provider without service interruption.

The idea is to switch the video service to GCP immediately while migrating selected content.

Users cannot directly access the content wherever it is stored, but only through the correct and secure procedure that has been specially set up.

Which of the following strategies do you think could be feasible for serving the contents and migrating the videos with minimal effort (pick 3)?

A. Use Cloud CDN with internet network endpoint group

B. Use a Cloud Function that can fetch the video from the correct source

C. Use Apigee

D. Use Cloud Storage Transfer Service

E. Use Cloud Storage streaming service

F. Use Google Transfer appliance

Correct Answers: A, C and D

(A) Cloud CDN can serve content from external backends (on-premises or in another cloud).

External backends are also called custom origins; their endpoints are defined in an internet network endpoint group (NEG).

This way the content URL is masked, and the origin should be accessible only through the CDN service.

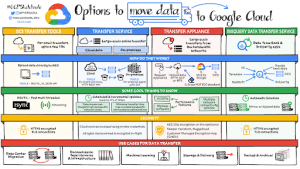

(D) For the video migration, since the videos are stored with another cloud provider, the best service is Cloud Storage Transfer Service, because it is designed for large-scale online data transfers from on-premises and other clouds, over networks with tens of Gbps. It is easy and fast.

(C) Apigee is the most powerful GCP API management product, and it can manage application services in GCP, on-premises, or in a multi-cloud environment.

- B is wrong because it is complicated, is unlikely to work well, and requires you to write code.

- E is wrong because the Cloud Storage streaming service acquires streaming data without having to archive the file first. It is used when you need to upload data from a process or on the fly.

- F is wrong because Google Transfer Appliance is meant for transferring a large amount of locally stored data; in that case it is faster to ship a storage device than to use telecommunication lines.

Q No. 5 A digital media company has recently moved its infrastructure from on-premises to Google Cloud. It has several instances under a global HTTPS load balancer. A few days ago, the application and infrastructure were subjected to DDoS attacks, and the company is looking for a service that provides a defense mechanism against them. Please select the relevant service.

A. Cloud Armor

B. Cloud-Identity Aware Proxy

C. GCP Firewalls

D. IAM policies

Answer: Option A is the CORRECT choice because Cloud Armor delivers defense at scale against infrastructure and application Distributed Denial of Service (DDoS) attacks using Google’s global infrastructure and security systems.

Option B is INCORRECT because Cloud Identity-Aware Proxy lets you establish a central authorization layer for applications accessed by HTTPS, so you can use an application-level access control model instead of relying on network-level firewalls.

Option C is INCORRECT because GCP firewall rules don’t apply to HTTP(S) load balancers, while Cloud Armor is delivered at the edge of Google’s network, helping to block attacks close to their source.

Option D is INCORRECT because IAM policies don’t help in mitigating DDoS attacks.

Q No. 6 You work in an international company and manage many GCP Compute Engine instances using the SSH and RDP protocols. For security reasons, management requires that VMs do not have multiple public IP addresses. As a result, you are no longer able to manage these VMs as before.

How can you manage access to and operations on these systems in a simple and secure way while respecting the company rules?

A. Bastion Hosts

B. NAT Instances

C. IAP’s TCP forwarding

D. Security Command Center

Correct Answer: C

IAP (Identity-Aware Proxy) is a service that lets you use SSH and RDP on your GCP VMs from the public internet, wrapping traffic in HTTPS and validating user access with IAM.

Inside GCP there is a proxy server with a listener that translates the communication and lets you operate safely without exposing your GCP resources publicly.

- A is wrong because a bastion host needs a public IP, so it is not feasible.

- B is wrong because a NAT instance needs a public IP, too. In addition, it is aimed at outgoing connectivity to the internet and blocks inbound traffic, which prevents exactly what we need.

- D is wrong because Security Command Center is a reporting service for security that offers monitoring against vulnerabilities and threats.

Q No. 7 For this question, refer to the Helicopter Racing League (HRL) case study.

Helicopter Racing League (HRL) wants to create and update predictions on the results of the championships, with data collected during the races. HRL wants to create long-term forecasts with the data from video collected both while filming (first processing) and during streaming for users. HRL also wants to exploit existing video content that is stored in object storage with their existing cloud provider.

On the advice of the Cloud Architects, they decided to use the following strategies:

A. Creating experimental forecast models with minimal code in the powerful GCP environment, using also the data already collected

B. The ability and culture to develop highly customized models that are continuously improved with the data that it gradually collects. They plan to try multiple open source frameworks

C. To Integrate teamwork and create/optimize MLOps processes

D. To Serve the models in an optimized environment

Which of the following GCP services do you think are the best given these requirements?

A. Video Intelligence

B. TensorFlow Enterprise and KubeFlow for the customized models

C. BigQuery ML

D. Vertex-AI

E. Kubernetes and TensorFlow Extend

Correct Answer: D

Each option covers part of the requirements, but Vertex AI is the best solution because it covers all of them:

Vertex AI is a platform that integrates multiple ML tools and lets you improve MLOps pipelines aimed at model maintenance and improvement.

Vertex AI includes AutoML Video, which can create experimental forecast models with minimal or no code, even with external data. Usually, data is imported into Cloud Storage to minimize latency.

Vertex AI can build and deploy models developed with many open source frameworks and supports continuous modeling and retraining using TensorFlow Extended and Kubeflow Pipelines.

In addition, it offers services for feature engineering, hyperparameter tuning, model serving, and model understanding.

- A is wrong because GCP Video Intelligence API is composed of pre-trained machine learning models for the recognition of items, places, and actions. It lacks the personalized features that HRL needs.

- B is wrong because TensorFlow Enterprise and KubeFlow cover only the requirements for highly customized models and MLOps.

- C is wrong because for BigQuery ML you need to transform data and it can integrate customized models but not develop them.

- E is wrong because Kubernetes and TensorFlow can develop and serve customized models, but they are not the right tools for easy experimentation.

Q No. 8 Helicopter Racing League (HRL) offers premium contents and, among their business requirements, has:

- To increase the number of concurrent viewers, and

- To create a merchandising revenue stream

So, they want to offer service subscriptions for their own and partner services, and to manage monetization, pay-as-use management, flat-use control, and rate limiting: all the functionality that can ensure a managed revenue stream in the simplest way.

Which is the best GCP Service to achieve that?

A. Cloud Endpoints

B. Apigee

C. Cloud Tasks

D. Cloud Billing

E. API Gateway

Correct Answer: B

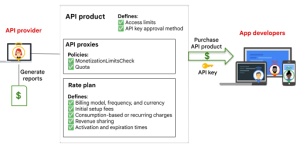

Apigee is GCP’s top product for API management.

It offers all the functionality requested: monetization, traffic control, throttling, security, and hybrid (third-party) integration.

GCP offers 3 different products for API management: Apigee, Cloud Endpoints (only GCP) and API Gateway (for Serverless workloads).

- A is wrong because Cloud Endpoints is also an API product, but it doesn’t support monetization and hybrid integration.

- C is wrong because Cloud Tasks is a developer tool for managing asynchronous task queues.

- D is wrong because Cloud Billing is for accounting, billing, and reporting on GCP services, not for end-user services.

- E is wrong because API Gateway is also an API product, but it doesn’t support monetization and hybrid integration.

Q No. 9 For this question, refer to the TerramEarth case study. TerramEarth needs to migrate legacy monolithic applications into containerized RESTful microservices. The development team is experimenting with the use of packaged procedures with containers in a completely serverless environment, using Cloud Run. Before migrating the existing code into production it was decided to perform a lift and shift of the monolithic application and to develop the new features that are required with serverless microservices.

So, they want to carry out a gradual migration, activating the new microservice functionalities while maintaining the monolithic application for all the other activities. The problem now is how to integrate the legacy monolithic application with the new microservices to have a consistent interface and simple management.

Which of the following techniques can be used (pick 3)?

A. Use an HTTP(S) Load Balancer

B. Develop a proxy inside the monolithic application for integration

C. Use Cloud Endpoints/Apigee

D. Use Serverless NEGs for integration

E. Use App Engine flexible edition

Correct answers: A, C and D

The first solution (A+D) uses HTTP(S) Load Balancing and NEGs.

Network endpoint groups (NEGs) let you define serverless backend endpoints for external HTTP(S) Load Balancing. Serverless NEGs become backends of the load balancer, and requests are forwarded to them according to URL maps. In this way, you can integrate seamlessly with the legacy application.

An alternative solution is API Management, which creates a facade and integrates different applications. GCP has 3 API Management solutions: Cloud Endpoints, Apigee, and API Gateway. API Gateway is only for serverless back ends.

- B is wrong because developing a proxy inside the monolithic application means continually updating the old app, with possible service interruptions and useless toil.

- E is wrong because App Engine’s flexible edition manages containers but cannot integrate the legacy monolithic application with the new functions.
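The URL-map idea behind the load balancer solution can be pictured as prefix-based routing: new paths go to the serverless backends, and everything else falls through to the monolith. The sketch below is a simplified model only; real URL maps also use host rules and path matchers, and the backend names and paths here are hypothetical.

```python
def route(path: str, url_map: dict, default_backend: str) -> str:
    """Return the backend for the longest matching path prefix."""
    matches = [prefix for prefix in url_map if path.startswith(prefix)]
    if not matches:
        return default_backend
    return url_map[max(matches, key=len)]

# Hypothetical layout: new features run on Cloud Run behind serverless NEGs,
# everything else is still served by the lifted-and-shifted monolith.
URL_MAP = {
    "/api/v2/orders": "serverless-neg-orders",
    "/api/v2/": "serverless-neg-gateway",
}
DEFAULT = "monolith-backend"

for p in ("/api/v2/orders/42", "/api/v2/users", "/legacy/report"):
    print(p, "->", route(p, URL_MAP, DEFAULT))
```

Because clients only ever see the load balancer address, functionality can move from the monolith to microservices one path at a time without changing the public interface.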

Q No. 10 Your company has reserved a monthly budget for your project. You want to be informed automatically of your project spend so that you can take action when you approach the limit. What should you do?

A. Link a credit card with a monthly limit equal to your budget.

B. Create a budget alert for desired percentages such as 50%, 90%, and 100% of your total monthly budget.

C. In App Engine Settings, set a daily budget at the rate of 1/30 of your monthly budget.

D. In the GCP Console, configure billing export to BigQuery. Create a saved view that queries your total spend.

Correct answer: B

Feedback

A is not correct because this will just give you the spend but will not alert you when you approach the limit.

B is correct because a budget alert will warn you when you reach the limits set.

C is not correct because those budgets apply only to App Engine, not to other GCP resources. Furthermore, this makes subsequent requests fail, rather than alerting you in time so you can mitigate appropriately.

D is not correct because if you exceed the budget, you will still be billed for it. Furthermore, GCP does not alert you when you hit that limit.
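The threshold logic of a budget alert can be sketched in a few lines. This is only an illustration of the percentages mentioned in the question, not the Cloud Billing API; the function name `crossed_thresholds` and the sample amounts are assumptions.

```python
def crossed_thresholds(spend: float, budget: float, thresholds=(0.5, 0.9, 1.0)):
    """Return the alert thresholds that the current spend has reached."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    return [t for t in thresholds if ratio >= t]

# With a monthly budget of 1000 and 920 already spent, the 50% and 90%
# alerts have fired, while the 100% alert has not.
print(crossed_thresholds(spend=920, budget=1000))  # [0.5, 0.9]
```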

Q No. 11 For this question, refer to the Mountkirk Games case study.

Mountkirk Games uses Kubernetes and Google Kubernetes Engine. For management, it is important to use an open, cloud-native platform without vendor lock-in. But they also need to use advanced APIs of GCP services, and they want to do so securely and efficiently, using standard methodologies and following Google-recommended practices.

Which of the following solutions would you recommend?

A. API keys

B. Service Accounts

C. Workload identity

D. Workload identity federation

Correct Answer: C

The preferred way to access services in a secured and authorized way is with Kubernetes service accounts, which are not the same as GCP service accounts.

With Workload Identity, you can configure a Kubernetes service account so that workloads will automatically authenticate as the corresponding Google service account when accessing GCP APIs.

Moreover, Workload Identity is the recommended way for applications in GKE to securely access GCP APIs because it lets you manage identities and authorization in a standard, secure and easy way.

- A is wrong because API keys offer minimal security and no authorization, just identification.

- B is wrong because GCP Service Accounts are GCP proprietary. Kubernetes is open and works with Kubernetes service accounts.

- D is wrong because Workload identity federation is useful when you have an external identity provider such as Amazon Web Services (AWS), Azure Active Directory (AD), or an OIDC-compatible provider.

Q No. 12 When creating firewall rules, what forms of segmentation can narrow which resources the rule is applied to? (Choose all that apply)

A. Network range in source filters

B. Zone

C. Region

D. Network tags

Correct Answer: A and D

Explanation

You can restrict network access on the firewall by network tags and network ranges/subnets.

Here is the console screenshot showing the options when you create firewall rules

– network tags and network ranges/subnets are highlighted
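As a rough illustration of how these two filters narrow a rule, the sketch below models an ingress rule with a source range and target tags. It is a simplified model, not the actual VPC firewall evaluation logic; the rule fields and the function `rule_applies` are assumptions made for the example.

```python
import ipaddress

def rule_applies(rule: dict, source_ip: str, instance_tags: set) -> bool:
    """Simplified ingress check: source range AND target tag must match."""
    src = ipaddress.ip_address(source_ip)
    in_range = any(
        src in ipaddress.ip_network(cidr) for cidr in rule["source_ranges"]
    )
    # An empty target_tags list means the rule targets every instance.
    tagged = not rule["target_tags"] or bool(instance_tags & set(rule["target_tags"]))
    return in_range and tagged

# Hypothetical rule: allow traffic from one subnet to instances tagged "web".
rule = {"source_ranges": ["10.0.1.0/24"], "target_tags": ["web"]}

print(rule_applies(rule, "10.0.1.15", {"web"}))  # True
print(rule_applies(rule, "10.0.2.15", {"web"}))  # False: outside the range
print(rule_applies(rule, "10.0.1.15", {"db"}))   # False: tag does not match
```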

Q No. 13 Helicopter Racing League (HRL) wants to migrate their existing cloud service to the GCP platform with solutions that allow them to use and analyze video of the races both in real-time and recorded for broadcasting, on-demand archive, forecasts, and deeper insights.

During a race filming, how can you manage both live playbacks of the video and live annotations so that they are immediately accessible to users without coding (pick 2)?

A. Use HTTP protocol

B. Use Video Intelligence API Streaming API

C. Use DataFlow

D. Use HLS protocol

E. Use Pub/Sub

Correct Answers: B and D

- D is correct because HTTP Live Streaming is a technology from Apple for sending live and on‐demand audio and video to a broad range of devices.

It supports both live broadcasts and prerecorded content, from storage and CDN.

- B is correct because Video Intelligence API Streaming API is capable of analyzing and getting important metadata from live media, using the AIStreamer ingestion library.

- A is wrong because HTTP protocol alone cannot manage live streaming video.

- C is wrong because Dataflow manages streaming data pipelines but cannot derive metadata from binary data, unless you use customized code.

- E is wrong because Pub/Sub could ingest metadata, but cannot analyze videos or extract labels and other information from them.

Q No. 14 What is the best practice for separating responsibilities and access for production and development environments?

A. Separate project for each environment, each team only has access to their project.

B. Separate project for each environment, both teams have access to both projects.

C. Both environments use the same project, but different VPC’s.

D. Both environments use the same project, just note which resources are in use by which group.

Correct Answer: A

Explanation

A (Correct answer) – Separate project for each environment, each team only has access to their project.

For least privilege and separation of duties, the best practice is to separate both environments into different projects: the development and production teams each get their own accounts, and each team is assigned only to its own projects.

The best practices:

- You should not use the same account for both development and production environments, regardless of how you create projects inside that account for the different environments. Use a different account for each environment, associated with a different group of users. Use projects to isolate user access to resources, not to manage users.

- Using a shared VPC allows each team to individually manage its own application resources, while enabling applications to communicate with each other securely over RFC 1918 address space. So VPCs isolate resources but not user or service accounts.

B, C, and D are incorrect

Answer B is the scenario that uses the same account for both development and production environments, attempting to isolate user access with different projects.

Answer C is the scenario that uses the same account for both development and production environments with the same project, attempting to isolate user access with network separation.

Answer D is the scenario that uses the same account for both development and production environments with the same project, attempting to isolate user access with user groups at the resource level.

You may grant roles to groups of users to set policies at the organization level, the project level, or (in some cases) the resource level (e.g., existing Cloud Storage and BigQuery ACL systems, as well as Pub/Sub topics).

The best practice: Set policies at the organization level and at the project level rather than at the resource level. This is because as new resources get added, you may want them to automatically inherit policies from their parent resource. For example, as new virtual machines get added to the project through autoscaling, they automatically inherit the policy on the project.

Q No. 15 What is the command for creating a storage bucket that has once per month access and is named ‘archive_bucket’?

A. gsutil rm -coldline gs://archive_bucket

B. gsutil mb -c coldline gs://archive_bucket

C. gsutil mb -c nearline gs://archive_bucket

D. gsutil mb gs://archive_bucket

Correct answer: C

mb makes the bucket. Nearline buckets are for data accessed about once per month. Coldline buckets are for data accessed about once per 90 days and would incur additional charges for more frequent access.

Further Explanation

Synopsis

gsutil mb [-c class] [-l location] [-p proj_id] url…

If you don’t specify a -c option, the bucket is created with the default storage class Standard Storage, which is equivalent to Multi-Regional Storage or Regional Storage, depending on whether the bucket was created in a multi-regional location or regional location, respectively.

If you don’t specify a -l option, the bucket is created in the default location (US). -l option can be any multi-regional or regional location.
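To make the choice of storage class concrete, the sketch below builds the `gsutil mb` argument list from an expected access interval. The helper `make_bucket_command` and its thresholds (30 and 90 days, taken from the explanation above) are assumptions for illustration; the command is only printed, not executed.

```python
def make_bucket_command(bucket: str, days_between_access: int, location: str = "") -> list:
    """Build a `gsutil mb` argument list for the given access pattern."""
    # Thresholds follow the explanation above:
    # roughly monthly access -> nearline, roughly every 90 days -> coldline.
    if days_between_access >= 90:
        storage_class = "coldline"
    elif days_between_access >= 30:
        storage_class = "nearline"
    else:
        storage_class = "standard"
    cmd = ["gsutil", "mb", "-c", storage_class]
    if location:
        cmd += ["-l", location]
    cmd.append(f"gs://{bucket}")
    return cmd

print(" ".join(make_bucket_command("archive_bucket", 30)))
# gsutil mb -c nearline gs://archive_bucket
```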

Q No. 16 You need to deploy an update to an application in Google App Engine. The update is risky, but it can only be tested in a live environment. What is the best way to introduce the update to minimize risk?

A. Deploy a new version of the application but use traffic splitting to only direct a small number of users to the new version.

B. Deploy the application temporarily and be prepared to pull it back if needed.

C. Warn users that a new app version may have issues and provide a way to contact you if there are problems.

D. Create a new project with the new app version, then redirect users to the new version.

Correct Answer: A

Explanation

A (Correct Answer) – Deploying a new version without assigning it as the default version will not create downtime for the application. Traffic splitting lets you easily redirect a small amount of traffic to the new version, and it can be quickly reverted without application downtime.

B – Deploy the application temporarily and be prepared to pull it back if needed. Deploying the new application version as the default requires moving all traffic to the new version. This could impact all users and disable the service while the new version is live.

C – Warn users that a new app version may have issues and provide a way to contact you if there are problems. We do not recommend this practice.

D – Create a new project with the new app version, then redirect users to the new version.

Deploying a second project requires data synchronization and having an external traffic splitting solution to direct traffic to the new application. While this is possible, with Google App Engine, these manual steps are not required.
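App Engine performs traffic splitting for you, but the underlying idea can be sketched as a deterministic split on a user identifier, so that each user consistently sees the same version. The code below is an illustration only, not App Engine's implementation; the version names, the 10% share, and the function `pick_version` are assumptions.

```python
import hashlib

def pick_version(user_id: str, new_version_share: float = 0.1) -> str:
    """Deterministically route a fixed share of users to the new version."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the hash to one of 10,000 buckets, expressed as a value in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") % 10_000 / 10_000
    return "v2-new" if bucket < new_version_share else "v1-stable"

users = [f"user-{i}" for i in range(10_000)]
share = sum(pick_version(u) == "v2-new" for u in users) / len(users)
print(f"share routed to new version: {share:.1%}")
```

Setting the share back to 0 sends everyone to the stable version again, which mirrors the quick rollback described in answer A.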

Q No. 17 Your team is writing a new application that is about to go into production. During testing, it emerges that a developer’s code allows user input to be used to modify the application and execute commands. This event has thrown everyone into despair and has raised the fear that there are other problems of this type in the system.

Which of the following services may help you?

A. Cloud Armor

B. Web Security Scanner

C. Security Command Center

D. Shielded GKE nodes

Correct Answer: B

What you need is a service that examines your code and finds out if something is vulnerable or insecure. Web Security Scanner does exactly this: it performs managed and custom web vulnerability scanning.

It performs scans for OWASP, CIS GCP Foundation, PCI-DSS (and more) published findings.

- A is wrong because Cloud Armor is a Network Security Service, with WAF rules, DDoS and application attacks defenses.

- C is wrong because the Security Command Center suite contains Web Security Scanner and many other services.

- D is wrong because Shielded GKE nodes are special and secured VMs.

Q No. 18 Your company’s development teams use service accounts, as required by internal rules.

However, they forget to delete the service accounts that are no longer used. A coordinator noticed the problem and ordered them to clean up. Now your team is faced with a huge, boring, and potentially dangerous job and has asked you for help.

What advice can you give them (pick 2)?

A. Service account insights

B. Cloud Audit Logs

C. Activity Analyzer

D. Flow logs

Correct Answers: A and C

The best ways to find out how service accounts are used are:

- Service account insights, which lists service accounts not used in the past 90 days, and

- Activity Analyzer, which reports each service account’s most recent usage.

So they let you check complementary aspects: which accounts are unused, and when accounts were last used.

- B is wrong because Cloud Audit Logs contain audit trails, that is, user activity and service modifications in GCP.

- D is wrong because Flow logs contain only network information to and from VM instances.
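The 90-day rule used by service account insights can be illustrated with a small filter over last-usage timestamps. This sketch does not call any Google Cloud API; the account names, dates, and the function `unused_accounts` are hypothetical, and the data is assumed to come from a usage report.

```python
from datetime import datetime, timedelta, timezone

def unused_accounts(last_used: dict, now: datetime, max_idle_days: int = 90) -> list:
    """Return accounts with no recorded use within the last `max_idle_days`."""
    cutoff = now - timedelta(days=max_idle_days)
    return sorted(
        email for email, used_at in last_used.items()
        if used_at is None or used_at < cutoff
    )

# Hypothetical last-authentication times; None means never used.
NOW = datetime(2024, 6, 1, tzinfo=timezone.utc)
LAST_USED = {
    "build@example.iam.gserviceaccount.com": datetime(2024, 5, 20, tzinfo=timezone.utc),
    "legacy-etl@example.iam.gserviceaccount.com": datetime(2023, 11, 2, tzinfo=timezone.utc),
    "never-used@example.iam.gserviceaccount.com": None,
}
print(unused_accounts(LAST_USED, NOW))
```

A cautious clean-up would typically disable the listed accounts first and delete them only after confirming nothing breaks.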

Q No. 19 For this question, refer to the Mountkirk Games case study.

Mountkirk Games is building a new multiplayer game that they expect to be very popular. They want to be able to improve every aspect of the game and the infrastructure. To do this, they plan to create a system for telemetry analysis. They want to minimize effort, maximize flexibility, and ease of maintenance.

They also want to be able to perform real-time analyses.

Which of the following services may help to fulfill these requirements?

A. Pub/Sub and Big Table

B. Kubeflow

C. Pub/Sub, Dataflow and BigQuery

D. Pub/Sub and Cloud Spanner

Correct Answer: C

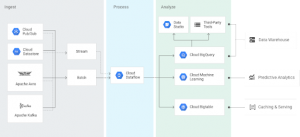

Pub/Sub ingests and stores these messages, from both the user devices and the game server.

Dataflow can transform the data into a schema-based format and process it in real time.

BigQuery will perform analytics.

- A is wrong because Big Table is not the service for real-time analytics

- B is wrong because Kubeflow is used for Machine Learning pipelines.

- D is wrong because Cloud Spanner is a global SQL Database and not an analytics tool.

Q No. 20 You work for a multinational company and are migrating an Oracle database to a multi-region Spanner cluster. You have to plan the migration activities and the DBAs have told you that the migration will be almost immediate because no non-standard ISO / IEC features or stored procedures are used.

But you know that there is an element that will necessarily require some maintenance work.

Which is this element?

A. You need to drop the secondary indexes

B. You have to change most of the primary keys

C. You need to manage table partitions

D. You have to change the schema design of many tables

Correct Answer: B

With traditional SQL databases, it is advisable to use numerical primary keys in sequence. Oracle DB, for example, has an object type that creates progressive values, called a sequence.

Instead, it is important that distributed databases do not use progressive keys, because the tables are split among the nodes in primary key order and therefore all the inserts would take place only at one point, degrading performance.

This problem is called hotspotting.

- A is wrong because Spanner handles secondary indexes.

- C is wrong because Spanner automatically manages the distribution of data in the clusters.

- D is wrong because we already know that the structuring of the tables follows the standards.
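Hotspotting can be shown with a toy model in which the key space is divided into equal contiguous ranges. Sequential keys all land in the same range, while bit-reversed keys spread out. Real Spanner splits are created dynamically, so the fixed four-way split below, like the function names, is purely an assumption for illustration.

```python
def bit_reverse_64(n: int) -> int:
    """Reverse the bits of a 64-bit unsigned integer."""
    return int(f"{n:064b}"[::-1], 2)

def split_for(key: int, num_splits: int = 4) -> int:
    """Toy model: key space divided into equal contiguous ranges (splits)."""
    return key * num_splits // 2**64

sequential = range(1, 9)
print([split_for(k) for k in sequential])
# [0, 0, 0, 0, 0, 0, 0, 0]  -> every insert hits the same split
print([split_for(bit_reverse_64(k)) for k in sequential])
# [2, 1, 3, 0, 2, 1, 3, 0]  -> inserts are spread across splits
```

Random identifiers such as UUIDs achieve a similar spread, which is why migrated sequence-based keys usually need to be replaced or transformed.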

Q No. 21 You need to take streaming data from thousands of Internet of Things (IoT) devices, ingest it, run it through a processing pipeline, and store it for analysis. You want to run SQL queries against your data for analysis. What services in which order should you use for this task?

A. Cloud Dataflow, Cloud Pub/Sub, BigQuery

B. Cloud Pub/Sub, Cloud Dataflow, Cloud Dataproc

C. Cloud Pub/Sub, Cloud Dataflow, BigQuery

D. App Engine, Cloud Dataflow, BigQuery

Correct Answer: C

Explanation

C (Correct answer) – Cloud Pub/Sub, Cloud Dataflow, BigQuery

Cloud Pub/Sub is a simple, reliable, scalable foundation for stream analytics and event-driven computing systems. As part of Google Cloud’s stream analytics solution, the service ingests event streams and delivers them to Cloud Dataflow for processing and BigQuery for analysis as a data warehousing solution. Relying on the Cloud Pub/Sub service for delivery of event data frees you to focus on transforming your business and data systems with applications such as:

- Real-time personalization in gaming

- Fast reporting, targeting and optimization in advertising and media

- Processing device data for healthcare, manufacturing, oil and gas, and logistics

- Syndicating market-related data streams for financial services

Also, use Cloud Dataflow as a convenient integration point to bring predictive analytics to fraud detection, real-time personalization and similar use cases by adding TensorFlow-based Cloud Machine Learning models and APIs to your data processing pipelines.

BigQuery provides a flexible, powerful foundation for Machine Learning and Artificial Intelligence. It integrates with Cloud ML Engine and TensorFlow to train powerful models on structured data. Moreover, BigQuery’s ability to transform and analyze data helps you get your data in shape for Machine Learning.

Other solutions may work one way or another, but only the combination of these 3 components integrates well for data ingestion, collection, real-time analysis, and data mining in a highly durable, elastic, and parallel manner.

A – Wrong order. You don’t normally ingest IoT data directly into Dataflow.

B – Dataproc is the GCP version of Apache Hadoop/Spark. Although it has the SQL-like Hive, it does not provide a SQL interface as sophisticated as BigQuery’s.

D – App Engine is a compute resource. It is not designed to ingest IoT data the way Pub/Sub is. Also, it is a rare use case for App Engine to ingest data directly into Dataflow.
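The ingest, process, store-and-query order in answer C can be sketched with standard-library stand-ins. This is only a toy model under stated assumptions: `queue.Queue` stands in for a Pub/Sub topic, a plain function for a Dataflow transform, and an in-memory SQLite table for BigQuery. None of the names below are Google Cloud APIs, and the temperature fields are hypothetical.

```python
import json
import queue
import sqlite3
from typing import List, Tuple


def publish(topic: queue.Queue, device_id: str, temperature_c: float) -> None:
    """Ingest step (Pub/Sub stand-in): enqueue one device message as JSON."""
    topic.put(json.dumps({"device_id": device_id, "temperature_c": temperature_c}))


def transform(raw: str) -> Tuple[str, float]:
    """Processing step (Dataflow stand-in): parse and convert to Fahrenheit."""
    event = json.loads(raw)
    return event["device_id"], event["temperature_c"] * 9 / 5 + 32


def run_pipeline(topic: queue.Queue, db: sqlite3.Connection) -> None:
    """Drain the queue, transform each message, and store the rows."""
    db.execute(
        "CREATE TABLE IF NOT EXISTS readings (device_id TEXT, temperature_f REAL)"
    )
    while not topic.empty():
        db.execute("INSERT INTO readings VALUES (?, ?)", transform(topic.get()))
    db.commit()


def average_by_device(db: sqlite3.Connection) -> List[Tuple[str, float]]:
    """Analysis step (BigQuery stand-in): run a SQL aggregate over stored rows."""
    rows = db.execute(
        "SELECT device_id, AVG(temperature_f) FROM readings "
        "GROUP BY device_id ORDER BY device_id"
    )
    return rows.fetchall()


if __name__ == "__main__":
    topic = queue.Queue()
    publish(topic, "sensor-a", 20.0)
    publish(topic, "sensor-b", 25.0)
    db = sqlite3.connect(":memory:")
    run_pipeline(topic, db)
    print(average_by_device(db))
```

The point of the sketch is the ordering: messages are buffered first, transformed second, and only then stored where SQL can be run against them.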

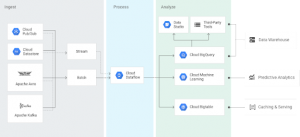

The two pictures below illustrate the typical roles played by Dataflow and Pub/Sub

Dataflow

PubSub

Q No. 22 You work in a multinational company that is migrating to Google Cloud. The head office has the largest data center and manages a connection network to offices in various countries around the world.

Each country has its own projects to manage the specific procedures of each location, but the management wants to create an integrated organization while maintaining the independence of the projects for the various branches.

How do you plan to organize Networking?

A. Peered VPC

B. Cloud Interconnect

C. Shared VPC

D. Cloud VPN and Cloud Router

Correct Answer: C

The head office manages the global network, so the networking specialists mainly work there.

Shared VPC lets you create a single, global VPC managed by a central project (the host project).

All the other projects (service projects) maintain their independence, but they don’t have the burden of network management.

This gives a balance between control policies at the network level and freedom to manage application projects.

- A is wrong because with VPC peering there is no organization hierarchy.

- B is wrong because Cloud Interconnect is for on-premises networking.

- D is wrong because Cloud VPN and Cloud Router are used for connectivity between the Cloud and on-premises networks.

Q No. 23 You work as an architect in a company that develops statistical studies on big data and produces reports for its customers. Analysts often allocate VMs to process data with ad hoc development procedures.

You have been called by the administrative department because they have been billed for a very large number of Compute Engine instances, which you also consider excessive in relation to operational needs.

How can you find out, without checking them one by one, which of these systems may have been accidentally left active by junior technicians?

A. Use the Recommender CLI Command

B. Use Cloud Billing Reports

C. Use Idle Systems Report in GCP Console

D. Use Security Command Center Reports

Correct Answer: A

This command:

gcloud recommender recommendations list --recommender=google.compute.instance.IdleResourceRecommender

lists all the idle VMs, based on Cloud Monitoring metrics from the previous 14 days.

There is no equivalent in the Console.
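Because the recommendations are listed per location, checking several zones means repeating the command. The sketch below only builds the argument lists and does not call gcloud itself. The `--project`, `--location` and `--format=json` flags are added here as assumptions about how the command is typically completed, and the project and zone names are placeholders.

```python
import shlex
from typing import List

IDLE_VM_RECOMMENDER = "google.compute.instance.IdleResourceRecommender"


def idle_vm_command(project: str, zone: str) -> List[str]:
    """Build the gcloud argument list for idle-VM recommendations in one zone."""
    return [
        "gcloud", "recommender", "recommendations", "list",
        f"--project={project}",    # assumption: target project passed explicitly
        f"--location={zone}",      # assumption: one zone per invocation
        f"--recommender={IDLE_VM_RECOMMENDER}",
        "--format=json",           # machine-readable output for later parsing
    ]


if __name__ == "__main__":
    # Placeholder project and zones; replace with real values before running.
    for zone in ("us-central1-a", "europe-west1-b"):
        print(shlex.join(idle_vm_command("my-project", zone)))
```

Each printed line can be pasted into a shell, or the list can be passed to `subprocess.run` on a machine where gcloud is installed and authenticated.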

- B is wrong because Cloud Billing Reports don’t give details about activities.

- C is wrong because there is no Idle Systems Report in the GCP Console.

- D is wrong because the Security Command Center is used for Security threats, not for ordinary technical operations.

Q No. 24 You are now working for an international company that has many Kubernetes projects on various Cloud platforms. These projects mainly involve microservices web applications and run either in GCP or in AWS. They have many inter-relationships and involve many teams across development, staging, and production environments.

Your new task is to find the best way to organize these systems.

You need a solution for gaining control over application organization and networking: monitoring functionality, performance, and security in a complex environment.

Which of the following services may help you?

A. Traffic Director

B. Istio on GKE

C. Apigee

D. App Engine Flexible Edition

Correct Answer: A

What you need is Service Management with capabilities for real-time monitoring, security, and telemetry data collection in a multi-cloud microservices environment.

This is called a Service Mesh.

The most popular product in this category is Istio, which collects traffic flows and telemetry data between microservices and enforces security with the help of proxies that operate without changes to application code.

Traffic Director can help in a global service mesh because it is a fully managed Service Management control plane.

With Traffic Director, you can manage on-premises and multi-cloud destinations, too.

- B is incorrect because Istio on Google Kubernetes Engine is a tool for GKE that offers automated installation and management of Istio Service Mesh. So, only inside GCP.

- C is incorrect because Apigee is a powerful tool for API Management suitable also for on-premise and multi-cloud environments. But API Management is for managing application APIs and Service Mesh is for managing Service to Service communication, security, Service Levels, and control. Similar services with different scopes.

- D is incorrect because App Engine Flexible Edition is a PaaS for microservices applications within Google Cloud.

Q No. 25 Case Study TerramEarth 2

In order to speed up the transmission, TerramEarth deployed 5G devices in their vehicles with the goal of reducing unplanned vehicle downtime to a minimum.

But a set of older vehicles will still be using the old technology for a while.

So, on these vehicles, data is stored locally and can be accessed for analysis only when a vehicle is serviced. In this case, data is downloaded via a maintenance port.

You need to integrate this old procedure with the new one, building a workflow, in the simplest way.

Which of the following tools would you choose?

A. Cloud Composer

B. Cloud Interconnect

C. App Engine

D. Cloud Build

Correct Answer: A

A is correct. Cloud Composer is a fully managed workflow service that can author, schedule, and monitor pipelines that span across clouds and on-premises data centers.

B is wrong. Cloud Interconnect gives fast (10/100Gb) connections to your Google VPC. It is too expensive to connect the field offices in this way.

C is wrong. App Engine is a PaaS, so you have to write a program for it. It is not simple at all.

D is wrong. Cloud Build is a service that builds your code on GCP for deployment; any kind of code.

A Cloud Composer task, when started with automated commands, uses Cloud Identity-Aware Proxy for security, controls processing, and manages storage with a Cloud Storage bucket.

In this way, it is possible in a simple, standard, and safe way to automate all the processes.

Once the files are correctly stored, a triggered procedure can start the new and integrated procedures.
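Cloud Composer workflows are defined as task graphs in which each task runs only after the tasks it depends on. As a minimal stand-in that needs no Composer environment, the sketch below models such a graph with the standard-library `graphlib` module and computes a valid run order. The task names are hypothetical and this is not Composer code.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each task maps to the set of tasks that must finish before it starts.
# Task names are hypothetical, chosen to mirror the maintenance-port flow.
WORKFLOW: Dict[str, Set[str]] = {
    "upload_to_bucket": set(),
    "validate_files": {"upload_to_bucket"},
    "load_for_analytics": {"validate_files"},
    "notify_team": {"load_for_analytics"},
}


def execution_order(workflow: Dict[str, Set[str]]) -> List[str]:
    """Return one order in which every task runs after its dependencies."""
    return list(TopologicalSorter(workflow).static_order())


if __name__ == "__main__":
    for step, task in enumerate(execution_order(WORKFLOW), start=1):
        print(step, task)
```

In a real workflow the same dependency structure would be declared in the orchestrator, which also handles scheduling, retries, and monitoring.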

For more details, please refer to the URLs below:

Reference

https://cloud.google.com/composer/

https://cloud.google.com/composer/docs/concepts/cloud-storage
